Database Reference

In-Depth Information

Table 7.1

Conditional Entropy Example

Cellular Telephone Unknown

P(contact)

0.6435

0.0680

0.2885

P(subscribed=yes | contact) 0.1399

0.0809

0.0347

P(subscribed=no | contact) 0.8601

0.9192

0.9653

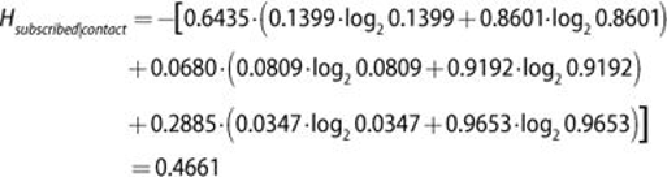

The conditional entropy of the

contact

attribute is computed as shown here.

Computation inside the parentheses is on the entropy of the class labels within

a single

contact

value. Note that the conditional entropy is always less than

or equal to the base entropy—that is, . The conditional

entropy is smaller than the base entropy when the attribute and the outcome are

correlated. In the worst case, when the attribute is uncorrelated with the outcome,

the conditional entropy equals the base entropy.

The information gain of an attribute

A

is defined as the difference between the base

entropy and the conditional entropy of the attribute, as shown in

Equation 7.3

.

In the bank marketing example, the information gain of the

contact

attribute is

shown in

Equation 7.4

.

Information gain compares the degree of purity of the parent node before a split

with the degree of purity of the child node after a split. At each split, an attribute

with the greatest information gain is considered the most informative attribute.

Information gain indicates the purity of an attribute.