Although the volume of Big Data tends to attract the most attention, the variety and velocity of the data generally provide a more apt definition of Big Data. (Big Data is sometimes described as having 3 Vs: volume, variety, and velocity.) Due to its size or structure, Big Data cannot be efficiently analyzed using only traditional databases or methods. Big Data problems require new tools and technologies to store and manage the data and to realize the business benefit. These new tools and technologies enable the creation, manipulation, and management of large datasets and the storage environments that house them. Another definition of Big Data comes from the McKinsey Global Institute report from 2011:

Big Data is data whose scale, distribution, diversity, and/or timeliness require the use of new technical architectures and analytics to enable insights that unlock new sources of business value.

McKinsey & Co.; Big Data: The Next Frontier for Innovation, Competition, and Productivity [1]

McKinsey's definition of Big Data implies that organizations will need new data architectures and analytic sandboxes, new tools, new analytical methods, and an integration of multiple skills into the new role of the data scientist, which will be discussed in Section 1.3.

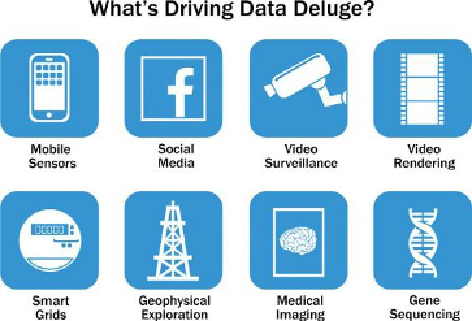

Figure 1.1 highlights several sources of the Big Data deluge.

Figure 1.1 What's driving the data deluge

The rate of data creation is accelerating, driven by many of the items in Figure 1.1. Social media and genetic sequencing are among the fastest-growing sources of Big Data, and both are examples of nontraditional data sources being used for analysis.