Database Reference

In-Depth Information

The remainder of this section focuses on the general methods for inferring parameters of known distributions. For well-known distributions, closed-form expressions for estimating parameters given data have been developed.

Inferring Parameters

If $x_1, \ldots, x_n$ are observations of random variables drawn from an underlying distribution F(x), the parameters of F(x) can be inferred using a method known as “maximum likelihood estimation,” or simply “maximum likelihood.” As the name implies, this procedure finds the values of the parameters of the distribution that maximize the likelihood function of the distribution.

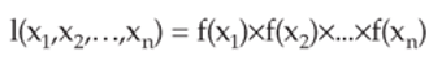

The likelihood function is simply the product of the probability density

function for each of the observed values:
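To make the definition concrete, here is a minimal Python sketch that evaluates the likelihood as a product of densities and picks the parameter value that maximizes it by brute-force grid search. It assumes an exponential distribution purely for illustration (the text does not use it); the data values, the grid, and the function names are all hypothetical.

```python
import math

def exponential_pdf(x, rate):
    """Density of an exponential distribution with the given rate, for x >= 0."""
    return rate * math.exp(-rate * x)

def likelihood(data, rate):
    """Likelihood: the product of the density evaluated at each observation."""
    return math.prod(exponential_pdf(x, rate) for x in data)

def log_likelihood(data, rate):
    """Log likelihood: the sum of log densities (same maximizer, avoids underflow for large samples)."""
    return sum(math.log(exponential_pdf(x, rate)) for x in data)

# Hypothetical observations, invented for illustration only.
data = [0.8, 1.9, 0.3, 1.2, 2.4]

# Brute-force search: evaluate every candidate rate on a grid and keep the best one.
candidates = [i / 1000 for i in range(1, 5001)]
best_by_product = max(candidates, key=lambda r: likelihood(data, r))
best_by_log = max(candidates, key=lambda r: log_likelihood(data, r))

# For the exponential distribution, the known closed-form MLE of the rate is 1 / (sample mean).
closed_form = len(data) / sum(data)
print(best_by_product, best_by_log, closed_form)
```

The grid search is only a readable stand-in for the calculus-based approach. Maximizing the product and maximizing the sum of logs select the same rate, and both should match the closed-form estimate to within the grid spacing of 0.001.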

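Finding the parameter value that maximizes the likelihood function is usually accomplished by minimizing the negative of the logarithm of the likelihood function. Because the logarithm is monotonically increasing, this yields the same parameter value, and it turns the product into a sum, which is much easier to differentiate. Taking the derivative of the negative log likelihood, setting the resulting derivative to 0, and then solving for the parameter locates the minimum of this function (provided the stationary point found is a minimum rather than a maximum). If the distribution has more than one parameter, the partial derivative with respect to each parameter is set to 0 and the resulting equations are solved together. For example, the binomial example from earlier, where the number of red balls in each group of 5 draws was observed, can be used to estimate p, the probability of drawing a red ball (that is, the proportion of red balls in the urn). For k groups of 5 draws, the negative log likelihood and its derivative with respect to p are:

$$-\log L(p) = -\sum_{i=1}^{k}\left[\log\binom{5}{x_i} + x_i \log p + (5 - x_i)\log(1 - p)\right]$$

$$\frac{d}{dp}\left[-\log L(p)\right] = -\frac{1}{p}\sum_{i=1}^{k} x_i + \frac{1}{1 - p}\sum_{i=1}^{k}(5 - x_i)$$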

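The binomial coefficient $\binom{5}{x_i}$ plays no part in the derivative: it does not depend on p, so it drops out when the derivative is taken with respect to p. Setting the derivative to 0 and multiplying through by $p(1 - p)$ gives $(1 - p)\sum_i x_i = p\sum_i (5 - x_i)$, which simplifies to $\sum_i x_i = 5kp$. Solving for p therefore yields

$$\hat{p} = \frac{x_1 + \cdots + x_k}{5k}$$

where $x_i$ is the number of red balls observed in the i-th group of 5 draws.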
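The derivation can be checked numerically. The Python sketch below assumes, as the binomial model does, that each group of 5 draws is independent with a constant probability p of drawing red; the counts and function names are hypothetical. It computes the closed-form estimate, confirms that the derivative of the negative log likelihood is (numerically) zero there, and compares the result against a brute-force grid minimization.

```python
import math

def neg_log_likelihood(p, counts, draws=5):
    """Negative log likelihood of p for red-ball counts, one count per group of `draws` draws."""
    total = 0.0
    for x in counts:
        # log C(draws, x) does not depend on p, so it shifts the curve but not its minimum.
        total += math.log(math.comb(draws, x)) + x * math.log(p) + (draws - x) * math.log(1 - p)
    return -total

def neg_log_likelihood_derivative(p, counts, draws=5):
    """d/dp of the negative log likelihood: -sum(x)/p + sum(draws - x)/(1 - p)."""
    return -sum(counts) / p + sum(draws - x for x in counts) / (1 - p)

def mle_p(counts, draws=5):
    """Closed-form solution of derivative == 0: (x_1 + ... + x_k) / (draws * k)."""
    return sum(counts) / (draws * len(counts))

# Hypothetical red-ball counts from k = 6 groups of 5 draws.
counts = [2, 3, 1, 2, 4, 2]
p_hat = mle_p(counts)

# Numerical check: minimize the negative log likelihood over a fine grid of p values in (0, 1).
grid = [i / 10000 for i in range(1, 10000)]
p_grid = min(grid, key=lambda p: neg_log_likelihood(p, counts))

print(p_hat, p_grid, neg_log_likelihood_derivative(p_hat, counts))
```

With these counts, the sum of the $x_i$ is 14 and k = 6, so the closed form gives 14/30 ≈ 0.467, and the grid minimum agrees to within the grid spacing of 0.0001.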

Computing maximum likelihood estimates for parameters of well-known distributions is usually not required. The maximum likelihood solutions, if