Database Reference

In-Depth Information

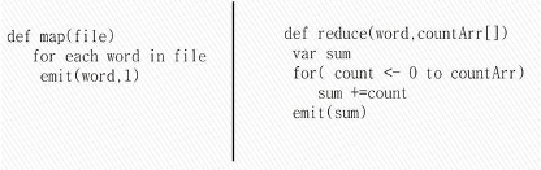

Here each map task runs locally on data node where the data resides. Then data is

implicitly shuffled and sorted by the Hadoop MapReduce framework and finally given

to reduce tasks, after the reduction process outputs it into a file.

To understand it on a lower level, you may also refer to the pseudo code representa-

tion in

Figure 5-3

.

Figure 5-3

.

Word count MapReduce algorithm

Read and Store Tweets into HDFS

Since we have followed Twitter-based examples throughout this topic, let's again use

real-time tweets for an HDFS/MapReduce example. The reason to start with HDFS is

to get started with running MapReduce over default HDFS, and then later explain how

it can be executed over an external file system (e.g., Cassandra file system). In this

section we will discuss how to set up and fetch tweets about “apress”. In our example,

we will stream in some tweets about “Apress publications” and store them on HDFS.

The purpose of this recipe is to set up and configure Hadoop processes and lay the

foundation for the next recipe in the “Cassandra MapReduce Integration” section.

Reading Tweets

To start, let's build a sample Maven-based Java project to demonstrate reading tweets

from Twitter. (For more details on using Maven, you may also refer to

ht-

1.

First, let's generate a Maven project: