
n(p)

normal

normal

p

normal

normal

(a)

(b)

(c)

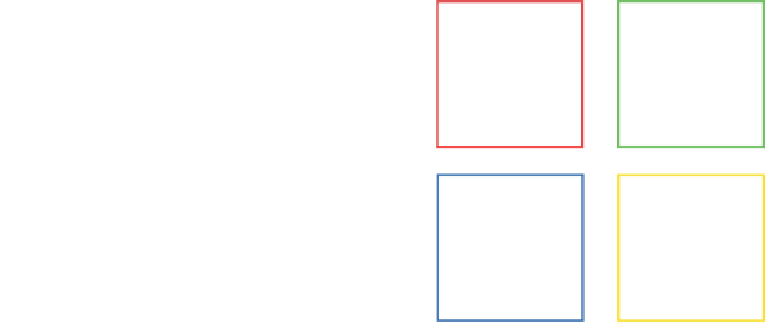

Figure 8.30.

(a) The cylindrical bins used to create a spin image. (b) Several points on a

mesh. (c) The corresponding spin images. A darker value indicates that more points are in the

corresponding bin.

Note that the cylinder can “spin” around the normal vector while still generating the same descriptor (hence the name), avoiding the need to estimate a coordinate orientation on the tangent plane at the point. The similarity between two spin images can be simply measured using either their normalized cross-correlation or their Euclidean distance. Johnson and Hebert also recommended using principal component analysis to reduce the dimensionality of spin images prior to comparison. Another option is to use a multiresolution approach to construct a hierarchy of spin images at each point with different bin sizes [121].
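The construction and comparison described above can be sketched in Python with NumPy. This is a minimal sketch, assuming each neighbor x of the basis point p is mapped to the usual spin-image coordinates: α, its distance from the line through p along the normal n, and β, its signed height along n. The function names, bin size, and bin counts are hypothetical choices for illustration, and each point is hard-binned (no interpolation between neighboring bins). The usage lines check the rotation invariance that gives the descriptor its name.

```python
import numpy as np


def spin_image(p, n, points, bin_size=0.1, n_alpha=10, n_beta=20):
    """Minimal spin image at basis point p with normal n (hypothetical parameters).

    alpha: distance of each point from the line through p along n.
    beta:  signed height of each point along n.
    Points are hard-binned (no interpolation); points outside the
    alpha/beta ranges are ignored.
    """
    n = n / np.linalg.norm(n)
    d = points - p                                 # offsets from the basis point
    beta = d @ n                                   # signed height along the normal
    alpha = np.sqrt(np.maximum(np.sum(d * d, axis=1) - beta**2, 0.0))
    half_height = bin_size * n_beta / 2            # beta bins are centered on 0
    hist, _, _ = np.histogram2d(
        alpha, beta,
        bins=[n_alpha, n_beta],
        range=[[0.0, bin_size * n_alpha], [-half_height, half_height]],
    )
    return hist


def normalized_cross_correlation(a, b):
    """Similarity in [-1, 1]; 1 means equal up to a positive scale and an offset."""
    a = a.ravel() - a.mean()
    b = b.ravel() - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


# Usage: rotating the cloud about the normal leaves the spin image unchanged.
rng = np.random.default_rng(0)
cloud = rng.uniform(-1.0, 1.0, size=(500, 3))
p = np.zeros(3)
n = np.array([0.0, 0.0, 1.0])
theta = 0.7
R = np.array([[np.cos(theta), -np.sin(theta), 0.0],
              [np.sin(theta),  np.cos(theta), 0.0],
              [0.0,            0.0,           1.0]])
si_original = spin_image(p, n, cloud)
si_rotated = spin_image(p, n, cloud @ R.T)
print(normalized_cross_correlation(si_original, si_rotated))  # close to 1.0
print(np.linalg.norm(si_original - si_rotated))               # Euclidean distance, close to 0
```

Either score can feed a matcher: cross-correlation is insensitive to an overall scaling of bin counts (for example, from different sampling densities), whereas the Euclidean distance is not.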

Shape contexts were originally proposed by Belongie et al. [38] for 2D shapes and extended to 3D point clouds by Frome et al. [155]. As illustrated in Figure 8.31, a 3D shape context also creates a histogram using bins centered around the selected point, but the bins are sections of a sphere. The partitions are uniformly spaced in the azimuth angle and normal direction, and logarithmically spaced in the radial direction. Since the bins now have different volumes, larger bins and those with more points are weighted less. As with spin images, the “up” direction of the sphere is defined by the estimated normal at the selected point. Due to the difficulty in establishing a reliable orientation on the tangent plane, one 3D shape context is compared to another by fixing one descriptor and evaluating its minimal Euclidean distance over the descriptors generated by several possible rotations around the normal of the other point.
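A 3D shape context along these lines can be sketched as follows. Several details the paragraph leaves open are assumptions here, all hypothetical: the bin counts and radii, that the “normal direction” partition is uniform in the elevation (polar) angle measured from the normal, and a per-point weight of 1/(ρ·∛V), where V is the volume of the point's bin and ρ counts the points within a small radius. That weight is one way to make larger bins and denser neighborhoods count less. Because the tangent-plane axes are chosen arbitrarily, a rotation about the normal by one azimuth bin becomes a cyclic shift along the azimuth axis, so the comparison takes the minimum distance over those shifts.

```python
import numpy as np


def tangent_frame(n):
    """Unit normal n plus an arbitrary orthonormal basis (u, v) of its tangent plane."""
    n = n / np.linalg.norm(n)
    helper = np.array([1.0, 0.0, 0.0]) if abs(n[0]) < 0.9 else np.array([0.0, 1.0, 0.0])
    u = np.cross(n, helper)
    u /= np.linalg.norm(u)
    v = np.cross(n, u)                     # (u, v, n) is right-handed
    return n, u, v


def shape_context_3d(p, n, points, r_min=0.05, r_max=1.0,
                     n_az=12, n_el=11, n_rad=15, density_radius=0.1):
    """Hypothetical 3D shape context: a (n_az, n_el, n_rad) weighted histogram."""
    n, u, v = tangent_frame(n)
    d = points - p
    r = np.linalg.norm(d, axis=1)
    keep = (r >= r_min) & (r < r_max)
    d, r = d[keep], r[keep]

    # Bin indices: uniform azimuth and elevation (polar angle from n), log-spaced radius.
    az = np.arctan2(d @ v, d @ u) % (2 * np.pi)
    el = np.arccos(np.clip((d @ n) / r, -1.0, 1.0))
    az_idx = np.minimum((az / (2 * np.pi / n_az)).astype(int), n_az - 1)
    el_idx = np.minimum((el / (np.pi / n_el)).astype(int), n_el - 1)
    rad_edges = np.geomspace(r_min, r_max, n_rad + 1)
    rad_idx = np.clip(np.searchsorted(rad_edges, r, side="right") - 1, 0, n_rad - 1)

    # Exact volume of each spherical-shell sector (the same for every azimuth bin).
    el_edges = np.linspace(0.0, np.pi, n_el + 1)
    d_cos = np.cos(el_edges[:-1]) - np.cos(el_edges[1:])
    d_r3 = (rad_edges[1:] ** 3 - rad_edges[:-1] ** 3) / 3.0
    volume = (2 * np.pi / n_az) * d_cos[:, None] * d_r3[None, :]

    # Local density: number of points within density_radius (the point itself included).
    gaps = np.linalg.norm(points[keep][:, None, :] - points[None, :, :], axis=2)
    rho = np.sum(gaps < density_radius, axis=1)

    # Larger bins and denser neighborhoods contribute less.
    weights = 1.0 / (rho * np.cbrt(volume[el_idx, rad_idx]))
    hist = np.zeros((n_az, n_el, n_rad))
    np.add.at(hist, (az_idx, el_idx, rad_idx), weights)
    return hist


def shape_context_distance(a, b):
    """Fix a; take the minimum Euclidean distance over the n_az azimuthal rotations of b."""
    return min(np.linalg.norm(a - np.roll(b, s, axis=0)) for s in range(b.shape[0]))


# Usage: a quarter turn about the normal is a shift of 3 azimuth bins (of 12).
rng = np.random.default_rng(1)
cloud = rng.uniform(-1.0, 1.0, size=(600, 3))
p = np.zeros(3)
n = np.array([0.0, 0.0, 1.0])
quarter_turn = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
sc_a = shape_context_3d(p, n, cloud)
sc_b = shape_context_3d(p, n, cloud @ quarter_turn.T)
print(shape_context_distance(sc_a, sc_b))  # near 0: the best shift undoes the rotation
```

In this sketch the set of candidate rotations is tied to the azimuth bin width; rotations that fall between bin boundaries are only approximated by the nearest shift.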

However, neither approach specifies a method for reliably and repeatably choosing the 3D points on which the descriptors are centered. In practice, a set of feature points from one scan is chosen randomly and compared to all the descriptors from the points in the other scan. While this approach works moderately well for small, complete, uncluttered 3D models of single objects, it can lead to slow or poor-quality matching for large, complex scenes.
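The random-sampling strategy can be sketched as a brute-force nearest-neighbor search over precomputed descriptors (for example, flattened spin images or shape contexts). The function name and the use of plain Euclidean distance are illustrative assumptions. The distance array has one entry per sampled feature per point in the other scan, which illustrates why the approach becomes slow for large scenes.

```python
import numpy as np


def match_random_features(desc_a, desc_b, n_features, rng):
    """Match randomly chosen descriptors of scan A against every descriptor of scan B.

    desc_a: (Na, D) descriptors, one row per point of scan A.
    desc_b: (Nb, D) descriptors, one row per point of scan B.
    Returns the chosen indices in A, their best matches in B, and the match distances.
    """
    chosen = rng.choice(len(desc_a), size=n_features, replace=False)
    # (n_features, Nb) squared Euclidean distances; cost grows as n_features * Nb * D.
    diff = desc_a[chosen][:, None, :] - desc_b[None, :, :]
    dist2 = np.einsum("ijk,ijk->ij", diff, diff)
    best = np.argmin(dist2, axis=1)
    return chosen, best, np.sqrt(dist2[np.arange(n_features), best])


# Usage: scan B holds the same descriptors as scan A in shuffled order.
rng = np.random.default_rng(2)
desc_a = rng.normal(size=(200, 16))
perm = rng.permutation(200)
desc_b = desc_a[perm]
chosen, best, dists = match_random_features(desc_a, desc_b, n_features=20, rng=rng)
print(np.all(perm[best] == chosen))  # True: each sample finds its own copy
```

With real scans the descriptors never match exactly, and clutter or missing data can make the nearest neighbor a wrong point, which is the poor-quality failure mode mentioned above.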

As we mentioned earlier, LiDAR scanners are often augmented with RGB cameras that can associate each 3D point with a color. Actually, the associated image is usually