
C

0

C

1

C

2

C

3

C

4

GPFS

GPFS

GPFS

GPFS

GPFS

VSD

VSD

VSD

VSD

VSD

Federation Switch

IOS

0

IOS

1

IOS

2

To k e n

Mgmt.

Server

VSD

VSD

VSD

RAID

RAID

RAID

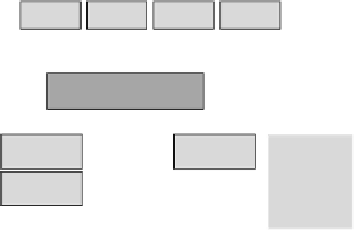

Figure 2.2

GPFS deployments include software on clients and servers. Clients communicate through a virtual shared disk (or network shared disk) component to access storage attached to I/O servers. Redundant links connect I/O servers to storage so that storage is accessible from more than one I/O server. A token management server coordinates access by clients to the shared storage space.

a large stripe unit, such as 4 MB. This allows for the maximum possible concurrency, but it is not efficient for small files.
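To see why a large stripe unit favors large accesses but does little for small files, the following Python sketch maps a byte range onto the servers that hold it. It is a minimal illustration that assumes simple round-robin placement of 4 MB stripe units across three I/O servers, mirroring IOS 0 to IOS 2 in Figure 2.2. GPFS's actual block allocation may differ, and `servers_touched` is a hypothetical helper.

```python
STRIPE_UNIT = 4 * 1024 * 1024  # 4 MB stripe unit, as in the text
NUM_SERVERS = 3                # assumption: three I/O servers, like IOS 0-2


def servers_touched(offset: int, length: int,
                    stripe_unit: int = STRIPE_UNIT,
                    num_servers: int = NUM_SERVERS) -> set[int]:
    """Return the servers holding bytes [offset, offset + length),
    assuming stripe unit k lives on server k % num_servers."""
    if length <= 0:
        return set()
    first = offset // stripe_unit                 # first stripe unit touched
    last = (offset + length - 1) // stripe_unit   # last stripe unit touched
    return {unit % num_servers for unit in range(first, last + 1)}


# A 12 MB access spans three stripe units and engages every server...
print(servers_touched(0, 12 * 1024 * 1024))  # {0, 1, 2}
# ...but a 10 KB file fits in one stripe unit and is served by one server.
print(servers_touched(0, 10 * 1024))         # {0}
```

A small file never crosses a stripe-unit boundary in this model, so a single server handles all of its I/O and the file gains no parallelism from striping.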

For reliability, these arrays are often configured as RAIDs. GPFS also supports a replication feature that can be used with or without underlying RAID to increase the volume's ability to tolerate failures; this feature is often applied only to metadata, so that even a RAID failure does not destroy the entire file system. Moreover, if storage is attached to multiple servers, these redundant paths can provide continued access to storage even when some servers, or their network or storage links, have failed. Figure 2.2 shows an example of such a deployment, with clients running GPFS and VSD software and servers providing access to RAIDs via VSD.
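The redundant-path idea can be sketched in a few lines of Python. This toy model assumes each storage array lists the servers attached to it in order of preference, and a client routes each request to the first server that has not failed. The names `StorageArray`, `Cluster`, and `route` are hypothetical, not part of the GPFS or VSD interfaces. Failure detection, which a real system must also handle, is left out: failed servers are simply recorded in a set.

```python
from dataclasses import dataclass, field


@dataclass
class StorageArray:
    """A RAID array reachable through one or more I/O servers (toy model)."""
    name: str
    servers: list[str]  # attached servers, in order of preference


@dataclass
class Cluster:
    # Servers that are down, or whose network or storage link is down.
    failed: set[str] = field(default_factory=set)

    def route(self, array: StorageArray) -> str:
        """Return the first attached server that is still usable."""
        for server in array.servers:
            if server not in self.failed:
                return server
        raise RuntimeError(f"no path to {array.name}: all attached servers failed")


raid0 = StorageArray("raid0", ["ios0", "ios1"])  # attached to two servers
cluster = Cluster()
print(cluster.route(raid0))  # ios0
cluster.failed.add("ios0")   # primary server fails...
print(cluster.route(raid0))  # ios1  (...the redundant path keeps raid0 reachable)
```

Attaching each array to at least two servers is what makes the fallback possible. An array with a single attached server becomes unreachable as soon as that server fails.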

This diagram also shows the token management server, an important component in the GPFS system. GPFS uses a lock-based approach to coordinate access between clients, and the token management server is responsible for issuing and reclaiming these locks. Locks control access both to blocks of file data and to portions of directories, which allows GPFS to support the complete POSIX I/O standard. To maintain consistency, clients obtain locks before reading or writing blocks, and likewise obtain locks on portions of directories before reading the directory or creating or deleting files.
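The issue-and-reclaim cycle described above can be modeled as a toy token server in Python. This is a minimal sketch, not GPFS's protocol. It assumes two lock modes, shared read and exclusive write, and it assumes conflicting holders are revoked immediately. A resource is any string, such as a file block or a portion of a directory. `TokenServer`, `Mode`, `acquire`, and `release` are hypothetical names.

```python
from collections import defaultdict
from enum import Enum


class Mode(Enum):
    READ = "read"    # shared: many clients may hold it at once
    WRITE = "write"  # exclusive: only one client may hold it


class TokenServer:
    """Toy token manager that grants per-resource tokens and reclaims conflicts."""

    def __init__(self) -> None:
        # resource -> {client: mode currently held}
        self.holders: dict[str, dict[str, Mode]] = defaultdict(dict)
        # log of (client, resource) pairs whose tokens were reclaimed
        self.revoked: list[tuple[str, str]] = []

    def acquire(self, client: str, resource: str, mode: Mode) -> None:
        current = self.holders[resource]
        for other, held in list(current.items()):  # copy: we may delete entries
            if other == client:
                continue
            # Readers can share; any pairing that involves a writer conflicts.
            if mode is Mode.WRITE or held is Mode.WRITE:
                self._reclaim(other, resource)
        current[client] = mode  # grant (or upgrade) the requester's token

    def release(self, client: str, resource: str) -> None:
        self.holders[resource].pop(client, None)

    def _reclaim(self, client: str, resource: str) -> None:
        # A real holder would first flush any cached dirty data.
        del self.holders[resource][client]
        self.revoked.append((client, resource))


ts = TokenServer()
ts.acquire("C0", "fileA:block0", Mode.READ)
ts.acquire("C1", "fileA:block0", Mode.READ)   # shared with C0, nothing reclaimed
ts.acquire("C2", "fileA:block0", Mode.WRITE)  # reclaims C0's and C1's tokens
print(ts.holders["fileA:block0"])  # {'C2': <Mode.WRITE: 'write'>}
print(ts.revoked)  # [('C0', 'fileA:block0'), ('C1', 'fileA:block0')]
```

Allowing many readers but only one writer per resource is what lets clients read concurrently while still seeing consistent data once someone writes.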