
Best Fit:

This technique performs an exhaustive search over all possible combinations of the data transfer requests in the queue and selects the combination that makes the best use of the remote storage space. This comes at a cost: because the number of combinations grows exponentially with the number of requests, the search has very high complexity and a long running time. When there are thousands of requests in the queue, the technique performs very poorly.
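The cost of Best Fit can be seen in a minimal sketch, assuming each request is described only by its size in megabytes and that "best" means the largest total size that still fits in the available space. The function name `best_fit` is hypothetical and is not taken from Stork.

```python
from itertools import combinations


def best_fit(request_sizes, available_space):
    """Exhaustively examine every subset of the queued requests (2^n of them)
    and return (indices, total) for the subset with the largest total size
    that does not exceed available_space. Sizes are assumed to be in MB."""
    n = len(request_sizes)
    best_subset, best_total = (), 0
    for k in range(n + 1):                      # subset size 0..n
        for subset in combinations(range(n), k):
            total = sum(request_sizes[i] for i in subset)
            # Keep the first subset found with the largest fitting total.
            if best_total < total <= available_space:
                best_subset, best_total = subset, total
    return best_subset, best_total


print(best_fit([700, 900, 500, 800], 2000))  # ((0, 2, 3), 2000)
```

With 4 requests the loop visits only 16 subsets, but with 60 requests it would visit 2^60 (more than 10^18) subsets, which illustrates why the technique does not scale to long queues.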

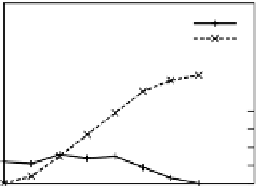

Using a simple experimental setting, we demonstrate how the built-in storage management capability of the data placement scheduler can improve both the overall performance and the reliability of the system. In this experiment, we want to process 40 gigabytes of data consisting of 60 files, each between 500 megabytes and 1 gigabyte in size. First, the files must be transferred from the remote storage site to a staging site near the compute pool where the processing will be done. Each file is used as input to a separate process, so the 60 transfers are followed by 60 computational jobs. The staging site has only 10 gigabytes of storage capacity, which limits the number of files that can be transferred and processed at any one time.

A traditional scheduler would simply start all 60 transfers concurrently, since it is not aware of the storage space limitations at the destination. After a while, each file would have around 150 megabytes transferred to the destination. At that point, the storage space at the destination would fill up and all of the file transfers would fail, followed by the failure of all the computational jobs that depend on these files.
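The point of failure can be estimated with a short calculation, assuming all 60 transfers start together and progress at the same rate, and treating 10 gigabytes as 10,000 megabytes. These are simplifying assumptions; real transfers would not advance in lockstep, so the result is only of the same order as the figure described above.

```python
CAPACITY_MB = 10_000   # staging site capacity (10 GB, decimal units assumed)
NUM_FILES = 60         # all transfers started at once by the traditional scheduler
MIN_FILE_MB = 500      # smallest input file in the experiment

# With equal progress, the disk fills when each transfer holds capacity / n.
per_file_mb = CAPACITY_MB / NUM_FILES
print(f"Each transfer holds {per_file_mb:.1f} MB when the disk fills")
# No file can finish before the disk fills if even the smallest file is larger.
print("Any file finished?", per_file_mb >= MIN_FILE_MB)
```

This prints roughly 166.7 MB per file and `False`: every transfer is only partly done when space runs out, so none of the inputs is usable.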

Stork, on the other hand, completes all transfers successfully by actively managing the storage space at the staging site. It never commits more than the available storage space, and as soon as the processing of a file is completed, the file is removed from the staging area to make room for new transfers. The left side of Figure 4.2 shows the number of concurrently running transfer jobs and the amount of storage space committed at the staging area over the course of the experiment.
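The storage-aware policy described above can be illustrated with a small discrete-event simulation. This is a sketch under stated assumptions, not Stork's implementation: the name `simulate_storage_aware`, the first-in-first-out admission order, the fixed per-transfer rate, and the fixed processing time are all hypothetical choices made for illustration. Space for a file is committed when its transfer starts and released when its processing job finishes.

```python
import heapq


def simulate_storage_aware(file_sizes_mb, capacity_mb, rate_mb_per_min, process_min):
    """Hypothetical storage-aware scheduler: a transfer starts only if the
    whole file fits in uncommitted space (FIFO order); the file is deleted and
    its space released when processing ends.
    Returns (makespan_minutes, peak_committed_mb, files_processed)."""
    if any(size > capacity_mb for size in file_sizes_mb):
        raise ValueError("a file larger than the staging area can never be admitted")
    pending = list(file_sizes_mb)   # FIFO queue of files still to be staged
    committed = peak = 0.0
    now = 0.0
    processed = 0
    events = []                     # min-heap of (time, seq, kind, size)
    seq = 0                         # tie-breaker so the heap never compares strings

    def admit():
        nonlocal committed, peak, seq
        # Start queued transfers while the file at the head of the queue fits.
        while pending and committed + pending[0] <= capacity_mb:
            size = pending.pop(0)
            committed += size
            peak = max(peak, committed)
            heapq.heappush(events, (now + size / rate_mb_per_min, seq, "transferred", size))
            seq += 1

    admit()
    while events:
        now, _, kind, size = heapq.heappop(events)
        if kind == "transferred":
            # Input is staged; run the computational job that consumes it.
            heapq.heappush(events, (now + process_min, seq, "processed", size))
            seq += 1
        else:
            # Processing done: remove the file and free its committed space.
            committed -= size
            processed += 1
            admit()
    return now, peak, processed


# Hypothetical workload: 60 files between 500 and 1000 MB, 10 GB staging area.
sizes = [500 + 10 * (i % 51) for i in range(60)]
print(simulate_storage_aware(sizes, 10_000, 100, 5))
```

Because admission checks the full file size against uncommitted space before a transfer starts, the committed space can never exceed the capacity, and every file is eventually processed. Strict FIFO admission can leave space idle when a large file blocks smaller ones behind it; a different selection rule, such as Best Fit, would trade that idle space for extra search cost.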

Figure 4.2 Storage space management: Stork versus traditional scheduler. Left panel: Stork; right panel: Traditional Scheduler, n = 10. Each panel plots running jobs, completed jobs, and storage committed against time (minutes).