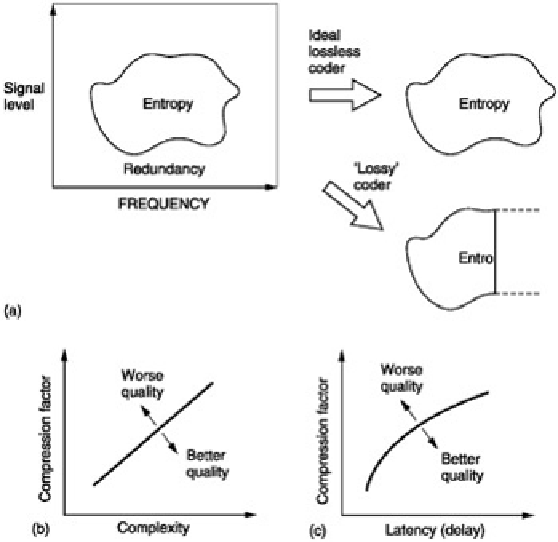

Figure 1.5: (a) A perfect coder removes only the redundancy from the input signal and results in subjectively lossless coding. If the remaining entropy is beyond the capacity of the channel some of it must be lost and the codec will then be lossy. An imperfect coder will also be lossy as it fails to keep all entropy. (b) As the compression factor rises, the complexity must also rise to maintain quality. (c) High compression factors also tend to increase latency or delay through the system.

Entropy can be thought of as a measure of the actual area occupied by the signal. This is the area that must be transmitted if there are to be no subjective differences or artifacts in the received signal. The remaining area is called the redundancy because it adds nothing to the information conveyed. Thus an ideal coder could be imagined which miraculously sorts out the entropy from the redundancy and only sends the former. An ideal decoder would then re-create the original impression of the information quite perfectly. As the ideal is approached, the coder complexity and the latency or delay both rise. Figure 1.5(b) shows how complexity increases with compression factor. The additional complexity of MPEG-4 over MPEG-2 is obvious from this. Figure 1.5(c) shows how increasing the codec latency can improve the compression factor.
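As a rough numerical analogue of the split between entropy and redundancy, the sketch below computes the Shannon entropy of a sequence of symbols and treats whatever is left of a fixed-length representation as redundancy. It assumes a memoryless discrete source, which is a considerable simplification: the entropy discussed in the text is a perceptual quantity for audio and video, not a simple symbol count. The function names and the sample message are hypothetical.

```python
import math
from collections import Counter


def entropy_bits_per_symbol(symbols):
    """Shannon entropy of the observed symbol frequencies, in bits per symbol."""
    counts = Counter(symbols)
    total = len(symbols)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def redundancy_bits_per_symbol(symbols, bits_per_symbol):
    """Bits per symbol spent by a fixed-length code beyond the entropy."""
    return bits_per_symbol - entropy_bits_per_symbol(symbols)


# Hypothetical message stored with 8 bits per character.
message = "aaaaaaaabbbbccdd"
h = entropy_bits_per_symbol(message)
r = redundancy_bits_per_symbol(message, bits_per_symbol=8)
print(f"entropy    ~ {h:.2f} bits/symbol")
print(f"redundancy ~ {r:.2f} bits/symbol")
```

Under this simplified model an ideal coder would need to send only about `h` bits per symbol; the other `r` bits add nothing to the information conveyed.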

Obviously we would have to provide a channel which could accept whatever entropy the coder extracts in order to have transparent quality. As a result moderate coding gains which only remove redundancy need not cause artifacts and result in systems which are described as subjectively lossless. If the channel capacity is not sufficient for that, then the coder will have to discard some of the entropy and with it useful information. Larger coding gains which remove some of the entropy must result in artifacts. It will also be seen from Figure 1.5 that an imperfect coder will fail to separate the redundancy and may discard entropy instead, resulting in artifacts at a sub-optimal compression factor.
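The relationship between entropy, channel capacity and lossy coding can be put into simple arithmetic. The sketch below assumes a perfect coder and treats the source rate, entropy rate and channel rate as plain numbers in the same units; the function name and the figures used are hypothetical and are not taken from any real system.

```python
def coding_outcome(source_rate, entropy_rate, channel_rate):
    """Summarize what a perfect coder could achieve on a given channel.

    All three rates are in the same units (for example Mbit/s).
    """
    if not (0 < entropy_rate <= source_rate) or channel_rate <= 0:
        raise ValueError("rates must be positive and entropy_rate <= source_rate")
    # Entropy that cannot fit into the channel has to be thrown away.
    discarded = max(0.0, entropy_rate - channel_rate)
    return {
        "required_factor": source_rate / channel_rate,
        "max_lossless_factor": source_rate / entropy_rate,
        "entropy_discarded": discarded,
        "subjectively_lossless": discarded == 0.0,
    }


# Hypothetical figures: a 100 Mbit/s source carrying 25 Mbit/s of entropy.
easy = coding_outcome(source_rate=100.0, entropy_rate=25.0, channel_rate=50.0)
hard = coding_outcome(source_rate=100.0, entropy_rate=25.0, channel_rate=20.0)
print(easy)
print(hard)
```

In the first case the channel accepts all of the entropy, so only redundancy is removed. In the second the required compression factor exceeds what redundancy removal alone allows, so some entropy must be discarded. An imperfect coder would do worse than either result.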

A single variable-rate transmission or recording channel is traditionally unpopular with channel providers, although newer systems such as ATM support variable rate. Digital transmitters used in DVB have a fixed bit rate. The variable rate requirement can be overcome by combining several compressed channels into one constant rate transmission in a way which flexibly allocates data rate between the channels. Provided the material is unrelated, the probability of all channels reaching peak entropy at once is very small and so those channels which are at one instant passing easy material will make available transmission capacity for those channels which are handling difficult material. This is the principle of statistical multiplexing.
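The allocation idea behind statistical multiplexing can be sketched in a few lines. The example below assumes a simple proportional-share rule, which is only one of many possible policies and is not claimed to be the one used in any real multiplexer; the function names and demand figures are hypothetical.

```python
def allocate_rate(demands, total_rate):
    """Share a constant total rate between channels in proportion to demand."""
    total_demand = sum(demands)
    if total_demand == 0:
        # Nothing is asking for capacity; split the rate equally.
        return [total_rate / len(demands)] * len(demands)
    return [total_rate * d / total_demand for d in demands]


def unmet_demand(demands, allocation):
    """Total rate that the channels wanted but did not receive."""
    return sum(max(0.0, d - a) for d, a in zip(demands, allocation))


# Hypothetical instantaneous demands (Mbit/s) of four unrelated channels.
demands = [2.0, 9.0, 3.0, 4.0]
total_rate = 20.0

fixed = [total_rate / len(demands)] * len(demands)  # 5 Mbit/s each
shared = allocate_rate(demands, total_rate)

print("fixed split unmet demand: ", unmet_demand(demands, fixed))
print("shared split unmet demand:", unmet_demand(demands, shared))
```

With a fixed split the channel handling difficult material falls short even though the multiplex as a whole has spare capacity. With the shared split the easy channels give up capacity they do not need and no demand goes unmet at this instant.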

Where the same type of source material is used consistently, e.g. English text, then it is possible to perform a statistical analysis on the frequency with which particular letters are used. Variable-length coding is used in which frequently used letters are allocated short codes and letters which occur infrequently are allocated long codes. This results in a lossless code. The well-known Morse code used for telegraphy is an example of this approach. The letter e is the most frequent in English and is sent with a single dot. An infrequent letter such as z is allocated a long complex pattern. It should be clear that codes of this kind which rely on prior knowledge of the statistics of the signal are only effective with signals actually having those statistics. If Morse code is used with another language, the transmission becomes significantly less efficient because the statistics are quite different; the letter z, for example, is quite common in Czech.
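The effect of matching or mismatching the statistics can be illustrated with a small part of the Morse alphabet. The sketch below counts the mean number of dots and dashes per letter for a piece of text. It ignores the timing difference between dots and dashes and the gaps between letters, so it is only a rough indication of cost. The function name and the sample strings are hypothetical, and the strings are not real language samples.

```python
# A subset of International Morse code; letters outside it are skipped.
MORSE = {
    "e": ".", "t": "-", "a": ".-", "i": "..", "n": "-.",
    "s": "...", "o": "---", "q": "--.-", "x": "-..-", "z": "--..",
}


def mean_symbols_per_letter(text, code=MORSE):
    """Average count of dots and dashes per encodable letter in text."""
    lengths = [len(code[ch]) for ch in text.lower() if ch in code]
    if not lengths:
        raise ValueError("text contains no letters from the code table")
    return sum(lengths) / len(lengths)


# Hypothetical strings: one rich in frequent English letters, one rich in z.
print(mean_symbols_per_letter("a neat tea set"))
print(mean_symbols_per_letter("zzz oz zax"))
```

Text dominated by letters that were given short codes costs fewer symbols per letter than text dominated by letters such as z, which is the inefficiency described above when the code is applied to a source with different statistics.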

The Huffman code is also one which is designed for use with a data source having known statistics. The probability of the different code values to be transmitted is studied, and the most frequent codes are arranged to be