Hardware Reference

In-Depth Information

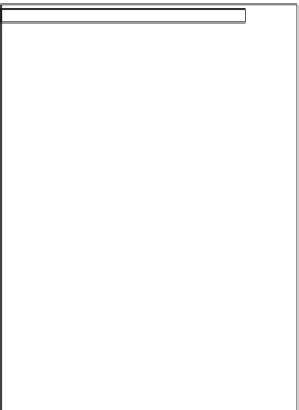

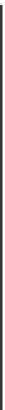

[Figure: bar charts for I, P, and B picture types showing, for each PIPE module, the processing cycles per macroblock (axis: 0 to 1200 cycles/MB), the cycles spent fetching by the 3 PUs, and the ratio of cycles for fetching to overall cycles for 1-MB processing per PU (axis in %).

Enc.: TRF (T, Q, inverse T, inverse Q), FME (fine ME, MC), DEB (de-blocking filter).

Dec.: TRF (inverse T, inverse Q), FME (MC), DEB (de-blocking filter).

T: transform; Q: quantization; ME: motion estimation; MC: motion compensation.]

Fig. 3.90 Evaluation of performance and efficiency in instruction fetching of PIPE acting as modules of the image processing unit in H.264 video processing

The hardware controls the source and destination register pointers over multiple cycles. This architectural concept provides parallelism for vertical data. Figure 3.89b shows a basic SIAD ALU structure. The dataflow passes through mapping logic, multipliers, sigma adders, and barrel shifters in a pipeline. Each data path is similar to the general SIMD structure, but the overall structure differs in how source data are supplied.
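As a rough software analogy of the dataflow described above (multipliers, a sigma adder, and a barrel shifter, with register pointers advanced by the hardware over several cycles), the following sketch applies one filter operation to vertically adjacent rows. It is one possible reading of the text, not the actual PIPE design: the function names, the register-file layout, the final clipping step, and the choice of the H.264 6-tap half-sample luma filter as the workload are all assumptions made for illustration.

```python
# Hypothetical sketch: names and structure are illustrative, not from the source.
H264_HALF_PEL_TAPS = (1, -5, 20, 20, -5, 1)  # H.264 luma half-sample filter taps


def datapath(sources, coeffs, shift):
    """One pass through the modeled pipeline for a single output sample."""
    products = [c * s for c, s in zip(coeffs, sources)]  # multipliers
    total = sum(products)                                # sigma adder
    rounded = (total + (1 << (shift - 1))) >> shift      # shifter with rounding
    return max(0, min(255, rounded))                     # clip to 8 bits (assumed)


def vertical_filter(rows, first_row, num_outputs):
    """Issue one operation; the source pointer steps down one row per cycle."""
    out = []
    src = first_row                       # source register pointer (assumed)
    for _ in range(num_outputs):          # one modeled cycle per output row
        window = rows[src:src + 6]        # six vertically adjacent rows
        # zip(*window) yields, per column, the six vertical samples
        out.append([datapath(col, H264_HALF_PEL_TAPS, 5) for col in zip(*window)])
        src += 1                          # pointer advanced without a new fetch
    return out


if __name__ == "__main__":
    flat = [[100] * 4 for _ in range(8)]
    print(vertical_filter(flat, 0, 3))
```

The point of the analogy is that the loop body is driven by a single operation whose pointers advance automatically, so no new instruction is needed for each row.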

Each PIPE also has a local DMA controller for communication with the other PIPE modules and with the hard-wired modules (e.g., coarse motion estimator, symbol coder). Connecting multiple PIPEs in series to form the macroblock-based pipeline modules provides strong parallel computing performance and scalability for the video codec (as described in Fig. 3.82).
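The macroblock-based pipelining described above can be illustrated with a small scheduling sketch: while one stage works on macroblock n, the next stage works on macroblock n-1. The stage order used here (FME, TRF, DEB) and the function name are assumptions for illustration only; the actual arrangement is the one given in the referenced figure.

```python
# Hypothetical sketch: stage order and names are illustrative assumptions.
def pipeline_schedule(stages, num_macroblocks):
    """Return, per time slot, the macroblock index each stage handles (None if idle)."""
    slots = []
    for t in range(num_macroblocks + len(stages) - 1):
        slot = {}
        for s, name in enumerate(stages):
            mb = t - s  # stage s lags the first stage by s macroblocks
            slot[name] = mb if 0 <= mb < num_macroblocks else None
        slots.append(slot)
    return slots


if __name__ == "__main__":
    for t, slot in enumerate(pipeline_schedule(("FME", "TRF", "DEB"), 4)):
        print(t, slot)
```

Once the pipeline is full, every stage is busy in each slot, which is where the parallelism comes from. Adding a stage lengthens the fill and drain phases by one slot but does not change the steady-state rate of one macroblock per slot.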

3.4.4 Implementation Results

Figure 3.90 shows the performance and instruction-fetching efficiency of PIPEs acting as the TRF, FME, and DEB modules of an image processing unit. As the figure indicates, the average time spent fetching from the shared instruction memory ranges from around 6% (FME processing for H.264 encoding) to 19% (TRF processing for H.264 encoding) of the PU processing cycles. In other words, each PU fetches an instruction only every 5-16 cycles. Summed over the three PUs, this corresponds to 18-58% of the macroblock-processing cycles.
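The relationship between these figures can be checked with a short calculation. This is an idealized sketch that assumes each instruction fetch occupies exactly one cycle, so it only approximates the measured averages quoted above; the function name is hypothetical.

```python
# Idealized check: assumes one fetch occupies exactly one cycle (an assumption).
NUM_PUS = 3  # three PUs share the instruction memory, per the text


def fetch_ratio_percent(cycles_per_fetch):
    """Percentage of a PU's cycles that carry an instruction fetch."""
    return 100.0 / cycles_per_fetch


if __name__ == "__main__":
    for cycles_per_fetch in (16, 5):
        per_pu = fetch_ratio_percent(cycles_per_fetch)
        print(f"one fetch per {cycles_per_fetch} cycles: "
              f"{per_pu:.2f}% per PU, {NUM_PUS * per_pu:.2f}% for {NUM_PUS} PUs")
```

One fetch every 16 cycles gives 6.25% per PU and 18.75% for three PUs, and one fetch every 5 cycles gives 20% per PU and 60% for three PUs, which is close to the quoted ranges of 6-19% and 18-58%.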

Fetching this infrequently helps to achieve lower power consumption than would be the case for a typical RISC processor, which would basically fetch an instruction every cycle. Note that Fig. 3.90 also indicates that the average number of cycles to process a macroblock is
