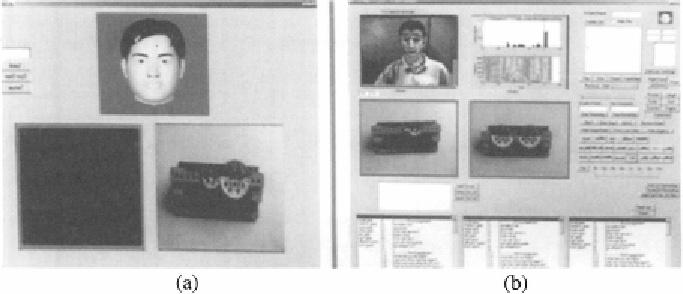

Figure 9.3. (a) The interface for the student. (b) The interface for the instructor.

child. Preliminary results suggest that the synthetic avatar helps the children

be more patient in the learning session.

2.3 Future work

In this proactive HCI environment, the facial motions of the children are very

challenging to analyze compared to those in databases collected under controlled laboratory

conditions. One reason is that real facial motions tend to be very fast on certain

occasions. These fast motions can cause the current face tracker to lose track. In

the future, we need to further improve the speed of the face tracker so that it can

capture fast facial motions in real-life conditions. Another reason is that real

facial expressions observed in real-life environments are more subtle. Therefore,

the performance of facial expression recognition can be affected. We plan to

carry out extensive studies of facial expression classification using face video

data with spontaneous expressions. In this process, we expect to be able to improve

the expression classifier.

Another direction for future improvement is to make the synthetic face avatar

more active so that it can better engage the children in the exploration. For

example, we plan to synthesize head movements and facial expressions in the

context of task states and user states. In this way, the avatar can be more lifelike

and responsive to users' actions in the interaction. One possible direction is

to explore the correlation between speech and head movements, as in the work by Graf et

al. [Graf et al., 2002].

3. Summary

In this chapter, we have described two applications of our 3D face processing

framework. One application is to use face processing techniques to encode face
