

Oh, and of course, if any of these external network services are slow to respond, you'll want to provide partial results to your users, for example, showing your text results alongside a generic map with a question mark in it, instead of showing a totally blank screen until the map server responds or times out.
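The partial-results idea can be sketched with CompletableFuture, which this chapter introduces. This is a minimal sketch under stated assumptions: `fetchMap` is a hypothetical stand-in that simulates a slow map server by sleeping, the placeholder string stands in for the generic map, and `completeOnTimeout` is only available from Java 9 onward (CompletableFuture itself dates from Java 8).

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.TimeUnit;

public class PartialResults {

    // Hypothetical slow map service: sleeps for delayMillis, then returns the "real" map.
    static String fetchMap(long delayMillis) {
        try {
            Thread.sleep(delayMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "detailed map";
    }

    // Starts the map request asynchronously. If it has not finished within timeoutMillis,
    // the future is completed with a generic placeholder instead (Java 9+ API).
    static CompletableFuture<String> mapOrPlaceholder(long delayMillis, long timeoutMillis) {
        return CompletableFuture.supplyAsync(() -> fetchMap(delayMillis))
                .completeOnTimeout("generic map with a question mark",
                        timeoutMillis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) {
        // The text results are shown right away; the map arrives later or is replaced.
        System.out.println("text results");
        System.out.println(mapOrPlaceholder(50, 500).join());   // fast server: detailed map
        System.out.println(mapOrPlaceholder(2000, 200).join()); // slow server: placeholder
    }
}
```

The user never sees a blank screen: the text is rendered immediately, and the map slot is filled with whichever result comes first, the real map or the placeholder.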

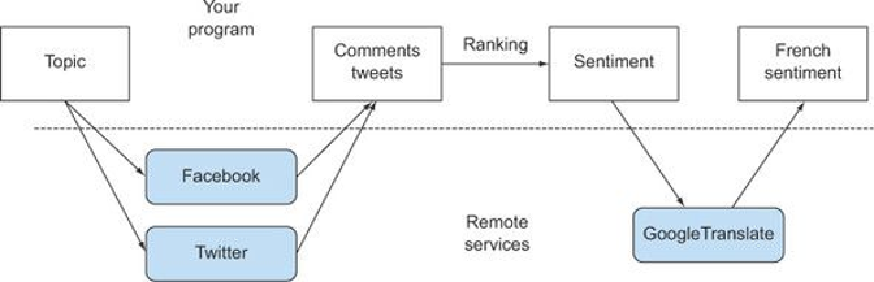

Figure 11.1 illustrates how this typical mash-up application interacts with the remote services it needs to work with.

Figure 11.1. A typical mash-up application

To implement a similar application, you'll have to contact multiple web services across the internet. But you don't want to block your computations and waste billions of precious CPU clock cycles waiting for an answer from these services. For example, you shouldn't have to wait for data from Facebook before you start processing the data coming from Twitter.
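Here is a minimal sketch of not waiting for Facebook before processing Twitter data. The names are hypothetical: `callService` only simulates network latency with `Thread.sleep`, and the latencies (300 ms and 100 ms) are arbitrary. Both requests are started with `CompletableFuture.supplyAsync`, the Twitter result is transformed as soon as it arrives with `thenApply`, and `thenCombine` merges the two once both are ready.

```java
import java.util.concurrent.CompletableFuture;

public class MashUp {

    // Hypothetical stand-in for a remote call: sleeps to simulate network latency.
    static String callService(String name, long latencyMillis) {
        try {
            Thread.sleep(latencyMillis);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return name + " data";
    }

    static String fetchBoth() {
        // Both requests start immediately and run concurrently.
        CompletableFuture<String> facebook =
                CompletableFuture.supplyAsync(() -> callService("Facebook", 300));
        CompletableFuture<String> twitter =
                CompletableFuture.supplyAsync(() -> callService("Twitter", 100));

        // Twitter data is processed as soon as it arrives, without waiting for Facebook.
        CompletableFuture<String> processedTwitter = twitter.thenApply(String::toUpperCase);

        // Merge the two results once both are available.
        return facebook.thenCombine(processedTwitter, (fb, tw) -> fb + " | " + tw).join();
    }

    public static void main(String[] args) {
        long start = System.nanoTime();
        String result = fetchBoth();
        long elapsedMs = (System.nanoTime() - start) / 1_000_000;
        // Elapsed time should be close to the slower call (about 300 ms), not the sum.
        System.out.println(result + " in ~" + elapsedMs + " ms");
    }
}
```

Because the two calls overlap, the total wait is roughly that of the slowest service rather than the sum of both; exact timings will vary by machine.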

This situation represents the other side of the multitask-programming coin. The fork/join framework and parallel streams discussed in chapter 7 are valuable tools for parallelism; they split an operation into multiple suboperations and perform those suboperations in parallel on different cores, CPUs, or even machines.
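For contrast, here is a small sketch of the parallelism side using a parallel stream. Calling `parallel()` lets the stream framework split one operation (summing a range of numbers) into chunks that fork/join worker threads process on different cores. The class and method names are illustrative only.

```java
import java.util.stream.LongStream;

public class ParallelSum {

    // Sums 1..n. parallel() lets the framework split the range into subranges,
    // sum them on fork/join worker threads, and combine the partial sums.
    static long parallelSum(long n) {
        return LongStream.rangeClosed(1, n).parallel().sum();
    }

    public static void main(String[] args) {
        System.out.println(parallelSum(10_000_000L)); // 50000005000000
    }
}
```

Here every thread does useful CPU work on a piece of the same task; nothing is waiting on an external service, which is what distinguishes this case from the mash-up scenario.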

Conversely, when dealing with concurrency instead of parallelism, or when your main goal is to perform several loosely related tasks on the same CPUs, keeping their cores as busy as possible to maximize the throughput of your application, what you really want is to avoid blocking a thread and wasting its computational resources while it waits, potentially for quite a while, for a result from a remote service or a database query. As you'll see in this chapter, the Future interface and particularly its new CompletableFuture implementation are your best tools in such circumstances.
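As a baseline before CompletableFuture, here is a sketch of the plain Future interface. The slow operation is wrapped in a `Callable` and submitted to an `ExecutorService`; the calling thread keeps working and calls `get` only when it needs the result, with a timeout so it can't block forever. `slowQuery` is a hypothetical stand-in for a remote service or database query, and the 200 ms delay and 1-second timeout are arbitrary.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

public class FutureDemo {

    // Hypothetical slow lookup standing in for a remote service or database query.
    static int slowQuery() throws InterruptedException {
        Thread.sleep(200);
        return 42;
    }

    static int run() {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            // submit() returns immediately with a Future for the pending result.
            Callable<Integer> task = FutureDemo::slowQuery;
            Future<Integer> future = executor.submit(task);

            // Meanwhile, the calling thread is free to do other work.
            int local = 0;
            for (int i = 1; i <= 10; i++) {
                local += i;
            }

            // get() blocks only if the result isn't ready yet, and gives up after 1 second.
            return local + future.get(1, TimeUnit.SECONDS);
        } catch (InterruptedException | ExecutionException | TimeoutException e) {
            throw new IllegalStateException("query failed or timed out", e);
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(run()); // 97 (55 from local work + 42 from the query)
    }
}
```

The limitation is that `get` still blocks the caller once it is reached, and there is no built-in way to chain or combine results; those are the gaps CompletableFuture is designed to fill.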

Figure 11.2 illustrates the difference between parallelism and concurrency.
