Database Reference

In-Depth Information

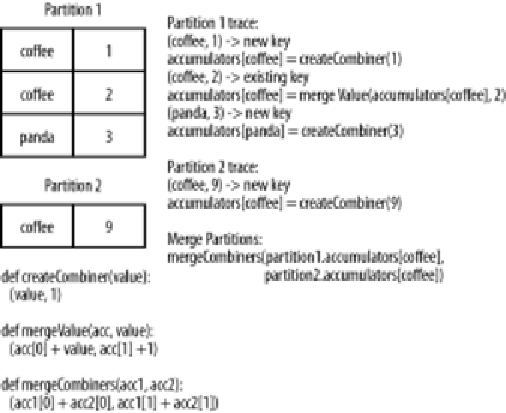

Figure 4-3. combineByKey() sample data flow

There are many options for combining our data by key. Most of them are imple‐

mented on top of

combineByKey()

but provide a simpler interface. In any case, using

one of the specialized aggregation functions in Spark can be much faster than the

naive approach of grouping our data and then reducing it.

Tuning the level of parallelism

So far we have talked about how all of our transformations are distributed, but we

have not really looked at how Spark decides how to split up the work. Every RDD has

a fixed number of

partitions

that determine the degree of parallelism to use when exe‐

cuting operations on the RDD.

When performing aggregations or grouping operations, we can ask Spark to use a

specific number of partitions. Spark will always try to infer a sensible default value

based on the size of your cluster, but in some cases you will want to tune the level of

parallelism for better performance.

Most of the operators discussed in this chapter accept a second parameter giving the

number of partitions to use when creating the grouped or aggregated RDD, as shown

in Examples

4-15

and

4-16

.