Graphics Reference

In-Depth Information

Latent Mixture of

Discriminative Experts

Wisdom of crowds

(listener backchannel)

y

1

y

3

y

n

y

2

h

2

h

3

h

n

h

1

h

1

x

1

Speaker

x

1

Words

x

1

x

2

x

3

x

n

Pitch

Look at listener

Gaze

Time

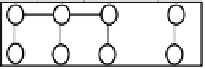

Figure 8.

The approach for modeling wisdom of crowd: (1) multiple listeners experience the

same series of stimuli (pre-recorded speakers) and (2) a Wisdom-LMDE model is learned

using this wisdom of crowds, associating one expert for each listener.

Table 1.

Comparison of the prediction model with previously published approaches. By

integrating the knowledge from multiple listener, the Wisdom-LMDE is able to

identify prototypical patterns in interpersonal dynamic.

Model

Wisdom

of Crowds

Precision

Recall

F1-Score

Wisdom LMDE

Yes

0.2473

0.7349

0.3701

Consensus Classifi er (Huang et al., 2010)

Yes

0.2217

0.3773

0.2793

CRF Mixture of Experts (Smith et al., 2005)

Yes

0.2696

0.4407

0.3345

AL Classifi er (CRF)

No

0.2997

0.2819

0.2906

AL Classifi er (LDCRF) (Morency et al., 2007)

No

0.1619

0.2996

0.2102

Multimodel LMDE (Ozkan et al., 2010)

No

0.2548

0.3752

0.3035

Random Classifi er

No

0.1042

0.1250

0.1018

Rule Based Classifi er (Ward et al., 2000)

No

0.1381

0.2195

0.1457

feedback such as head nod may, at first, look like a conversational

signal (“I acknowledge what you said”), it can also be interpreted

as an emotional signal where the person is trying to show empathy

or a social signal where the person is trying to show dominance by

expressing a strong head nod. The complete study of human face-to-

face communication needs to take into account these different types

of signals: linguistic, conversational, emotional and social. In all four

cases, the individual and interpersonal dynamics are keys to a coherent

interpretation.

Search WWH ::

Custom Search