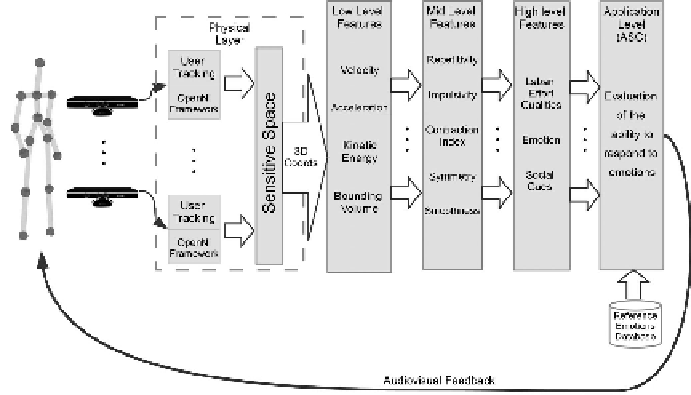

That is, the computed features are used to analyze users' nonverbal behavior, the emotions they express, and their level of social interaction. For this purpose we use the EyesWeb XMI software platform (Camurri et al., 2004b; Camurri et al., 2007) together with video cameras or Kinect sensors. We extract several low-level movement features (e.g., movement energy), which are then used to compute mid- and high-level features (e.g., impulsivity or smoothness) in a multilayered approach, possibly up to labeling emotional states or social attitudes. The choice of these features is motivated by previous work on the analysis of emotion using a minimal set of features (e.g., Camurri et al., 2003; Glowinski et al., 2011).
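As an illustration of what a low-level feature such as movement energy might look like in practice, the following minimal sketch approximates it as the kinetic energy of tracked body joints between two consecutive frames. This is not the EyesWeb XMI implementation: the function names, the unit joint masses, and the data layout (a list of (x, y, z) tuples per frame) are all assumptions made for the example.

```python
from math import dist


def movement_energy(prev_joints, curr_joints, dt, masses=None):
    """Approximate kinetic energy for one frame step.

    prev_joints, curr_joints: lists of (x, y, z) joint positions (assumed layout).
    dt: time between the two frames, in seconds.
    masses: optional per-joint weights; unit mass per joint is assumed if omitted.
    """
    if masses is None:
        masses = [1.0] * len(curr_joints)  # assumption: every joint weighs the same
    energy = 0.0
    for prev, curr, mass in zip(prev_joints, curr_joints, masses):
        speed = dist(prev, curr) / dt  # finite-difference joint speed
        energy += 0.5 * mass * speed ** 2
    return energy


def energy_series(frames, dt):
    """Movement energy for every pair of consecutive frames in a recording."""
    return [movement_energy(a, b, dt) for a, b in zip(frames, frames[1:])]


if __name__ == "__main__":
    # Two joints; only the first one moves, by 1 unit in 0.5 s.
    frames = [
        [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
        [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0)],
    ]
    print(energy_series(frames, dt=0.5))
```

A per-frame series like this is the kind of signal that higher layers could consume, for instance by looking at how sharply it rises when estimating something like impulsivity.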

An overview of the software architecture is sketched in Figure 2. In the following sections, we describe a subset of the proposed framework's components. The first one is the Physical Layer, which measures the user's physical position in space as well as the configuration of the user's body joints. The Low-Level Features directly describe the physical properties of a movement, such as its speed and amplitude. The Mid-Level Features are described by models and algorithms based on the low-level features; for example, the smoothness of a movement can be computed from its velocity and curvature.
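To make the step from low-level to mid-level features concrete, the sketch below derives speed and curvature from a sampled 2D trajectory using finite differences, then combines them into a single smoothness value. The curvature formula is the standard one for a planar curve; the smoothness index itself (one over one plus the speed-weighted mean curvature) is a hypothetical stand-in chosen for illustration, not the definition used by the authors, and all function names are assumptions.

```python
from math import hypot


def derivative(values, dt):
    """Central finite differences, one-sided at the two ends."""
    n = len(values)
    out = []
    for i in range(n):
        lo, hi = max(i - 1, 0), min(i + 1, n - 1)
        out.append((values[hi] - values[lo]) / ((hi - lo) * dt))
    return out


def speed_and_curvature(xs, ys, dt):
    """Low-level features: per-sample speed and curvature of a 2D trajectory."""
    vx, vy = derivative(xs, dt), derivative(ys, dt)
    ax, ay = derivative(vx, dt), derivative(vy, dt)
    speeds, curvatures = [], []
    for i in range(len(xs)):
        s = hypot(vx[i], vy[i])
        # Planar curvature: |x'y'' - y'x''| / |v|^3, set to 0 when nearly at rest.
        k = abs(vx[i] * ay[i] - vy[i] * ax[i]) / s ** 3 if s > 1e-9 else 0.0
        speeds.append(s)
        curvatures.append(k)
    return speeds, curvatures


def smoothness_index(xs, ys, dt):
    """Mid-level feature (hypothetical definition): 1 / (1 + mean curvature),
    where the mean is weighted by speed, i.e. taken per unit of path length."""
    speeds, curvatures = speed_and_curvature(xs, ys, dt)
    total_speed = sum(speeds)
    if total_speed == 0.0:
        return 1.0  # no movement: treated as maximally smooth by convention
    mean_k = sum(k * s for k, s in zip(curvatures, speeds)) / total_speed
    return 1.0 / (1.0 + mean_k)


if __name__ == "__main__":
    line_x = [float(i) for i in range(5)]
    line_y = [0.0] * 5
    print(smoothness_index(line_x, line_y, dt=1.0))
```

Under this definition a straight path scores 1, and paths that turn more per unit of distance travelled score lower. Any real system would likely filter the trajectory first, since finite differences amplify sensor noise.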

3.1.1 Physical layer

For the purpose of the expressive gesture quality analysis described in this case study, we use one or more Kinect sensors. Kinect is a motion-sensing input device by Microsoft, originally conceived for the Xbox.

Figure 2. Framework for multi-user nonverbal expressive gesture analysis.
