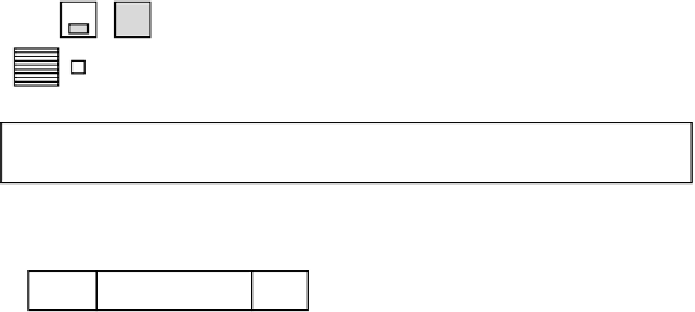

Node 0

Node 1

Node 255

CPU Memory

CPU Memory

CPU Memory

Directory

…

Local bus

Local bus

Local bus

Interconnection network

(a)

2

18

-1

Bits

8

18

6

Node

Block

Offset

(b)

4

0

3

0

2

1

82

1

0

0

0

(c)

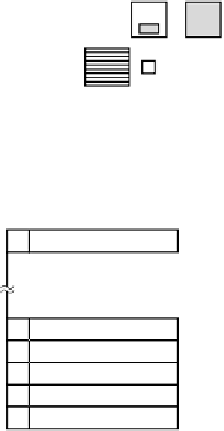

Figure 8-33.

(a) A 256-node directory-based multiprocessor. (b) Division of a

32-bit memory address into fields. (c) The directory at node 36.
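The address split in panel (b) amounts to a few shifts and masks. The following is a minimal Python sketch, assuming the 8/18/6 layout shown (node number in the high 8 bits, then an 18-bit block number, then a 6-bit byte offset within a 64-byte line). The function name `split_address` is a hypothetical illustration.

```python
NODE_BITS = 8     # 256 nodes
BLOCK_BITS = 18   # 2**18 cache lines per node
OFFSET_BITS = 6   # 64-byte cache lines

def split_address(addr: int) -> tuple[int, int, int]:
    """Split a 32-bit address into (node, block, offset) per the assumed 8/18/6 layout."""
    if not 0 <= addr < 1 << 32:
        raise ValueError("address must fit in 32 bits")
    offset = addr & ((1 << OFFSET_BITS) - 1)                   # low 6 bits
    block = (addr >> OFFSET_BITS) & ((1 << BLOCK_BITS) - 1)    # next 18 bits
    node = addr >> (OFFSET_BITS + BLOCK_BITS)                  # top 8 bits
    return node, block, offset

# Byte 5 of line 2, whose home is node 36
addr = (36 << 24) | (2 << 6) | 5
print(hex(addr), split_address(addr))  # 0x24000085 (36, 2, 5)
```

Because 8 + 18 + 6 = 32, the three fields exactly cover the address, so the home node of any word can be found without consulting any table.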

send a message to node 82 instructing it to pass the line to node 20 and invalidate its cache. Note that even a so-called "shared-memory multiprocessor" has a lot of message passing going on under the hood.
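The step in which the home directory tells the current holder to pass the line to the requester and drop its own copy can be modeled in a few lines. This is a toy Python sketch, assuming each directory entry is a present bit plus an 8-bit node number as in panel (c), and that "messages" are simply appended to a list instead of crossing a real network. The classes `Entry` and `HomeDirectory` and the message strings are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Entry:
    present: bool = False  # 1-bit flag: is the line cached anywhere?
    node: int = 0          # 8-bit number of the single node holding it

@dataclass
class HomeDirectory:
    home: int
    num_lines: int = 1 << 18
    entries: dict[int, Entry] = field(default_factory=dict)  # missing key => not cached
    messages: list[str] = field(default_factory=list)         # stand-in for the network

    def request(self, requester: int, block: int) -> str:
        """Handle a request for line `block` homed here; return the data source."""
        if not 0 <= block < self.num_lines:
            raise IndexError("block out of range")
        entry = self.entries.setdefault(block, Entry())
        if entry.present and entry.node == requester:
            return "already cached"
        if entry.present:
            # Only one copy may exist: the holder forwards the line and invalidates it.
            self.messages.append(
                f"{self.home}->{entry.node}: pass line {block} to {requester}, invalidate")
            source = f"node {entry.node}"
        else:
            self.messages.append(f"{self.home}->{requester}: line {block} from memory")
            source = "memory"
        entry.present, entry.node = True, requester  # requester is now the sole holder
        return source

d = HomeDirectory(home=36)
d.entries[2] = Entry(present=True, node=82)   # panel (c): line 2 cached at node 82
print(d.request(requester=20, block=2))        # node 82
print(d.messages[-1])                          # 36->82: pass line 2 to 20, invalidate
print(d.entries[2])                            # Entry(present=True, node=20)
```

Keeping a single node number per entry is what makes the entry only 9 bits wide, and it is also why every new reader must evict the previous holder.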

As a quick aside, let us calculate how much memory is being taken up by the directories. Each node has 16 MB of RAM and $2^{18}$ 9-bit entries to keep track of that RAM. Thus the directory overhead is about $9 \times 2^{18}$ bits divided by 16 MB ($2^{27}$ bits), or about 1.76 percent, which is generally acceptable (although the directory has to be high-speed memory, which increases its cost). Even with 32-byte cache lines the overhead would only be about 3.5 percent. With 128-byte cache lines, it would be under 1 percent.
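The overhead figures follow from one division: entries times entry width over memory size in bits. A short Python check, assuming 16 MB per node and 9-bit entries (a present bit plus an 8-bit node number, as in panel (c) of Figure 8-33); the helper `directory_overhead` is hypothetical.

```python
MEMORY_BYTES = 16 * 2**20   # 16 MB of RAM per node
ENTRY_BITS = 1 + 8          # present bit + 8-bit node number

def directory_overhead(line_bytes: int) -> float:
    """Directory size as a fraction of the memory it tracks."""
    entries = MEMORY_BYTES // line_bytes        # one entry per cache line
    return entries * ENTRY_BITS / (MEMORY_BYTES * 8)

for line in (32, 64, 128):
    print(f"{line:3d}-byte lines: {100 * directory_overhead(line):.2f}%")
# 32-byte lines: 3.52%
# 64-byte lines: 1.76%
# 128-byte lines: 0.88%
```

The entry width depends only on the number of nodes, not on the line size, so the overhead halves each time the line size doubles.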

An obvious limitation of this design is that a line can be cached at only one node. To allow lines to be cached at multiple nodes, we would need some way of locating all of them, for example, to invalidate or update them on a write. Various options are possible to allow caching at several nodes at the same time.

One possibility is to give each directory entry $k$ fields for specifying other nodes, thus allowing each line to be cached at up to $k$ nodes. A second possibility is to replace the node number in our simple design with a bit map, with one bit per node. In this option there is no limit on how many copies there can be, but there is a substantial increase in overhead. Having a directory with 256 bits for each