
Memory word: 1111000010101110

Codeword, bit positions 1 to 21 from left to right: 001011100000101101110

Parity bits occupy positions 1, 2, 4, 8, and 16.

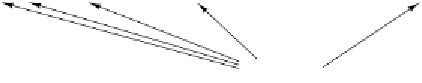

Figure 2-15. Construction of the Hamming code for the memory word 1111000010101110 by adding 5 check bits to the 16 data bits.
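The construction in the figure can be reproduced with a short sketch. It assumes even parity and the usual convention that check bits sit at the power-of-two positions, both of which are consistent with the bit values shown in the figure; the function name `hamming_encode` is hypothetical and chosen only for illustration.

```python
def hamming_encode(data_bits: str) -> str:
    """Return an even-parity Hamming codeword for a string of '0'/'1' data bits."""
    m = len(data_bits)

    # Smallest number of check bits r such that 2**r >= m + r + 1.
    r = 0
    while (1 << r) < m + r + 1:
        r += 1

    n = m + r
    code = [0] * (n + 1)  # index 0 is unused; bit positions run from 1 to n

    # Data bits fill every position that is not a power of two.
    data = iter(int(b) for b in data_bits)
    for pos in range(1, n + 1):
        if pos & (pos - 1) != 0:
            code[pos] = next(data)

    # Check bit p covers every position whose binary form contains p.
    for i in range(r):
        p = 1 << i
        covered = (code[pos] for pos in range(1, n + 1) if pos & p and pos != p)
        code[p] = sum(covered) % 2  # even parity

    return "".join(str(b) for b in code[1:])


print(hamming_encode("1111000010101110"))  # 001011100000101101110
```

For the 16-bit word in the figure this yields a 21-bit codeword whose check bits at positions 1, 2, 4, 8, and 16 are 0, 0, 0, 0, and 1.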

Historically, CPUs have always been faster than memories. As memories have improved, so have CPUs, preserving the imbalance. In fact, as it becomes possible to put more and more circuits on a chip, CPU designers are using these new facilities for pipelining and superscalar operation, making CPUs go even faster. Memory designers have usually used new technology to increase the capacity of their chips, not the speed, so the problem appears to be getting worse over time. What this imbalance means in practice is that after the CPU issues a memory request, it will not get the word it needs for many CPU cycles. The slower the memory, the more cycles the CPU will have to wait.

As we pointed out above, there are two ways to deal with this problem. The simplest way is to start memory READs as soon as they are encountered, continue executing, and stall the CPU only if an instruction tries to use the memory word before it has arrived. The slower the memory, the greater the penalty when such a stall occurs. For example, if one instruction in five touches memory and the memory access time is five cycles, execution time will be twice what it would have been with instantaneous memory. But if the memory access time is 50 cycles, execution time goes up by a factor of 11 (5 cycles for executing instructions plus 50 cycles waiting for memory, compared with 5 cycles alone).
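The arithmetic behind these two figures can be checked with a minimal sketch. It assumes the simple model implied by the example: each instruction takes one cycle, and every memory access stalls the CPU for the full access time with no overlap. The function name and parameters are hypothetical.

```python
def stall_slowdown(instructions_per_access: int, access_cycles: int) -> float:
    """Execution time relative to instantaneous memory, under the simple model.

    Assumes one cycle per instruction and a full stall of `access_cycles`
    for each memory access, with one access every `instructions_per_access`
    instructions.
    """
    ideal_cycles = instructions_per_access                   # no waiting at all
    actual_cycles = instructions_per_access + access_cycles  # executing plus waiting
    return actual_cycles / ideal_cycles


print(stall_slowdown(5, 5))   # 2.0
print(stall_slowdown(5, 50))  # 11.0
```

Real pipelines overlap some of the waiting with useful work, so this model gives an upper bound on the slowdown rather than a prediction.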

The other solution is to have machines that do not stall but instead require the compilers not to generate code to use words before they have arrived. The trouble is that this approach is far easier said than done. Often after a LOAD there is nothing else to do, so the compiler is forced to insert NOP (no operation) instructions, which do nothing but occupy a slot and waste time. In effect, this approach is a software stall instead of a hardware stall, but the performance degradation is the same.
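As a rough illustration of the software stall, the sketch below pads a straight-line instruction sequence with NOPs so that no instruction reads a register before the LOAD that fills it has completed. The instruction format `(opcode, destination, sources)`, the `load_delay` parameter, and the function name are all hypothetical; a real compiler would also try to reorder independent instructions into the delay slots, which this sketch does not attempt.

```python
def insert_nops(program, load_delay):
    """Pad `program` with NOPs so loaded values are never used too early.

    program    : list of (opcode, dest_register, source_registers) tuples
    load_delay : number of slots after a LOAD before its result is usable
    """
    out = []
    ready_at = {}  # register -> first slot index at which its value may be read

    for op, dest, srcs in program:
        # Earliest slot at which every source operand is available.
        earliest = max((ready_at.get(s, 0) for s in srcs), default=0)
        while len(out) < earliest:
            out.append(("NOP", None, ()))  # nothing useful to do: waste a slot
        out.append((op, dest, srcs))
        if dest is not None:
            delay = load_delay if op == "LOAD" else 0
            ready_at[dest] = len(out) + delay

    return out


program = [
    ("LOAD", "r1", ()),           # r1 <- memory
    ("ADD", "r2", ("r1", "r3")),  # needs r1 immediately
]
print([op for op, _, _ in insert_nops(program, load_delay=2)])
# ['LOAD', 'NOP', 'NOP', 'ADD']
```

With a two-slot load delay and nothing independent to schedule, the two NOPs cost exactly as many cycles as a hardware stall would have.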

Actually, the problem is not technology, but economics. Engineers know how to build memories that are as fast as CPUs, but to run them at full speed, they have to be located on the CPU chip (because going over the bus to memory is very slow). Putting a large memory on the CPU chip makes it bigger, which makes it more expensive, and even if cost were not an issue, there are limits to how big a