Graphics Reference

In-Depth Information

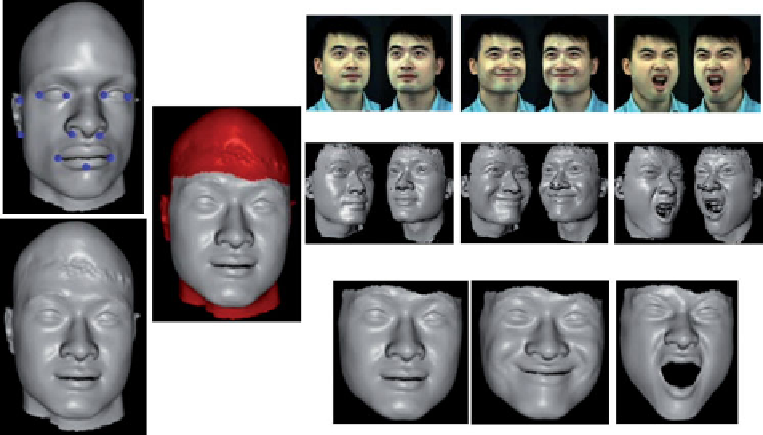

(a)

(d)

(e)

(c)

(b)

(f )

Figure 1.16

Illustration of the template fitting process. (a) A face template with manual landmarks. (b)

Obtained mesh after fitting the warped template to the first two depth maps given in (e). (c) Facial region

limitation (red colored regions present unreliable depth or optical flow estimation). (d) A sequence of

texture image pairs. (e) A sequence of depth map pairs. (f) Selected meshes after tracking the initial

mesh through the whole sequence, using both depth maps and optical flows. The process is marker-less

and automatic [from http://grail.cs.washington.edu/projects/stfaces/]

vertices motion across the facial sequence and is used to enhance template tracking by estab-

lishing inter-frame correspondences with video data. Then, they measure the consistency of

the optical flow and the vertex inter-frame motion by minimizing the defined metric. Similar

ideas were presented in Weise et al. (2009) where a person-specific facial expression model is

constructed from the tracked sequences after non-rigid fitting and tracking. The authors tar-

geted real-time puppetry animation by transferring the conveyed expressions (of an actor) to

new persons. In Weise et al. (2011) the authors deal with two challenges of performance-driven

facial animation; accurately track the rigid and non-rigid motion of the user's face, and map

the extracted tracking parameters to suitable animation controls that drive the virtual character.

The approach combines these two problems into a single optimization that estimates the most

likely parameters of a user- specific expression model given the observed 2D and 3D data.

They derive a suitable probabilistic prior for this optimization from pre-recorded animation

sequences that define the space of realistic facial expressions.

In Sun and Yin (2008), the authors propose to adapt and track a generic model to each

frame of 3D model sequences for dynamic 3D expression recognition. They establish the

vertex flow estimation as follows: First, they establish correspondences between 3D meshes

using a set of 83 pre-defined key points. This adaptation process is performed to establish

a matching between the generic model (or the source model) and the real face scan (or the

target model). Second, once the generic model is adapted to the real face model, it will be

considered as an intermediate tracking model for finding vertex correspondences. The vertex