Graphics Reference

In-Depth Information

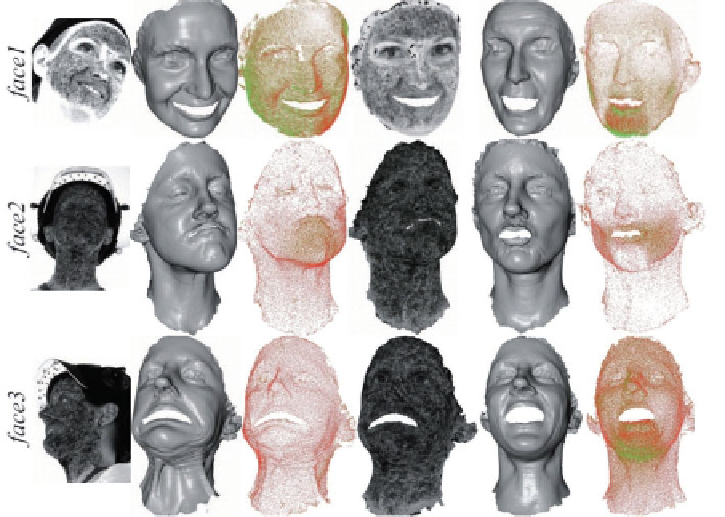

Figure 1.8

The results of motion capture approach, proposed by Furukawa and Ponce (2009), form

multiple synchronized video streams based on regularization adapted to nonrigid tangential deformation.

From left to right, a sample input image, reconstructed mesh model, estimated notion and a texture

mapped model for one frame with interesting structure/motion for each dataset 1, 2, and 3. The right two

columns show the results in another interesting frame. Copyright

C

2009, IEEE

adjacent rectified viewpoints. Observing that the difference in projection between the views

causes distortions of the comparison windows, the most prominent distortions of this kind

are compensated by a

scaled-window matching

technique. The resulting depth images are

converted to 3D points and fused into a single dense point cloud. Then, a triangular mesh

from the initial point cloud is reconstructed through three steps: the original point cloud is

downsampled using

hierarchical vertex clustering

(Schaefer and Warren, 2003). Outliers and

small-scale high frequency noise are removed on the basis of the Plane Fit Criterion proposed

by Weyrich et al. (2004) and a point normal filtering inspired by Amenta and Kil (2004),

respectively. A triangle mesh is generated without introducing excessive smoothing using

lower dimensional triangulation

methods Gopi et al. (2000).

At the last stage, in order to consistently track geometry and texture over time, a single

reference mesh from the sequence is chosen. A sequence of compatible meshes without holes is

explicitly computed. Given the initial per-frame reconstructions

G

t

, a set of compatible meshes

M

t

is generated that has the same connectivity as well as explicit vertex correspondence. To

create high quality renderings, per-frame texture maps

T

t

that capture appearance changes,

such as wrinkles and sweating of the face, are required. Starting with a single reference mesh

M

0

, generated by manually cleaning up the first frame

G

0

, dense optical flow on the video

images is computed and used in combination with the initial geometric reconstructions

G

t

to

automatically propagate

M

0

through time. At each time step, a high quality 2D face texture

T

t