X

2

X

2

X

1

X

1

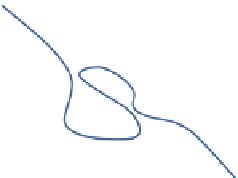

(a)

(b)

Figure 5.22

(a) Linearly separable data samples represented in a plane and separated by a straight line;

(b) non-linearly separable data samples represented in a plane and separated by a curved line

Support Vector Machine (SVM)

SVM is based on the use of functions that can optimally separate data. For two classes whose data are linearly separable, there exists an infinite number of hyperplanes that separate the observations. The goal of SVM is to find the optimal hyperplane: the one that maximizes the distance (the margin) between the two classes and lies midway between their nearest points. These nearest points, which alone determine the hyperplane, are called support vectors. SVM models are either linear or nonlinear. Linear SVMs are the simplest, because they apply when the data can be separated linearly, whereas nonlinear SVMs are used for data that are not linearly separable. In the latter case, the data are transformed into a higher-dimensional space in which they become linearly separable.
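The notions of margin and support vectors can be illustrated with a minimal Python sketch. It does not train an SVM; it only compares two hand-picked separating lines on a small, made-up 2D data set, measures the distance from each line to its nearest sample, and lists those nearest samples. The data points, the two candidate lines, and the function names are assumptions introduced for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def signed_distances(w: Point, b: float,
                     points: Sequence[Point],
                     labels: Sequence[int]) -> List[float]:
    """Distance of each sample to the line w.x + b = 0.

    The value is positive when the sample lies on the side that
    matches its label (+1 or -1), negative otherwise.
    """
    norm = math.hypot(w[0], w[1])
    return [y * (w[0] * x[0] + w[1] * x[1] + b) / norm
            for x, y in zip(points, labels)]


def margin(w: Point, b: float,
           points: Sequence[Point], labels: Sequence[int]) -> float:
    """Distance from the line to its nearest sample."""
    return min(signed_distances(w, b, points, labels))


def support_vectors(w: Point, b: float,
                    points: Sequence[Point],
                    labels: Sequence[int]) -> List[Point]:
    """Samples that lie at exactly the margin distance from the line."""
    dists = signed_distances(w, b, points, labels)
    m = min(dists)
    return [p for p, d in zip(points, dists)
            if math.isclose(d, m, abs_tol=1e-9)]


# Hypothetical toy data: three samples per class, linearly separable.
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0),   # class -1
          (2.0, 2.0), (3.0, 2.0), (2.0, 3.0)]   # class +1
labels = [-1, -1, -1, 1, 1, 1]

# Two of the infinitely many separating lines (both chosen by hand).
diagonal = ((1.0, 1.0), -2.5)   # x1 + x2 = 2.5
vertical = ((1.0, 0.0), -1.5)   # x1 = 1.5

margin_diagonal = margin(*diagonal, points, labels)   # about 1.06
margin_vertical = margin(*vertical, points, labels)   # 0.5
nearest = support_vectors(*diagonal, points, labels)

print(margin_diagonal, margin_vertical, nearest)
```

Both lines classify every sample correctly, but the diagonal one leaves roughly twice the margin. The three samples returned by `support_vectors` for that line are the only ones that constrain its position, which is the role the text assigns to support vectors.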

The evaluation of classification techniques is a recurrent problem that often depends on the difficult task of measuring generalization performance, that is, the performance on new, previously unseen data. For most real-world problems we can only estimate the generalization performance. To evaluate a given learning algorithm, we usually apply a cross-validation scheme.
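One common cross-validation scheme is k-fold: the samples are split into k folds, and each fold in turn is held out for testing while the model is fitted on the rest. The sketch below assumes that scheme; the text does not say which variant is meant. To stay self-contained it uses a simple nearest-centroid classifier as a stand-in for the learning algorithm (it is not an SVM), and the toy data and all function names are hypothetical.

```python
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float]


def k_fold_indices(n_samples: int, k: int) -> List[Tuple[List[int], List[int]]]:
    """Split indices 0..n_samples-1 into k contiguous (train, test) pairs."""
    if not 2 <= k <= n_samples:
        raise ValueError("k must satisfy 2 <= k <= n_samples")
    # The first (n_samples % k) folds receive one extra sample.
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    splits = []
    start = 0
    for size in sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n_samples)
                     if i < start or i >= start + size]
        splits.append((train_idx, test_idx))
        start += size
    return splits


def fit_centroids(X: Sequence[Point], y: Sequence[int]) -> Dict[int, List[float]]:
    """Stand-in learner: store the mean point of each class."""
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for x, label in zip(X, y):
        acc = sums.setdefault(label, [0.0] * len(x))
        for j, value in enumerate(x):
            acc[j] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc]
            for label, acc in sums.items()}


def predict(centroids: Dict[int, List[float]], x: Point) -> int:
    """Return the label of the closest class centroid."""
    return min(centroids,
               key=lambda label: sum((a - c) ** 2
                                     for a, c in zip(x, centroids[label])))


def cross_val_accuracy(X: Sequence[Point], y: Sequence[int], k: int) -> float:
    """Average held-out accuracy over the k folds."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(X), k):
        model = fit_centroids([X[i] for i in train_idx],
                              [y[i] for i in train_idx])
        correct = sum(predict(model, X[i]) == y[i] for i in test_idx)
        scores.append(correct / len(test_idx))
    return sum(scores) / len(scores)


# Hypothetical toy data, interleaved so every fold contains both classes.
X = [(0.0, 0.0), (2.0, 2.0), (1.0, 0.0), (3.0, 2.0), (0.0, 1.0), (2.0, 3.0)]
y = [-1, 1, -1, 1, -1, 1]

accuracy = cross_val_accuracy(X, y, k=3)
print(accuracy)
```

The folds here are contiguous and unshuffled to keep the sketch short. In practice the samples are usually shuffled (and often stratified by class) before splitting, so that each fold is representative of the whole data set.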

X

2

X

2

X

1

X

1

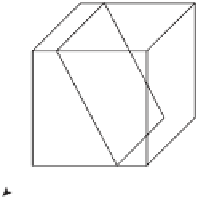

(a)

(b)

Figure 5.23

(a) Non-linearly separable data samples represented in a plane and separated by a curved

line; (b) plan separation after a transformation of of the same data samples into a 3D space
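The idea behind this figure can be sketched in a few lines of Python. The source does not state which transformation is used, so the mapping below, which adds the squared distance to the origin as a third coordinate, is an assumption chosen for illustration, as are the sample points and the separating plane.

```python
from typing import List, Sequence, Tuple

Point2D = Tuple[float, float]
Point3D = Tuple[float, float, float]


def lift(p: Point2D) -> Point3D:
    """Map (x1, x2) to (x1, x2, x1^2 + x2^2)."""
    x1, x2 = p
    return (x1, x2, x1 * x1 + x2 * x2)


def plane_value(w: Sequence[float], b: float, q: Sequence[float]) -> float:
    """Evaluate w.q + b; the sign tells on which side of the plane q lies."""
    return sum(wi * qi for wi, qi in zip(w, q)) + b


# Hypothetical data: one class near the origin, the other surrounding it.
# No straight line in the (x1, x2) plane separates the two classes.
inner: List[Point2D] = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (0.0, -1.0)]
outer: List[Point2D] = [(3.0, 0.0), (0.0, 3.0), (-3.0, 0.0), (0.0, -3.0)]

# After lifting, the horizontal plane x3 = 5 separates the classes.
w, b = (0.0, 0.0, 1.0), -5.0

inner_sides = [plane_value(w, b, lift(p)) for p in inner]  # all negative
outer_sides = [plane_value(w, b, lift(p)) for p in outer]  # all positive

print(inner_sides, outer_sides)
```

In the original plane, the boundary corresponding to this separating plane is the circle x1^2 + x2^2 = 5, that is, a curved line of the kind shown in panel (a).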