
Figure 5.15 (a-c) BU-3DFE database: depth images derived from the 3D face scans of a subject. The highest level of intensity is reported for the expressions anger, disgust, and fear. Copyright © 2011, Springer

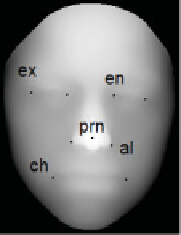

Then the approach starts from the consideration that just a few fiducial points of the face

can be automatically identified with sufficient robustness across different individuals and

ethnicities. This is supported by recent studies as that in Gupta et al. (2010), where methods

are given to automatically identify 10 facial fiducial points on the 3D face scans of the

Texas 3D Face Recognition Database

. Following this idea, a general method to automatically

identify nine keypoints of the face is given. The points are the tip of the nose (

pronasale, prn

),

the two points that define the nose width (

alare, al

), the points at the

inner

and

outer

eyes

(

endocanthion, en

and

exocanthion, ex

, respectively), and the outer mouth points (

cheilion,

ch

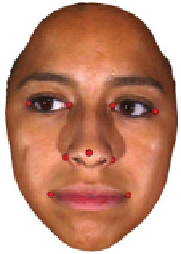

), as evidenced on the 3D face scan and depth image of Figures 5.16

a,b

.
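As a small illustration, the nine landmarks listed above could be held in a simple lookup table. The `_l` / `_r` side suffixes and the helper name `empty_keypoint_record` are naming choices assumed for this sketch; they do not come from the text.

```python
# The nine keypoints named in the text, keyed by anthropometric abbreviation.
# The "_l" / "_r" suffixes (left / right side) are an assumed naming convention.
FACIAL_KEYPOINTS = {
    "prn": "pronasale (tip of the nose)",
    "al_l": "alare, left (nose width)",
    "al_r": "alare, right (nose width)",
    "en_l": "endocanthion, left (inner eye corner)",
    "en_r": "endocanthion, right (inner eye corner)",
    "ex_l": "exocanthion, left (outer eye corner)",
    "ex_r": "exocanthion, right (outer eye corner)",
    "ch_l": "cheilion, left (outer mouth point)",
    "ch_r": "cheilion, right (outer mouth point)",
}


def empty_keypoint_record():
    """Map every keypoint to None, meaning 'position not detected yet'."""
    return {name: None for name in FACIAL_KEYPOINTS}
```

A detector would then fill each entry with an (x, y, z) position as the corresponding landmark is found.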

Different algorithms are used to detect the keypoints. For the nose tip and the two alare points, the proposed solution builds on the methods given in Gupta et al. (2010). In particular, the nose tip is determined in the convex part of the central region of the face as the local maximum of the elliptic Gaussian curvature that is closest to the initial estimate of the nose tip. For the alare points, first the edges of the facial depth images are identified using a Laplacian of Gaussian (LoG)
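The nose-tip criterion described above can be sketched on a depth image as follows. This is a minimal illustration, not the authors' implementation. It assumes that larger depth values are closer to the camera (so convex peaks have negative mean curvature), that curvatures are estimated with finite differences, and that the initial estimate is supplied by the caller. The restriction to the central region of the face is omitted, and all function names are hypothetical.

```python
import numpy as np


def surface_curvatures(depth, spacing=1.0):
    """Gaussian (K) and mean (H) curvature of the surface z = depth[row, col]."""
    fy, fx = np.gradient(depth, spacing)    # derivatives along rows, columns
    fyy, _ = np.gradient(fy, spacing)
    fxy, fxx = np.gradient(fx, spacing)
    g = 1.0 + fx**2 + fy**2
    K = (fxx * fyy - fxy**2) / g**2
    H = ((1 + fy**2) * fxx - 2 * fx * fy * fxy + (1 + fx**2) * fyy) / (2 * g**1.5)
    return K, H


def strict_local_maxima(values):
    """Boolean mask of pixels strictly greater than all 8 neighbours."""
    rows, cols = values.shape
    padded = np.pad(values, 1, mode="constant", constant_values=-np.inf)
    is_max = np.ones(values.shape, dtype=bool)
    for dr in (-1, 0, 1):
        for dc in (-1, 0, 1):
            if (dr, dc) != (0, 0):
                shifted = padded[1 + dr:1 + dr + rows, 1 + dc:1 + dc + cols]
                is_max &= values > shifted
    return is_max


def find_nose_tip(depth, initial_estimate):
    """Return (row, col) of the curvature peak closest to initial_estimate."""
    K, H = surface_curvatures(depth)
    # Elliptic (K > 0) and convex (H < 0 under the assumed depth convention).
    convex_elliptic = (K > 0) & (H < 0)
    candidates = np.argwhere(convex_elliptic & strict_local_maxima(K))
    if len(candidates) == 0:
        raise ValueError("no elliptic convex curvature peak found")
    dist2 = ((candidates - np.asarray(initial_estimate)) ** 2).sum(axis=1)
    row, col = candidates[np.argmin(dist2)]
    return int(row), int(col)
```

On real scans the depth image would normally be smoothed before differentiation, since second derivatives amplify noise; that step is left out here to keep the sketch short.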

Figure 5.16 BU-3DFE database: the 9 facial keypoints that are automatically detected. The points are shown on a textured 3D face scan (a) and on the depth image of that scan (b). Copyright © 2011, Springer
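The LoG edge identification mentioned for the alare points can be illustrated with a generic zero-crossing detector. The sketch below is a textbook LoG filter written with NumPy only; the kernel radius of 3 sigma, the `threshold` parameter, and the function names are assumptions of this example, and it does not reproduce the specific settings of Gupta et al. (2010).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def log_kernel(sigma):
    """Sampled Laplacian-of-Gaussian kernel, shifted to sum to zero."""
    radius = int(np.ceil(3 * sigma))
    ax = np.arange(-radius, radius + 1)
    xx, yy = np.meshgrid(ax, ax)
    r2 = xx**2 + yy**2
    gauss = np.exp(-r2 / (2 * sigma**2)) / (2 * np.pi * sigma**2)
    kernel = (r2 - 2 * sigma**2) / sigma**4 * gauss
    return kernel - kernel.mean()   # flat regions then give (near) zero response


def log_edges(depth, sigma=2.0, threshold=0.0):
    """Mark zero crossings of the LoG response whose jump exceeds threshold."""
    kernel = log_kernel(sigma)
    radius = kernel.shape[0] // 2
    padded = np.pad(depth.astype(float), radius, mode="edge")
    windows = sliding_window_view(padded, kernel.shape)
    # The kernel is symmetric, so correlation equals convolution here.
    response = np.einsum("ijkl,kl->ij", windows, kernel)

    edges = np.zeros(depth.shape, dtype=bool)
    # Sign change between horizontal neighbours (flag the left pixel).
    left, right = response[:, :-1], response[:, 1:]
    edges[:, :-1] |= (left * right < 0) & (np.abs(left - right) > threshold)
    # Sign change between vertical neighbours (flag the upper pixel).
    up, down = response[:-1, :], response[1:, :]
    edges[:-1, :] |= (up * down < 0) & (np.abs(up - down) > threshold)
    return edges
```

A positive `threshold` suppresses zero crossings caused by noise or rounding in nearly flat regions; a suitable value depends on the depth units and on `sigma`.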