
[Figure 4.1 panel labels. (a): inputs p1, p2, p3; weights w1, w2, w3; bias b; Σ; n; F; output Y. (b): inputs a1 to a4; weights w11 to w42 and W11 to W22; units f1, f2 and F1, F2; outputs y1, y2.]

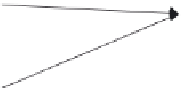

FIGURE 4.1

(a) Perceptron neuron. (b) Multi-layer perceptron network (MLP).
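To make the neuron in Figure 4.1(a) concrete, the sketch below computes its output as Y = F(w1·p1 + w2·p2 + w3·p3 + b). The text does not specify F. The hard-limit transfer function, the function names and the numeric weights used here are assumptions chosen for illustration.

```python
from typing import Callable, Sequence


def hardlim(n: float) -> float:
    """Hard-limit transfer function (an assumed choice of F): 1 if n >= 0, else 0."""
    return 1.0 if n >= 0.0 else 0.0


def perceptron(p: Sequence[float], w: Sequence[float], b: float,
               F: Callable[[float], float] = hardlim) -> float:
    """Single neuron as in Figure 4.1(a): Y = F(sum_i w_i * p_i + b)."""
    if len(p) != len(w):
        raise ValueError("need exactly one weight per input")
    n = sum(wi * pi for wi, pi in zip(w, p)) + b  # net input formed at the Σ node
    return F(n)


# Hypothetical inputs p1..p3, weights w1..w3 and bias b.
print(perceptron([1.0, 0.5, -1.0], [0.4, 0.2, 0.3], b=-0.1))  # prints 1.0
```

Passing a different `F` (for example a sigmoid) turns the same neuron into the differentiable unit used in multi-layer networks such as Figure 4.1(b).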

for neural networks such as the back-propagation [40] and Levenberg-Marquardt [41] methods were developed to compute the weights and biases applied to the inputs.
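The following sketch illustrates back-propagation. It does not implement the Levenberg-Marquardt method, and it is not the authors' training code. It trains a network shaped like Figure 4.1(b), with four inputs, two hidden units and two outputs. Several details are assumptions: sigmoid transfer functions, explicit biases, a squared-error loss, plain gradient descent, the learning rate, a single training example, and the storage layout `W1[j][i]` for the weight from input i to hidden unit j.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    """Logistic transfer function (assumed; the text does not name one)."""
    return 1.0 / (1.0 + math.exp(-x))


def forward(a: List[float], W1: Matrix, b1: List[float],
            W2: Matrix, b2: List[float]) -> Tuple[List[float], List[float]]:
    """Inputs a -> hidden outputs y (f1, f2) -> network outputs out (F1, F2)."""
    y = [sigmoid(sum(W1[j][i] * a[i] for i in range(len(a))) + b1[j])
         for j in range(len(W1))]
    out = [sigmoid(sum(W2[k][j] * y[j] for j in range(len(y))) + b2[k])
           for k in range(len(W2))]
    return y, out


def train_step(a: List[float], target: List[float], W1: Matrix, b1: List[float],
               W2: Matrix, b2: List[float], lr: float = 0.5) -> float:
    """One back-propagation + gradient-descent update on a single example.

    Loss is 0.5 * sum((out - target)**2). Weights are updated in place and
    the loss measured before the update is returned.
    """
    y, out = forward(a, W1, b1, W2, b2)
    loss = 0.5 * sum((o - t) ** 2 for o, t in zip(out, target))
    # Output-layer deltas: (out_k - t_k) * sigmoid'(net_k), with sigmoid' = o(1 - o).
    d2 = [(o - t) * o * (1.0 - o) for o, t in zip(out, target)]
    # Hidden-layer deltas: propagate d2 back through the (not yet updated) W2.
    d1 = [sum(d2[k] * W2[k][j] for k in range(len(W2))) * y[j] * (1.0 - y[j])
          for j in range(len(y))]
    # Gradient-descent updates for the output layer, then the hidden layer.
    for k in range(len(W2)):
        for j in range(len(y)):
            W2[k][j] -= lr * d2[k] * y[j]
        b2[k] -= lr * d2[k]
    for j in range(len(W1)):
        for i in range(len(a)):
            W1[j][i] -= lr * d1[j] * a[i]
        b1[j] -= lr * d1[j]
    return loss


# Hypothetical 4-2-2 network with small random initial weights.
random.seed(0)
W1 = [[random.uniform(-0.5, 0.5) for _ in range(4)] for _ in range(2)]
b1 = [0.0, 0.0]
W2 = [[random.uniform(-0.5, 0.5) for _ in range(2)] for _ in range(2)]
b2 = [0.0, 0.0]

a = [0.2, 0.7, 0.1, 0.9]   # hypothetical inputs a1..a4
target = [0.9, 0.1]        # hypothetical desired outputs
first = train_step(a, target, W1, b1, W2, b2)
for _ in range(500):
    last = train_step(a, target, W1, b1, W2, b2)
print(f"loss: {first:.4f} -> {last:.4f}")
```

Levenberg-Marquardt replaces this fixed-step update with a damped Gauss-Newton step computed from the Jacobian of the errors. On small networks it typically needs fewer iterations, but each iteration requires more memory.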

We adopted neural networks in our research to model the rendering process because they can capture information from complex, non-linear, multi-variate systems without the need to assume an underlying data distribution or mathematical model. In recent years, multi-layer perceptron networks have become increasingly popular owing to their success in real-world applications such as pattern recognition and control.

Dynamic neural networks use memory and, in some architectures, recurrent feedback connections to capture temporal patterns in data. Waibel et al. [42] introduced the distributed time delay neural network (DTDNN) for phoneme recognition. An extension of this structure allows tapped delay line memory not only at the input to the first layer of a static feed-forward network but also throughout the network. For illustration, a two-layer DTDNN is shown in Figure 4.2.
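A two-layer DTDNN can be sketched as a feed-forward pass in which each layer reads its input through a tapped delay line. Layer 1 sees p(t-d) for d = 0..D1, and layer 2 sees the hidden outputs y(t-d) for d = 0..D2. The class names, the tanh hidden layer, the linear output layer and the weight layout `W[d][unit][input]` are all assumptions. They do not describe the configuration used in this work.

```python
import math
from collections import deque
from typing import List, Sequence

Tensor3 = List[List[List[float]]]  # indexed [delay][unit][input]


class TappedDelayLine:
    """Holds x(t), x(t-1), ..., x(t-delays); all taps start at zero."""

    def __init__(self, delays: int, width: int):
        self.buf = deque([[0.0] * width for _ in range(delays + 1)], maxlen=delays + 1)

    def push(self, x: Sequence[float]) -> List[List[float]]:
        self.buf.appendleft(list(x))  # newest first, so buf[d] is x(t-d)
        return list(self.buf)


class DTDNN:
    """Two-layer distributed TDNN: a delay line before each layer."""

    def __init__(self, W1: Tensor3, b1: List[float], W2: Tensor3, b2: List[float]):
        self.W1, self.b1, self.W2, self.b2 = W1, b1, W2, b2
        self.tdl1 = TappedDelayLine(len(W1) - 1, len(W1[0][0]))  # input taps
        self.tdl2 = TappedDelayLine(len(W2) - 1, len(b1))        # hidden taps

    def step(self, p: Sequence[float]) -> List[float]:
        taps = self.tdl1.push(p)
        y = [math.tanh(sum(self.W1[d][j][i] * taps[d][i]
                           for d in range(len(taps)) for i in range(len(p)))
                       + self.b1[j])
             for j in range(len(self.b1))]
        taps2 = self.tdl2.push(y)
        return [sum(self.W2[d][k][j] * taps2[d][j]
                    for d in range(len(taps2)) for j in range(len(y)))
                + self.b2[k]
                for k in range(len(self.b2))]


# Hypothetical example: 1 input, 1 hidden unit, 1 output. Only the input tap
# with delay 2 is weighted, so an impulse reaches the output two steps later.
net = DTDNN(W1=[[[0.0]], [[0.0]], [[1.0]]], b1=[0.0], W2=[[[1.0]]], b2=[0.0])
print([round(net.step([x])[0], 4) for x in [1.0, 0.0, 0.0, 0.0]])  # [0.0, 0.0, 0.7616, 0.0]
```

The delayed response is the "memory" that lets the network relate the current output to past inputs, which is what distinguishes it from the static MLP of Figure 4.1(b).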

The choice of ANNs to model a computing process such as real-time rendering can be readily explained. First, the dynamics that arise from the delay units within an ANN's structure suggest a correspondence with the architecture of current computing hardware. For example, a delay usually occurs in embedded circuits when data are transferred between the processor and memory units.
