
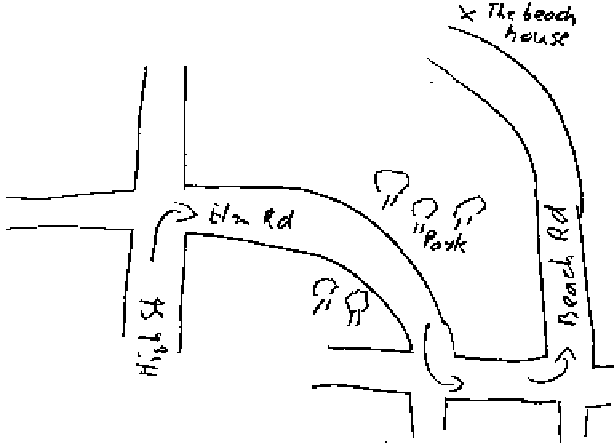

Fig. 6.6 A (fictitious) sketch map

buildings). Language understanding is particularly useful in multimodal settings, where the system also accepts verbal input. In human-human communication, it is common for communication partners to explain their sketch verbally while drawing. Multimodal input may also help the system interpret what is being drawn, provided it has sufficient NLP capabilities. Finally, drawing skills determine how well a human or computer sketcher can translate their conceptual ideas into a recognizable drawing.

Visual understanding, i.e., the initial processing of the sketched input, may begin by simplifying the line strokes drawn by the user (e.g., by employing the well-

by the user; some may simply result from limited motor skill or input resolution.
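The sentence naming the simplification algorithm is cut off in this excerpt. As an illustration only, the sketch below uses the Ramer-Douglas-Peucker algorithm, one widely used line-simplification method; whether it is the algorithm the original text refers to is an assumption. Points are assumed to be (x, y) tuples, and the tolerance `epsilon` and the function names `simplify` and `perpendicular_distance` are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def perpendicular_distance(p: Point, a: Point, b: Point) -> float:
    """Distance from p to the line through a and b (or to a if a == b)."""
    (x, y), (x1, y1), (x2, y2) = p, a, b
    dx, dy = x2 - x1, y2 - y1
    length = math.hypot(dx, dy)
    if length == 0.0:
        return math.hypot(x - x1, y - y1)
    # |cross product| / base length = height of the triangle (a, b, p)
    return abs(dx * (y1 - y) - (x1 - x) * dy) / length


def simplify(stroke: List[Point], epsilon: float) -> List[Point]:
    """Ramer-Douglas-Peucker: keep only vertices that deviate more than epsilon."""
    if len(stroke) < 3:
        return list(stroke)
    first, last = stroke[0], stroke[-1]
    # Find the interior vertex farthest from the chord first-last.
    index, max_dist = 0, -1.0
    for i in range(1, len(stroke) - 1):
        d = perpendicular_distance(stroke[i], first, last)
        if d > max_dist:
            index, max_dist = i, d
    if max_dist <= epsilon:
        # All interior vertices are treated as jitter and dropped.
        return [first, last]
    # Otherwise split at the farthest vertex and simplify both halves.
    left = simplify(stroke[: index + 1], epsilon)
    right = simplify(stroke[index:], epsilon)
    return left[:-1] + right  # drop the duplicated split vertex


# A hand-drawn "L" with small wobbles along both legs.
stroke = [(0, 0), (1, 0.1), (2, -0.1), (3, 0.05), (4, 0),
          (4.1, 1), (4, 2), (3.9, 3), (4, 4)]
print(simplify(stroke, 0.5))  # [(0, 0), (4, 0), (4, 4)]
```

A larger `epsilon` removes more jitter but risks dropping corners the user intended; a suitable value would depend on the input resolution of the drawing device.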

The next step is to aggregate individual strokes into objects. According to Blaser and

the beginning of a new object, whether it belongs to the current object, or whether it is an addition to an already existing object. The drawing sequence plays a crucial role here, i.e., the order in which strokes are produced and the pauses made between them. The location of the new stroke relative to other objects also needs to be considered. Boundary zones around objects are used to decide whether a stroke is part of that object (if the start or end point of the stroke falls within this zone) or not (see
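A minimal sketch of the grouping step, assuming a simple model that the text does not specify: each stroke carries pen-down and pen-up timestamps, and an object's boundary zone is taken to be its bounding box enlarged by a fixed `buffer`. The names `Stroke`, `SketchObject`, `touches`, `group_strokes`, and the thresholds `buffer` and `max_pause` are hypothetical. A stroke drawn shortly after the previous one and touching the current object continues it; otherwise it is added to the first existing object whose zone contains its start or end point, and failing that it starts a new object.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class Stroke:
    points: List[Point]  # sampled pen positions, in drawing order
    t_start: float       # time (s) the pen touched down
    t_end: float         # time (s) the pen was lifted


@dataclass
class SketchObject:
    strokes: List[Stroke] = field(default_factory=list)

    def in_boundary_zone(self, p: Point, buffer: float) -> bool:
        """True if p lies inside the object's bounding box grown by `buffer`."""
        xs = [x for s in self.strokes for x, _ in s.points]
        ys = [y for s in self.strokes for _, y in s.points]
        return (min(xs) - buffer <= p[0] <= max(xs) + buffer
                and min(ys) - buffer <= p[1] <= max(ys) + buffer)


def touches(obj: SketchObject, stroke: Stroke, buffer: float) -> bool:
    """A stroke touches an object if its start or end point is in the zone."""
    return any(obj.in_boundary_zone(p, buffer)
               for p in (stroke.points[0], stroke.points[-1]))


def group_strokes(strokes: List[Stroke], buffer: float = 5.0,
                  max_pause: float = 1.0) -> List[SketchObject]:
    objects: List[SketchObject] = []
    current: Optional[SketchObject] = None
    last_end = 0.0
    for stroke in strokes:  # strokes must be given in drawing order
        target: Optional[SketchObject] = None
        # Same object: short pause since the last stroke and spatially close.
        if (current is not None and stroke.t_start - last_end <= max_pause
                and touches(current, stroke, buffer)):
            target = current
        else:
            # Addition to any existing object whose zone it touches
            # (first match in creation order).
            target = next((o for o in objects if touches(o, stroke, buffer)), None)
        if target is None:
            # Neither case applies: the stroke begins a new object.
            target = SketchObject()
            objects.append(target)
        target.strokes.append(stroke)
        current, last_end = target, stroke.t_end
    return objects
```

Bounding boxes keep the example short; tighter zones (for example, a buffer around the strokes themselves) and thresholds tuned to the user's drawing speed could reduce false assignments.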

Once an object has been identified, it is processed further to close any missing links, remove overshoots, and determine whether the object represents a line, a region, or a symbol. For region objects (polygons), edges may be connected
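The clean-up and classification step can be illustrated with a deliberately simple sketch. It assumes an object is given as a single ordered list of (x, y) vertices and applies three hypothetical rules: an object whose extent is below `symbol_size` is treated as a symbol; if the two endpoints lie within `snap_tol` of each other, the missing link is closed by snapping the end onto the start; and if the last segment crosses the first segment, the overshoot is trimmed at the crossing point. In the latter two cases the object becomes a region, otherwise a line. The function names and thresholds are illustrative assumptions, not the method described in the source.

```python
import math
from typing import List, Optional, Tuple

Point = Tuple[float, float]


def segment_intersection(p: Point, p2: Point, q: Point, q2: Point) -> Optional[Point]:
    """Crossing point of segments p-p2 and q-q2, or None if they do not cross.

    Parallel (including collinear) segments are treated as non-crossing.
    """
    r = (p2[0] - p[0], p2[1] - p[1])
    s = (q2[0] - q[0], q2[1] - q[1])
    denom = r[0] * s[1] - r[1] * s[0]  # 2D cross product r x s
    if denom == 0:
        return None
    qp = (q[0] - p[0], q[1] - p[1])
    t = (qp[0] * s[1] - qp[1] * s[0]) / denom  # position along p-p2
    u = (qp[0] * r[1] - qp[1] * r[0]) / denom  # position along q-q2
    if 0 <= t <= 1 and 0 <= u <= 1:
        return (p[0] + t * r[0], p[1] + t * r[1])
    return None


def classify_object(points: List[Point], snap_tol: float = 2.0,
                    symbol_size: float = 3.0) -> Tuple[str, List[Point]]:
    """Return ("symbol" | "region" | "line", cleaned vertex list)."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    # Very small objects are treated as symbols (hypothetical size rule).
    if max(max(xs) - min(xs), max(ys) - min(ys)) < symbol_size:
        return "symbol", list(points)
    first, last = points[0], points[-1]
    # Missing link: endpoints almost meet, so snap the end onto the start.
    if math.dist(first, last) <= snap_tol:
        return "region", points[:-1] + [first]
    # Overshoot: last segment crosses the first one; trim both at the crossing.
    if len(points) >= 4:
        ix = segment_intersection(points[0], points[1], points[-2], points[-1])
        if ix is not None:
            return "region", [ix] + points[1:-1] + [ix]
    return "line", list(points)
```

Only the first and last segments are compared for overshoots here; overshoots that cross other edges, or dangling ends on line objects, would need additional rules.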
