Information Technology Reference

In-Depth Information

Intelligent Essay Assessor (Landauer, Foltz, & Laham, 1998), Intellimetric (Elliot, 2001), a new version of the Project Essay Grade (PEG; Page, 1994), and e-rater (Burstein et al., 1998).

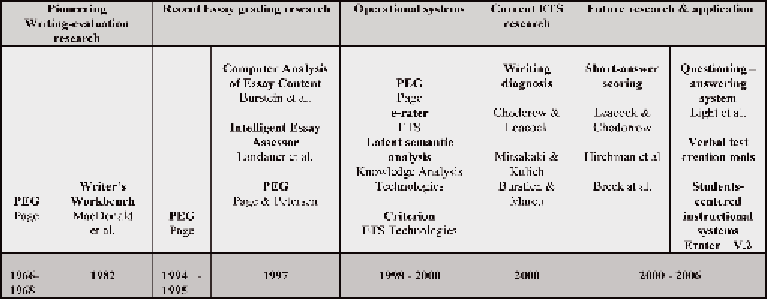

Ellis Page set the stage for automated writing evaluation (see the timeline in Figure 1). Recognizing the heavy demand placed on teachers and large-scale testing programs in evaluating student essays, Page developed an automated essay-grading system called Project Essay Grade (PEG). He started with a set of student essays that teachers had already graded. He then experimented with a variety of automatically extractable textual features and applied multiple linear regression to determine the combination of weighted features that best predicted the teachers' grades. His system could then score other essays using the same set of weighted features. In the 1960s, the kinds of features that could be extracted automatically from text were limited to surface features. Some of the most predictive features Page found included average word length, essay length in words, number of commas, number of prepositions, and number of uncommon words, the latter being negatively correlated with essay scores.
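The regression approach described for PEG can be illustrated with a minimal sketch. It is not Page's implementation: the preposition list, the function names, and the choice of four features are assumptions made for illustration, and the weights are fitted by ordinary least squares using the normal equations.

```python
import re

# Hypothetical stand-in list; the word lists Page used are not given in the text.
PREPOSITIONS = {"of", "in", "on", "at", "by", "for", "with", "to", "from"}


def surface_features(essay):
    """Return [average word length, length in words, commas, prepositions]."""
    words = re.findall(r"[A-Za-z']+", essay)
    n = len(words)
    avg_len = sum(len(w) for w in words) / n if n else 0.0
    commas = essay.count(",")
    preps = sum(1 for w in words if w.lower() in PREPOSITIONS)
    return [avg_len, float(n), float(commas), float(preps)]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_weights(feature_rows, grades):
    """Least-squares fit of (X^T X) w = X^T y; the first weight is the intercept."""
    rows = [[1.0] + list(r) for r in feature_rows]
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, grades)) for i in range(k)]
    return solve(xtx, xty)


def predict(weights, features):
    """Score a new essay with the fitted intercept and feature weights."""
    return weights[0] + sum(w * f for w, f in zip(weights[1:], features))
```

In use, `surface_features` would be applied to each teacher-graded essay, `fit_weights` would be run on the resulting rows and the teachers' grades, and `predict` would then score unseen essays. The fit needs more essays than features, and the features must not be linearly dependent, or the system of equations has no unique solution.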

In the early 1980s, the Writer's Workbench (WWB) tool set took a first step toward this goal. WWB was not an essay-scoring system. Instead, it aimed to provide helpful feedback to writers about spelling, diction, and readability. In addition to its spelling program, one of the first spelling checkers, WWB included a diction program that automatically flagged commonly misused and pretentious words, such as regardless and utilize. It also included programs for computing some standard readability measures based on word, syllable, and sentence counts, and in the process it flagged lengthy sentences as potentially problematic. Although the WWB programs barely scratched the surface of text, they were a step in the right direction for the automated analysis of writing quality.
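The kinds of checks attributed to WWB can be sketched in a few lines. This is an illustration, not WWB's code: the text does not say which readability measures WWB computed, so the Flesch Reading Ease formula is used here as one standard measure built from word, syllable, and sentence counts. The syllable counter is a rough vowel-group heuristic, and the 30-word sentence threshold and the flag list (limited to the two words named above) are assumptions.

```python
import re

# Illustrative flag list: only the two example words named in the text.
FLAGGED_WORDS = {"regardless", "utilize"}
# Hypothetical threshold for what counts as a lengthy sentence.
LONG_SENTENCE_WORDS = 30


def split_sentences(text):
    """Split on runs of sentence-ending punctuation and drop empty pieces."""
    return [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]


def words_in(text):
    """Return alphabetic tokens (apostrophes allowed) in order of appearance."""
    return re.findall(r"[A-Za-z']+", text)


def count_syllables(word):
    """Rough heuristic: one syllable per group of consecutive vowels, minimum one."""
    return max(1, len(re.findall(r"[aeiouy]+", word.lower())))


def flesch_reading_ease(text):
    """Flesch Reading Ease: 206.835 - 1.015 * (words/sentences) - 84.6 * (syllables/words)."""
    sentences = split_sentences(text)
    words = words_in(text)
    if not sentences or not words:
        return None
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


def flag_diction(text):
    """Return every word in the text that appears on the flag list."""
    return [w for w in words_in(text) if w.lower() in FLAGGED_WORDS]


def flag_long_sentences(text, limit=LONG_SENTENCE_WORDS):
    """Return sentences containing more than `limit` words."""
    return [s for s in split_sentences(text) if len(words_in(s)) > limit]
```

Because the vowel-group heuristic miscounts some words (silent final vowels, for example), the resulting score should be read as approximate.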

In February 1999, e-rater became fully operational within ETS's Online Scoring Network for scoring GMAT essays. For low-stakes writing-evaluation applications, such as a Web-based practice essay system, a single reading by an automated system is often acceptable and economically preferable. The new version of e-rater (V.2) differs from other automated essay scoring systems in several important respects. Its main innovations are a small, intuitive, and meaningful set of features used for scoring; a single scoring model and set of standards that can be used across all prompts of an assessment; and modeling procedures that are transparent and flexible and can be based entirely on expert judgment.

Figure 2 shows a common framework of automated essay grading systems. Most of the modern systems train the system

Figure 1. A timeline of research developments in writing evaluation
