Information Technology Reference

In-Depth Information

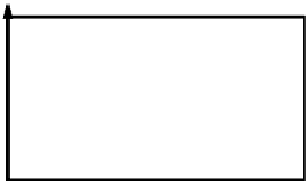

the low bias and the low variance is necessary, as demonstrated in Figure 3.17 on

the example of

polynomial curve fitting

of a set of given data points.

A

B

*

*

*

*

*

*

*

*

*

0

x

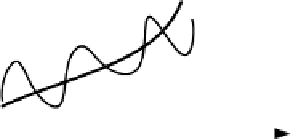

Figure 3.17.

Polynomial curve fitting of data

A polynomial of degree

n

can exactly fit a set of (

n

+ 1) data points, say

training samples. If the degree of the polynomial is lower, then the fitting will not

be exact because the polynomial (as a regression curve

A

) cannot pass through all

data points (Figure 3.17). The fitting will be erroneous and will suffer from

bias

error

, formulated as the minimized value of the mean square error. In the opposite

case, if the degree of the polynomial is higher than the degree required for exact

fitting of the given training data set, the excess number of it's degrees will lead to

oscillations because of missing constraints (curve

B

in Figure 3.17). The

polynomial approximation will, therefore, suffer from

variance error

.

Consequently, a polynomial of the optimal degree should be chosen for data fitting

that will provide a low bias error as well as a low variance error, in order to resolve

the

bias-variance dilemma.

Translated in terms of neural network training, polynomial fitting is seen as an

optimal nonlinear regression problem (German et

al.

, 1992). This means that, in

order to fit a given data set optimally using neural network, we need a

corresponding model implemented as a structured neural network with a number of

interconnected neurons in hidden layer. If the size of the selected network (or the

order of its model) is too low, then the network will not be able to fit the data

optimally and the data fitting will be accompanied by a bias error that will

gradually decrease with increasing network size until it reaches its minimal value.

Increasing the network size beyond this point, the network will also start learning

the noise present in the training data, because there will be more internal

parameters than are required to fit the given data. With this, also the variance error

of the network will increase. The cross-point of the bias and the variance error

curve will guarantee the lowest bias error and the lowest variance error for fitting

the given data set. The corresponding network size (

i.e

. the corresponding number

of neurons) will solve the given data fitting problem optimally. At this point the

network training should be stopped, which is known as

early stopping

or

stopping

with cross-validation

. The network trained in this way will guarantee the

best

generalization

.

For probabilistic consideration of polynomial fitting, the expected value of the

minimum square error across the set of training data

Search WWH ::

Custom Search