Information Technology Reference


$$
\Delta w_i = -\eta \frac{\partial S_j}{\partial w_i} \tag{3.4}
$$

where η is a positive learning parameter that determines the speed of convergence to the minimum.
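As an illustration of the update rule (3.4) before any derivative is worked out analytically, the sketch below estimates ∂S_j/∂w_i by central finite differences and takes one step of size η against it. It assumes S_j = ½e_j², with e_j = d_j − y_j, and a logistic sigmoid activation; the function names, the sample values and η = 0.5 are hypothetical choices made only for this example.

```python
import math

def sigmoid(u):
    # Assumed activation f(u): logistic sigmoid.
    return 1.0 / (1.0 + math.exp(-u))

def squared_error(w, x, d):
    # Assumed form of S_j: half the squared error, 0.5 * (d_j - y_j)**2.
    u = sum(wi * xi for wi, xi in zip(w, x))
    return 0.5 * (d - sigmoid(u)) ** 2

def gradient_step(w, x, d, eta, h=1e-6):
    """Apply delta_w_i = -eta * dS_j/dw_i (Eq. 3.4) to every weight.

    The partial derivatives are estimated with central finite differences,
    so this sketch does not depend on the analytic chain-rule result.
    """
    new_w = []
    for i in range(len(w)):
        w_plus = list(w)
        w_plus[i] += h
        w_minus = list(w)
        w_minus[i] -= h
        grad_i = (squared_error(w_plus, x, d) - squared_error(w_minus, x, d)) / (2 * h)
        new_w.append(w[i] - eta * grad_i)
    return new_w

x = [1.0, 0.5, -1.0]   # x_0 = 1 is the bias input
w = [0.1, -0.2, 0.3]
d = 1.0
before = squared_error(w, x, d)
w = gradient_step(w, x, d, eta=0.5)
after = squared_error(w, x, d)
print(before, after)
```

For a sufficiently small η, one step of this kind should lower S_j, which is what "convergence to the minimum" refers to.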

w

0

bias

X

0

= 1

x

1

w

1

x

2

u

j

output

w

2

Summing

Element

f

(

u

j

)

:

:

y

j

x

n

w

n

f'

(

u

j

)

weights

desired

output

-

Learning rate

+

Training

Algorithm

d

j

Summing

Element

Product

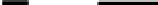

Figure 3.12.

Backpropagation training implementation for a single neuron
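The loop in Figure 3.12 can be sketched in a few lines. This is a minimal sketch, not the book's implementation. It reads the diagram as computing e_j = d_j − y_j at the second summing element, with the "Product" block forming Δw_i = η e_j f'(u_j) x_i. That is the weight change (3.4) gives if one assumes S_j = ½e_j². The logistic sigmoid, the AND training set, η = 1.0 and the epoch count are all hypothetical choices for illustration.

```python
import math

def f(u):
    # Assumed activation: logistic sigmoid.
    return 1.0 / (1.0 + math.exp(-u))

def f_prime(u):
    # Derivative of the logistic sigmoid: f'(u) = f(u) * (1 - f(u)).
    y = f(u)
    return y * (1.0 - y)

def train_step(w, x, d, eta):
    """One pass through the Figure 3.12 loop for a single sample.

    x must contain the bias input x_0 = 1 as its first element.
    """
    u = sum(wi * xi for wi, xi in zip(w, x))   # summing element: u_j
    y = f(u)                                    # output: y_j = f(u_j)
    e = d - y                                   # error: e_j = d_j - y_j
    # Product block (assumed update): delta_w_i = eta * e_j * f'(u_j) * x_i
    return [wi + eta * e * f_prime(u) * xi for wi, xi in zip(w, x)]

def predict(w, x):
    return f(sum(wi * xi for wi, xi in zip(w, x)))

# Hypothetical training set: logical AND, with x_0 = 1 prepended as bias.
samples = [([1, 0, 0], 0), ([1, 0, 1], 0), ([1, 1, 0], 0), ([1, 1, 1], 1)]
w = [0.0, 0.0, 0.0]
for epoch in range(10000):
    for x, d in samples:
        w = train_step(w, x, d, eta=1.0)

print([round(predict(w, x)) for x, _ in samples])
```

Here the weights are updated after every sample (online training), which matches the per-sample error e_j in the diagram. A batch variant would accumulate Δw_i over all samples before applying it.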

Now, taking into account that it follows from (3.3) that

$$
e_j = d_j - y_j = d_j - f(u_j), \tag{3.5}
$$

where

$$
u_j = \sum_{i=0}^{n} w_i x_i .
$$
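Because e_j in (3.5) depends on w_i only through u_j, its partial derivative should equal (∂e_j/∂u_j)(∂u_j/∂w_i) = −f'(u_j) x_i. The sketch below checks this numerically. It assumes tanh as the activation f, and the weights, inputs and desired output d_j are hypothetical values chosen only for the check.

```python
import math

def f(u):
    # Assumed activation for illustration: hyperbolic tangent.
    return math.tanh(u)

def f_prime(u):
    # d/du tanh(u) = 1 - tanh(u)**2
    return 1.0 - math.tanh(u) ** 2

def net_input(w, x):
    # u_j = sum_{i=0}^{n} w_i * x_i, with x_0 = 1 as the bias input
    return sum(wi * xi for wi, xi in zip(w, x))

def error(w, x, d):
    # e_j = d_j - y_j = d_j - f(u_j), Eq. (3.5)
    return d - f(net_input(w, x))

w = [0.2, 0.4, -0.5]
x = [1.0, 0.5, 2.0]
d = 0.3
h = 1e-6
u = net_input(w, x)

checks = []
for i in range(len(w)):
    w_plus = list(w)
    w_plus[i] += h
    w_minus = list(w)
    w_minus[i] -= h
    numeric = (error(w_plus, x, d) - error(w_minus, x, d)) / (2 * h)
    analytic = -f_prime(u) * x[i]   # de_j/du_j * du_j/dw_i
    checks.append((numeric, analytic))
    print(i, numeric, analytic)
```

The two columns should agree to within the finite-difference error.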

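By applying the chain rule,

$$
\Delta w_i = -\eta \frac{\partial S_j}{\partial w_i} = -\eta \frac{\partial S_j}{\partial e_j}\,\frac{\partial e_j}{\partial w_i}, \tag{3.6}
$$

and applying it again to Equation (3.5), we get

$$
\Delta w_i = -\eta\, e_j \frac{\partial e_j}{\partial w_i} = -\eta\, e_j \frac{\partial e_j}{\partial u_j}\,\frac{\partial u_j}{\partial w_i}. \tag{3.7}
$$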

This can further be transformed to
