
[Cluster plots: panel a) "Letters Pattern: 0"; panel b) "Hidden_Acts Pattern: 0"]

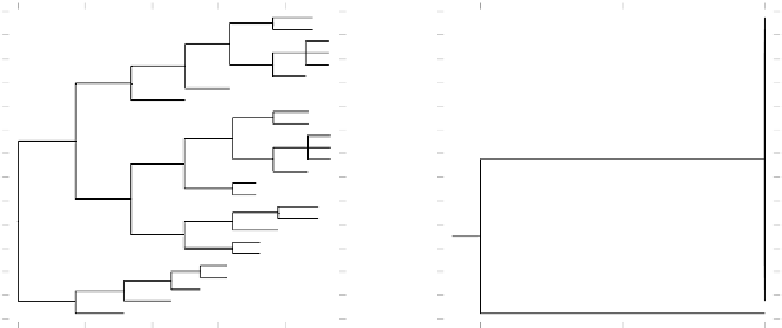

Figure 3.10: Cluster plots for letters in the digit network. a) Shows cluster plot of input letter images. b) Shows cluster plot of hidden layer digit detectors when shown these images. The hidden layer units were not activated by the majority of the letter inputs, so the representations overlap by 100 percent, meaning that the hidden layer makes no distinction between these patterns.

the hidden unit for the digit “8” responding to the sufficiently similar letter “S”, resulting in a single cluster for that hidden unit activity). However, even if things are done to remedy this problem (e.g., lowering the units' leak currents so that they respond more easily), many distinctions remain collapsed because the weights are not specifically tuned for letter patterns, and thus do not distinguish among them.

In summary, we have seen that collections of detectors operating on the feedforward flow of information from the input to the hidden layer can transform the similarity structure of the representations. This process of transformation is central to all of cognition: when we categorize a range of different objects with the same name (e.g., “chair”), or simply recognize an abstract pattern as the same regardless of the exact location or size we view this pattern in, we are transforming input signals to emphasize some distinctions and deemphasize others. We will see in subsequent chapters that by chaining together multiple stages (layers) of such transformations (as we expect happens in the cortex based on the connectivity structure discussed in the previous section), very powerful and substantial overall transformations can be produced.
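The idea that a detector layer emphasizes some distinctions and deemphasizes others can be sketched in a few lines of Python. Everything here is an illustrative assumption rather than part of the simulator: the four 6-bit input patterns, the two detector weight vectors, the fixed threshold of 2, and the use of Hamming distance as the dissimilarity measure are all made up for the example.

```python
from itertools import combinations

# Hypothetical 6-bit input patterns (two "a"-like, two "b"-like).
INPUTS = {
    "a1": [1, 1, 1, 0, 0, 0],
    "a2": [1, 1, 0, 1, 0, 0],
    "b1": [0, 0, 0, 1, 1, 1],
    "b2": [0, 1, 0, 1, 1, 0],
}

# Hypothetical detectors: one tuned to the left half, one to the right half.
DETECTORS = [
    [1, 1, 1, 0, 0, 0],
    [0, 0, 0, 1, 1, 1],
]
THRESHOLD = 2  # assumed: a unit fires when at least 2 of its inputs are on


def hamming(a, b):
    """Number of positions at which two equal-length patterns differ."""
    return sum(x != y for x, y in zip(a, b))


def hidden_pattern(pattern):
    """Binary hidden representation: one thresholded detector per unit."""
    nets = [sum(w * x for w, x in zip(weights, pattern)) for weights in DETECTORS]
    return [1 if net >= THRESHOLD else 0 for net in nets]


hidden = {name: hidden_pattern(p) for name, p in INPUTS.items()}

# Compare pairwise distances before and after the transformation.
for first, second in combinations(INPUTS, 2):
    d_in = hamming(INPUTS[first], INPUTS[second])
    d_hid = hamming(hidden[first], hidden[second])
    print(f"{first}-{second}: input distance {d_in}, hidden distance {d_hid}")
```

In this toy setup, `a2` is equally far from `a1` and from `b2` at the input, but at the hidden layer `a1` and `a2` become identical while `a2` and `b2` stay distinct: one distinction is collapsed and another is preserved.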

3.3.1 Exploration of Transformations

Now, we will explore the ideas just presented.

Open the project transform.proj.gz in chapter_3 to begin.

You will see a network (the digits network), the xform_ctrl overall control panel, and the standard PDP++ Root window (see appendix A for more information).

Let's first examine the network, which looks just like figure 3.6. It has a 5x7 Input layer for the digit images, and a 2x5 Hidden layer, with each of the 10 hidden units representing a digit.


Select the r.wt button (lower left of the window; you may need to scroll), and then click on each of the different hidden units.

You will see that the weights exactly match the images of the digits the units represent, just as our single 8 detector from the previous chapter did.

Although it should be pretty obvious from these weights how each unit will respond to the set of digit input patterns that exactly match the weight patterns, let's explore this nonetheless.
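Before stepping through the simulator, the expected behavior can be previewed with a rough Python sketch. It is not PDP++ code: the three 5-wide by 7-tall bitmaps are made up for illustration (they are not the project's digit patterns), and the all-or-none threshold is an assumed stand-in for the units' actual activation dynamics.

```python
# Illustrative 5-wide by 7-tall bitmaps; NOT the patterns from the project.
DIGIT_IMAGES = {
    "0": [".###.", "#...#", "#...#", "#...#", "#...#", "#...#", ".###."],
    "1": ["..#..", ".##..", "..#..", "..#..", "..#..", "..#..", ".###."],
    "7": ["#####", "....#", "...#.", "..#..", "..#..", "..#..", "..#.."],
}


def to_vector(rows):
    """Flatten a bitmap into a list of 35 binary input activations."""
    return [1 if ch == "#" else 0 for row in rows for ch in row]


def net_input(weights, pattern):
    """Weighted sum of the input pattern for one hidden unit."""
    return sum(w * x for w, x in zip(weights, pattern))


# Each detector's weights are a copy of the image of the digit it represents.
WEIGHTS = {digit: to_vector(rows) for digit, rows in DIGIT_IMAGES.items()}


def responses(pattern):
    """Assumed rule: a unit fires only if all of its weighted pixels are on."""
    return {
        digit: int(net_input(weights, pattern) >= sum(weights))
        for digit, weights in WEIGHTS.items()
    }


for digit, rows in DIGIT_IMAGES.items():
    print(digit, responses(to_vector(rows)))
```

With these particular bitmaps no digit's pixels are a subset of another's, so each input activates only the unit whose weights match it. Real digit images can overlap more heavily, which is why the choice of threshold matters.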

Select the act button to view the unit activities in the network, and hit the Step button in the control panel.
