
[Figure panels a), b), and c) each show the frequency table (columns: freq, h, data 1 2 3; 24 events in total), with the rows for h=1, for d=1 1 0, and for both together highlighted.]

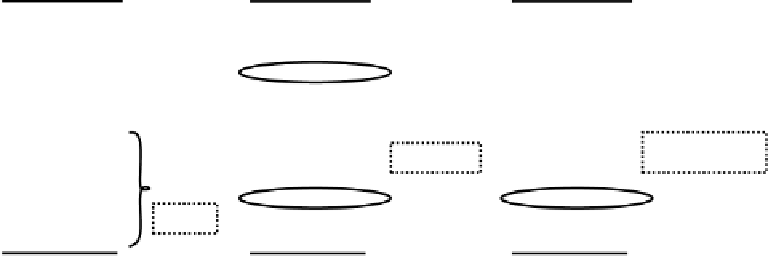

Figure 2.22: The three relevant probabilities computed from the table: a) P(h=1) = 12/24 = .5. b) P(d=1 1 0) = 3/24 = .125. c) P(h=1, d=1 1 0) = 2/24 = .0833.
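The three probabilities in the caption can be checked by summing frequencies over the matching rows of the table. The following is a minimal sketch, not code from the text: the names `TABLE`, `TOTAL`, and `prob` are hypothetical, and the rows are the frequencies as read from the figure panels, assuming h=1 marks the rows where the hypothesis is true.

```python
from fractions import Fraction

# Rows of the frequency table as (freq, h, data), as read from the figure.
# Assumption: h=1 means the hypothesis is true; data is the 3-input pattern.
TABLE = [
    (3, 0, "000"), (2, 0, "100"), (2, 0, "010"), (2, 0, "001"),
    (1, 0, "110"), (1, 0, "011"), (1, 0, "101"), (0, 0, "111"),
    (0, 1, "000"), (1, 1, "100"), (1, 1, "010"), (1, 1, "001"),
    (2, 1, "110"), (2, 1, "011"), (2, 1, "101"), (3, 1, "111"),
]

# Total number of events across all rows.
TOTAL = sum(freq for freq, _, _ in TABLE)


def prob(h=None, d=None):
    """Fraction of all events whose row matches the given h and/or data."""
    hits = sum(
        freq
        for freq, row_h, row_d in TABLE
        if (h is None or row_h == h) and (d is None or row_d == d)
    )
    return Fraction(hits, TOTAL)


p_h = prob(h=1)             # a) P(h=1)
p_d = prob(d="110")         # b) P(d=1 1 0)
p_hd = prob(h=1, d="110")   # c) P(h=1, d=1 1 0)
print(p_h, p_d, p_hd)
```

Exact fractions are used so that the results can be compared directly with 12/24, 3/24, and 2/24 without rounding.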

Equation 2.23 is the basic equation that we want the detector to solve, and if we had a table like the one in figure 2.21, then we have just seen that this equation is easy to solve. However, we will see in the next section that having such a table is nearly impossible in the real world. Thus, in the remainder of this section, we will do some algebraic manipulations of equation 2.23 that result in an equation that will be much easier to solve without a table. We will work through these manipulations now, with the benefit of our table, so that we can plug in objective probabilities and verify that everything works. We will then be in a position to jump off into the world of subjective probabilities.

The key player in our reformulation of equation 2.23 is another kind of conditional probability called the likelihood. The likelihood is just the opposite conditional probability from the one in equation 2.23: the conditional probability of the data given the hypothesis,

$$P(d \mid h) = \frac{P(h, d)}{P(h)} \tag{2.25}$$

It is a little bit strange to think about computing the probability of the data, which is, after all, just what was given to you by your inputs (or your experiment), based on your hypothesis, which is the thing you aren't so sure about! However, it all makes perfect sense if instead you think about how likely you would have predicted the data based on the assumptions of your hypothesis. In other words, the likelihood simply computes how well the data fit with the hypothesis. As before, the basic data for the likelihood come from the same joint probability of the hypothesis and the data, but they are scoped in a different way. This time, we scope by all the cases where the hypothesis was true, and determine what fraction of this total had the particular input data state:

$$P(d = 1\,1\,0 \mid h = 1) = \frac{P(h = 1, d = 1\,1\,0)}{P(h = 1)} \tag{2.26}$$

which is (2/24) / (12/24) or .167. Thus, one would expect to receive this data .167 of the time when the hypothesis is true, which tells you how likely you would be to predict this data knowing only that the hypothesis is true.

The main advantage of a likelihood function is that we can often compute it directly as a function of the way our hypothesis is specified, without requiring that we actually know the joint probability P(h, d) (i.e., without requiring a table of all possible events and their frequencies). This makes sense if you again think in terms of predicting data: once you have a well-specified hypothesis, you should in principle be able to predict how likely any given set of data would be under that hypothesis (i.e., assuming the hypothesis is true). We will see more of how this works in the case of the neuron-as-detector in the next section.
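As a check on the likelihood value of .167, the joint probability can be divided by the probability of the hypothesis using the table frequencies. This is a minimal sketch under stated assumptions: the names `FREQ`, `joint`, `p_hypothesis`, and `likelihood` are hypothetical, and the frequencies are those read from the table in the figure, with h=1 meaning the hypothesis is true.

```python
from fractions import Fraction

# Frequencies keyed by (h, data), as read from the table in the figure.
# Assumption: h=1 means the hypothesis is true.
FREQ = {
    (0, "000"): 3, (0, "100"): 2, (0, "010"): 2, (0, "001"): 2,
    (0, "110"): 1, (0, "011"): 1, (0, "101"): 1, (0, "111"): 0,
    (1, "000"): 0, (1, "100"): 1, (1, "010"): 1, (1, "001"): 1,
    (1, "110"): 2, (1, "011"): 2, (1, "101"): 2, (1, "111"): 3,
}
TOTAL = sum(FREQ.values())  # number of events in the whole table


def joint(h, d):
    """P(h, d): fraction of all events with this hypothesis and data."""
    return Fraction(FREQ[(h, d)], TOTAL)


def p_hypothesis(h):
    """P(h): fraction of all events with this hypothesis value."""
    return Fraction(sum(f for (row_h, _), f in FREQ.items() if row_h == h), TOTAL)


def likelihood(d, h):
    """P(d | h): the joint probability scoped to the cases where h holds."""
    return joint(h, d) / p_hypothesis(h)


lik = likelihood("110", 1)
print(lik, round(float(lik), 3))

# Scoping by h=1 yields a proper distribution over the data patterns.
patterns = sorted({d for (_, d) in FREQ})
total_given_h = sum(likelihood(d, 1) for d in patterns)
print(total_given_h)
```

Because the likelihood is scoped to the h=1 rows only, its values over all eight data patterns sum to 1, which is a quick sanity check on the table-based computation.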
