
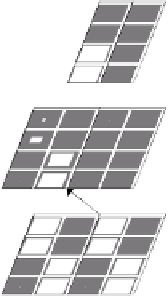


fact that there are only two such units, one for each dimension, with each unit fully connected with the feature units in the hidden layer from the corresponding dimension.
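The connectivity of the two PFC layers can be sketched as a pair of lookup tables. This is only an illustrative sketch, not the simulator's actual project file: the names `hidden_units`, `pfc_dim_targets`, and `pfc_feat_targets` are hypothetical, each feature is treated as a single entry (ignoring that a feature is composed of 2 active units), and it is assumed that a location-independent PFC_Feat unit connects to its feature on both the left and right sides.

```python
# Hypothetical layout: hidden features are indexed by (side, dimension, feature).
SIDES = ("L", "R")          # left and right stimulus locations
DIMS = ("A", "B")           # the two stimulus dimensions
N_FEATURES = 4              # featural units per dimension, as stated in the text

hidden_units = [(s, d, f) for s in SIDES for d in DIMS for f in range(N_FEATURES)]

# PFC_Dim: one unit per dimension, fully connected with every hidden
# feature unit belonging to that dimension.
pfc_dim_targets = {
    d: [h for h in hidden_units if h[1] == d]
    for d in DIMS
}

# PFC_Feat: one unit per (dimension, feature) identity. Assumption: because
# this layer encodes identity but not location, each unit projects to the
# matching feature at both locations.
pfc_feat_targets = {
    (d, f): [h for h in hidden_units if h[1] == d and h[2] == f]
    for d in DIMS
    for f in range(N_FEATURES)
}
```

Under these assumptions, each PFC_Dim unit biases a whole dimension at once, whereas each PFC_Feat unit biases only one feature identity.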

During initial learning, the network has no difficulty activating the target item representation, because all hidden units are roughly equally likely to get activated, and the correct item will get reinforced through learning. However, if the target is then switched to one that was previously irrelevant (i.e., a reversal), then the irrelevant item will not tend to be activated in the hidden layer, making it difficult to learn the new association. The top-down PFC biasing can overcome this problem by supporting the activation of the new target item, giving it an edge in the kWTA competition.
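How a top-down bias can tip the kWTA competition may be easier to see in a toy example. The sketch below is a simplification: it uses a hard top-k rule rather than the inhibition-based kWTA computation, and the function `kwta`, the net-input values, and the bias strength are all hypothetical numbers chosen for illustration.

```python
def kwta(net_inputs, k):
    """Simplified kWTA: the k units with the largest net input become active."""
    ranked = sorted(range(len(net_inputs)),
                    key=lambda i: net_inputs[i], reverse=True)
    winners = set(ranked[:k])
    return [1 if i in winners else 0 for i in range(len(net_inputs))]

# Hypothetical bottom-up net inputs after initial learning:
# units 0-1 code the old target, units 2-3 the previously irrelevant item.
bottom_up = [0.9, 0.8, 0.3, 0.2]

# Hypothetical top-down PFC bias supporting the new target (units 2-3).
pfc_bias = [0.0, 0.0, 0.8, 0.8]

# Without the bias, the old target keeps winning the competition.
without_bias = kwta(bottom_up, k=2)

# With the bias added to the net input, the new target wins instead.
biased_input = [b + p for b, p in zip(bottom_up, pfc_bias)]
with_bias = kwta(biased_input, k=2)
```

In this toy case the previously irrelevant units never become active without the bias, so learning has nothing to reinforce; with the bias they win the competition and the new association can be learned.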

The AC layer is the adaptive critic for the temporal-differences (TD) reward-based learning mechanism, which controls the dopamine-based gating of PFC representations and is thus critical for the trial-and-error search process. In this model, there is no temporal gap between the stimulus, response, and subsequent reward or lack thereof, but the AC still plays a critical role by learning to expect reward when the network is performing correctly, and having these expectations disconfirmed when the rule changes and the network starts performing incorrectly. By learning to expect rewards during correct behavior, the AC ends up stabilizing the PFC representations by not delivering an updating gating signal. When these expectations are violated, the AC destabilizes the PFC and facilitates the switching to a new representation.
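The stabilizing and destabilizing roles of the AC can be sketched as a simple gating rule. This is a hedged illustration rather than the model's actual equations: the functions `td_delta` and `update_pfc` and the `threshold` parameter are hypothetical, the TD signal is assumed to be the plus-phase AC activation minus the minus-phase (expected) activation, and "destabilizing" is approximated here by clearing the PFC pattern.

```python
def td_delta(ac_minus, ac_plus):
    """Assumed TD signal: outcome (plus phase) minus expectation (minus phase)."""
    return ac_plus - ac_minus

def update_pfc(pfc, hidden, delta, threshold=0.5):
    """Gate the PFC based on the sign of the TD signal (hypothetical rule)."""
    if delta > threshold:
        # Unexpected reward: gate opens, PFC encodes the current hidden pattern.
        return list(hidden)
    if delta < -threshold:
        # Expected reward not delivered: PFC is destabilized (cleared here).
        return [0.0] * len(pfc)
    # Expectation met: no gating signal, PFC keeps its representation.
    return list(pfc)

pfc = [0.0, 0.0]
hidden = [1.0, 0.0]

# First correct response after errors: AC goes from near 0 to near 1.
pfc_after_success = update_pfc(pfc, hidden, td_delta(0.0, 1.0))

# Consistently correct and expecting it: no change in AC activation.
pfc_maintained = update_pfc(pfc_after_success, [0.0, 1.0], td_delta(1.0, 1.0))

# Rule changes: reward was expected but not received.
pfc_after_error = update_pfc(pfc_maintained, [0.0, 1.0], td_delta(1.0, 0.0))
```

Note that in the maintained case the PFC ignores the new hidden pattern entirely, which is what keeps the current rule representation stable during correct performance.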

More specifically, three patterns of AC unit activation (increasing, constant, and decreasing) affect the PFC units in different ways. If the network has been making errors and then makes a correct response, the AC unit transitions from near-zero to near-1 level activation, resulting in a positive TD δ value (computed as the difference in minus and plus phase AC activation, and indicating a change in expected reward), and thus in a transient strengthening of the gain on the weights from the hidden units to the PFC. This causes the PFC to encode the current pattern of hidden activity. If the network is consistently making errors or consistently performing correctly (and expecting this performance), then there is no change in the AC activation, and the

[Figure 11.12 diagram; labeled layers: Input, Hidden, Output, AC, PFC_Feat, PFC_Dim; labeled columns: Dim A, Dim B, L, R.]

Figure 11.12: Dynamic categorization network: The input display contains two stimuli, one on the left and one on the right, and the network must choose one. The two stimuli differ along two dimensions (Dim A and B). Individual features within a dimension are composed of 2 active units within a column. The Input and Hidden layers encode these features separately for the left (L) and right (R) side display. Response (Output) is generated by learned associations from the Hidden layer. Because there are a large number of features in the input, the hidden layer cannot represent all of them at the same time. Learning in the hidden layer ensures that relevant features are active, and irrelevant ones are therefore suppressed. This suppression impairs learning when the irrelevant features become relevant. The prefrontal cortex (PFC) layers (representing feature-level and dimension-level information) help by more rapidly focusing hidden layer processing on previously irrelevant features.

The prefrontal cortex (PFC) areas are PFC_Feat, corresponding to orbital areas that represent featural information, and PFC_Dim, which corresponds to the dorsolateral areas that represent more abstract dimension-level information. These areas are reciprocally interconnected with the hidden units, and their activity thus biases the hidden units. The featural nature of the PFC_Feat representations is accomplished by having the individual PFC units connect in a one-to-one fashion with the featural units within the 2 dimensions. We only included one set of 4 such featural units per dimension because this area does not encode the location of the features, only their identity. The dimensional nature of the PFC_Dim representations comes from the
