Information Technology Reference

In-Depth Information

Gestalt

Gestalt Context

(subject) and the teacher (co-agent). This syntactic in-

formation is conveyed by the cues of word order and

function words

like

with

.

Importantly, the surface form sentences are always

partial and sometimes vague representations of the ac-

tual underlying events. The partial nature of the sen-

tences is produced by only specifying at most one

(and sometimes none) of the modifying features of the

event. The vagueness comes from using vague alterna-

tive words such as:

someone, adult, child, something,

food, utensil,

and

place

. To continue the busdriver eat-

ing example, some sentences might be, “The busdriver

ate something,” or “Someone ate steak in the kitchen,”

and so on. As a result of the active/passive variation and

the different forms of partiality and vagueness in the

sentences, there are a large number of different surface

forms corresponding to each event, such that there are

8,668 total different sentences that could be presented

to the network.

Finally, we note that the determiners (

the, a,

etc.)

were not presented to the network, which simplifies the

syntactic processing somewhat.

Encode

Decode

Input

Role

Filler

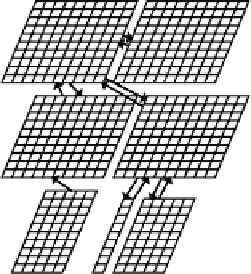

Figure 10.27:

The SG model. Localist word inputs are pre-

sented in the Input layer, encoded in a learned distributed rep-

resentation in the Encode layer, and then integrated together

over time in the Gestalt and Gestalt Context layers. Questions

regarding the sentence are posed by activating a Role unit, and

the network answers by Decoding its Gestalt representation to

produce the appropriate Filler for that role.

questions are posed to the network by activating one

of the 9 different

Role

units (

agent, action, patient,

instrument, co-agent, co-patient, location, adverb, and

recipient

), and requiring the network to produce the ap-

propriate answer in the

Filler

layer. Importantly,

the filler units represent the underlying specific con-

cepts that can sometimes be represented by ambiguous

or vague words. Thus, there is a separate filler unit for

bat (animal)

and

bat (baseball)

, and so on for the other

ambiguous words. The

Decode

hidden layer facilitates

the decoding of this information from the gestalt repre-

sentation.

The role/filler questions are asked of the network af-

ter each input, and only questions that the network could

answer based on what it has heard so far are asked. Note

that this differs from the original SG model, where all

questions were asked after each input, presumably to

encourage the network to anticipate future information.

However, even though we are not specifically forcing

it to do so, the network nevertheless anticipates future

information automatically. There are three main rea-

sons for only asking answerable questions during train-

ing. First, it just seems more plausible — one could

imagine asking oneself questions about the roles of the

Network Structure and Training

Having specified the semantics and syntax of the lin-

guistic environment, we now turn to the network (fig-

ure 10.27) and how the sentences were presented. Each

word in a sentence is presented sequentially across trials

in the

Input

layer, which has a localist representation

of the words (one unit per word). The

Encode

layer

allows the network to develop a distributed encoding of

these words, much as in the family trees network dis-

cussed in chapter 6. The subsequent

Gestalt

hidden

layer serves as the primary gestalt representation, which

is maintained and updated over time through the use of

the

Gestalt Context

layer. This context layer is

a standard SRN context layer that copies the previous

activation state from the gestalt hidden layer after each

trial.

The network is trained by asking it questions about

the information it “reads” in the input sentences.

Specifically, the network is required to answer ques-

tions of the form “who is the agent?,”“who/what is the

patient?,”“where is the location?,” and so on.

These

Search WWH ::

Custom Search