Information Technology Reference

In-Depth Information

graphs in the text multiple times.

The development of effective semantic representa-

tions in the network depends on the fact that whenever

two similar input patterns are presented, similar hidden

units will likely be activated. These hidden layer repre-

sentations should thus encode any reliable correlations

across subsets of words. Furthermore, the network will

even learn about two words that have similar meanings,

but that do not happen to appear together in the same

paragraph. Because these words are likely to co-occur

with similar other words, both will activate a common

subset of hidden units. These hidden units will learn

a similar (high) conditional probability for both words.

Thus, when each of these words is presented alone to

the network, they will produce similar hidden activation

patterns, indicating that they are semantically related.

To keep the network a manageable size, we filtered

the text before presenting it to the network, thereby

reducing the total number of words represented in

the input layer. Two different types of filtering were

performed, eliminating the least informative words:

high-frequency noncontent words and low frequency

words. The high-frequency noncontent words primar-

ily have a syntactic role, such as determiners (e.g.,

“the,”“a,”“an”), conjunctions (“and,”“or,” etc.), pro-

nouns and their possessives (“it,”“his,” etc.), prepo-

sitions (“above,”“about,” etc.), and qualifiers (“may,”

“can,” etc.). We also filtered transition words like

“however” and “because,” high-frequency verbs (“is,”

“have,”“do,”“make,”“use,” and “give”), and number

words (“one,”“two,” etc.). The full list of filtered words

can be found in the file

sem_filter.lg.list

(the

“lg” designates that this is a relatively large list of high-

frequency words to filter) in the

chapter_10

direc-

tory where the simulations are.

The low-frequency filtering removed any word that

occurred five or fewer times in the entire text. Repe-

titions of a word within a single paragraph contributed

only once to this frequency measure, because only the

single word unit can be active in the network even if

it occurs multiple times within a paragraph. The fre-

quencies of the words in this text are listed in the file

eccn_lg_f5.freq

, and a summary count of the

number of words at the lower frequencies (1-10) is

given in the file

eccn_lg_f5.freq_cnt

. The ac-

Hidden

Input

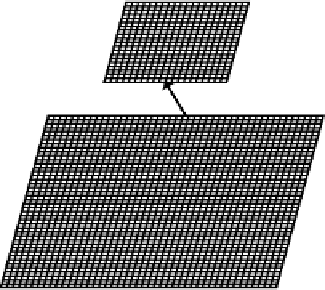

Figure 10.24:

Semantic network, with the input having one

unit per word. For training, all the words in a paragraph of

text are activated, and CPCA Hebbian learning takes place on

the resulting hidden layer activations.

competitive activation dynamics in the network can per-

form multiple constraint satisfaction to settle on a pat-

tern of activity that captures the meaning of arbitrary

groups of words, including interactions between words

that give rise to different shades of meaning. Thus, the

combinatorics of the distributed representation, with the

appropriate dynamics, allow a huge and complex space

of semantic information to be efficiently represented.

10.6.1

Basic Properties of the Model

The model has an input layer with one unit per word

(1920 words total), that projects to a hidden layer con-

taining 400 units (figure 10.24). On a given training

trial, all of the units representing the words present in

one individual paragraph are activated, and the network

settles on a hidden activation pattern as a function of

this input. If two words reliably appear together within

a paragraph, they will be semantically linked by the

network. It is also possible to consider smaller group-

ing units (e.g., sentences) and larger ones (passages),

but paragraph-level grouping has proven effective in the

LSA models. After the network settles on one para-

graph's worth of words, CPCA Hebbian learning takes

place, encoding the conditional probability that the in-

put units are active given that the hidden units are. This

process is repeated by cycling through all the para-

Search WWH ::

Custom Search