
orthography to phonology mapping. Although the success of this model provides a useful demonstration of the importance of appropriate representations, the fact that these representations had to be specially designed by the researchers and not learned by the network itself remains a problem. In the model we present here, we avoid this problem by letting the network discover appropriate representations on its own. This model is based on the ideas developed in the invariant object recognition model from chapter 8.

From the perspective of the object recognition model, we can see that the SM89 and PMSP models confront the same tradeoff between position-invariant representations that recognize objects/features in multiple locations (as emphasized in the PMSP model) and conjunctive representations that capture relations among the inputs (as accomplished by the wickelfeatures in SM89 and the conjunctive units in PMSP). Given the satisfying resolution of this tradeoff in the object recognition model through the development of a hierarchy of representations that are both increasingly invariant and increasingly featurally complex, one might expect the same approach to be applicable to word reading as well. As alluded to above, visual word recognition is also just a special case of object recognition, using the same neural pathways and thus the same basic mechanisms (e.g., Farah, 1992). Thus, we next explore a reading model based on the object recognition model.

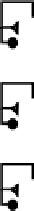

Figure 10.13: Basic structure of the orthography to phonology reading model. The orthographic input has representations of words appearing at all possible locations across a 7 letter position input. The next hidden layer receives from 3 of these slots, and forms locally invariant but also somewhat conjunctive representations. The next hidden layer uses these representations to map into our standard 7 slot, vowel-centered, repeating consonant phonological representation at the output.

These position changes are analogous to the translating input patterns in the object recognition model, though the number of different positions in the current model is much smaller (to keep the overall size of the model manageable).

The next layer up from the input, the Ortho_Code layer, is like the V2 layer in the object recognition model; it should develop locally invariant (e.g., encoding a given letter in any of the 3 slots) but also conjunctive representations of the letter inputs. In other words, these units should develop something like the hand-tuned PMSP input representations. This layer has 5 subgroups of units each with a sliding window onto 3 out of the 7 letter slots. The next hidden layer, which is fully connected to both the first hidden layer and the phonological output, then performs the mapping between orthography and phonology. The phonological representation is our standard 7 slot, vowel-centered, repeating consonant representation described in section 10.2.2.
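The window arithmetic in this paragraph can be checked with a minimal sketch. It assumes the sliding window advances one slot at a time, which is not stated explicitly in the text but is the step size that yields exactly 5 subgroups over 7 slots. The names `NUM_SLOTS`, `WINDOW`, and `sliding_windows` are hypothetical and are not taken from the simulation itself.

```python
NUM_SLOTS = 7  # letter slots in the orthographic input (from the text)
WINDOW = 3     # slots seen by each Ortho_Code subgroup (from the text)


def sliding_windows(num_slots=NUM_SLOTS, window=WINDOW):
    """Return the 0-based slot indices covered by each window.

    Assumption: the window moves by one slot per subgroup.
    """
    return [list(range(start, start + window))
            for start in range(num_slots - window + 1)]


windows = sliding_windows()
for group, slots in enumerate(windows):
    print(f"subgroup {group}: slots {slots}")
```

Under this assumption, each interior slot is seen by several subgroups, which is what allows a unit to respond to a given letter in any of its 3 slots.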

We used the PMSP corpus of nearly 3,000 monosyllabic words to train this network, with each word presented according to the square root of its actual fre-

10.4.1 Basic Properties of the Model

The basic structure of the model is illustrated in figure 10.13. The orthographic input is presented as a string of contiguous letter activities across 7 letter slots. Although distinct letter-level input representations are used for convenience, these could be any kind of visual features associated with letters. Within each slot, there are 26 units each representing a different letter plus an additional “space” unit.¹ Words were presented in all of the positions that allowed the entire word to fit (without wraparound). For example, the word think was presented starting at the first, second, or third slot.
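The input scheme described above can be illustrated with a short sketch: 7 slots of 27 units each (26 letters plus a "space" unit), with a word placed at every starting slot that lets it fit without wraparound. The function names `valid_starts` and `encode` are hypothetical, and leaving unused slots all-zero (rather than activating the space unit) is an assumption that follows the footnote's remark that the space unit was not used in this simulation.

```python
import string

NUM_SLOTS = 7                       # letter slots (from the text)
ALPHABET = string.ascii_lowercase   # 26 letter units per slot
UNITS_PER_SLOT = len(ALPHABET) + 1  # plus one "space" unit, left inactive here


def valid_starts(word, num_slots=NUM_SLOTS):
    """0-based starting slots at which the whole word fits without wraparound."""
    return list(range(num_slots - len(word) + 1))


def encode(word, start, num_slots=NUM_SLOTS):
    """Return a num_slots x UNITS_PER_SLOT binary pattern for word at start."""
    if start < 0 or start + len(word) > num_slots:
        raise ValueError("word does not fit at this starting slot")
    pattern = [[0] * UNITS_PER_SLOT for _ in range(num_slots)]
    for offset, letter in enumerate(word):
        pattern[start + offset][ALPHABET.index(letter)] = 1
    return pattern


starts = valid_starts("think")  # -> [0, 1, 2], i.e. first, second, or third slot
patterns = [encode("think", s) for s in starts]
```

A 5-letter word in 7 slots has 7 - 5 + 1 = 3 placements, matching the example of think in the text.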

¹ This was not used in this simulation, but is present for generality and was used in other related simulations.
