
The grid log shows the activity of the input and hidden units during the first event (input is present) and the second event (input is removed). You should see that when the two features are active in the input, this activates the appropriate hidden units corresponding to the distributed representation of television. However, when the input is subsequently removed, the activation does not remain concentrated in the two features, but spreads to the other feature (figure 9.19). Thus, it is impossible to determine which item was originally present. This spread occurs because all the units are interconnected.

Perhaps the problem is that the weights are all exactly the same for all the connections, which is not likely to be true in the brain.
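The spread can be reproduced in a few lines. The sketch below is not the simulator's network: it assumes, hypothetically, that the three feature units (monitor, speakers, keyboard) excite one another directly through a single shared weight, and the weight, threshold, and gain values are illustrative choices. Starting from the pattern for television (monitor and speakers) or for terminal (monitor and keyboard, a pairing assumed here), the units settle into the same state once the input is gone, so the final activity no longer identifies the item.

```python
import math

# Hypothetical toy network: three feature units that excite one another
# directly with ONE shared weight (a stand-in for the simulator's
# interconnected input and hidden units). All values are illustrative.
FEATURES = ("monitor", "speakers", "keyboard")
WEIGHT = 1.0      # identical weight on every connection
THRESHOLD = 0.5   # net input needed to start turning a unit on
GAIN = 4.0        # steepness of the sigmoid activation function


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def settle_without_input(start, steps=50):
    """Remove the input and let recurrent excitation update all units together."""
    acts = list(start)
    for _ in range(steps):
        total = sum(acts)
        # Each unit sums the activity of every OTHER unit (no self-connection).
        acts = [sigmoid(GAIN * (WEIGHT * (total - a) - THRESHOLD)) for a in acts]
    return acts


television = settle_without_input([1.0, 1.0, 0.0])  # monitor + speakers
terminal = settle_without_input([1.0, 0.0, 1.0])    # monitor + keyboard (assumed pairing)

for name, acts in (("television", television), ("terminal", terminal)):
    print(name, {f: round(a, 3) for f, a in zip(FEATURES, acts)})
```

Because every connection has the same weight, the unit that is off receives input from two active units while each active unit receives input from only one, so the inactive feature is pulled up until all three match.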

TV

Synth

Terminal

Hidden2

Monitor

Speakers

Keyboard

Hidden

Input

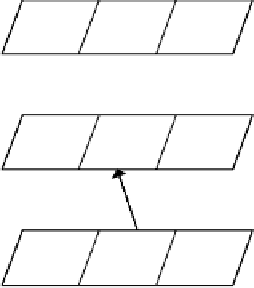

Figure 9.20:

Network for exploring active maintenance, with

higher-order units in

Hidden2

that create mutually reinforc-

ing attractors.

Set the wt_mean parameter in the control panel to .5 (to make room for more variance) and then try a range of wt_var values (e.g., .1, .25, .4). Be sure to do multiple runs with each variance level.
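The weight-variance exploration can be mimicked with a toy three-feature network. In this sketch, each feature-to-feature weight is drawn from a normal distribution with mean wt_mean and variance wt_var and then clipped to [0, 1]; treating wt_var as a variance (rather than a standard deviation or uniform range) and the clipping are assumptions, as are the gain, threshold, margin, and run count. maintenance_rate is a hypothetical helper that reports, across several random seeds, how often monitor and speakers stay clearly more active than keyboard after the input is removed.

```python
import math
import random

# Hypothetical unit parameters for a toy three-feature network; not taken
# from the simulator.
GAIN = 4.0
THRESHOLD = 0.25


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def random_weights(rng, wt_mean, wt_var, n=3):
    """Normal(wt_mean, sqrt(wt_var)) weights clipped to [0, 1]; no self-connections."""
    sd = math.sqrt(wt_var)  # assumes wt_var is a variance
    return [
        [0.0 if i == j else min(1.0, max(0.0, rng.gauss(wt_mean, sd))) for j in range(n)]
        for i in range(n)
    ]


def settle(weights, acts, steps=100):
    """Update every unit from its weighted input after the input is removed."""
    for _ in range(steps):
        acts = [
            sigmoid(GAIN * (sum(w * a for w, a in zip(row, acts)) - THRESHOLD))
            for row in weights
        ]
    return acts


def maintenance_rate(wt_var, wt_mean=0.5, runs=20, margin=0.1):
    """Fraction of runs where monitor and speakers (units 0 and 1) stay at least
    `margin` more active than keyboard (unit 2) once the input is gone."""
    kept = 0
    for seed in range(runs):
        rng = random.Random(seed)  # a different weight draw for each run
        acts = settle(random_weights(rng, wt_mean, wt_var), [1.0, 1.0, 0.0])
        if min(acts[0], acts[1]) - acts[2] > margin:
            kept += 1
    return kept / runs


for wt_var in (0.1, 0.25, 0.4):
    print(f"wt_var={wt_var}: pattern kept in {maintenance_rate(wt_var):.0%} of runs")
```

Averaging over many seeds per variance level mirrors the advice to do multiple runs: a single draw of weights says little about how reliable maintenance is at that level.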

Question 9.9
Describe what happened as you increased the amount of variance. Were you able to achieve reliable maintenance of the input pattern?

The activation spread in this network occurs because the units do not mutually reinforce a particular activation state (i.e., there is no attractor): each unit participates in multiple distributed patterns, and thus supports each of these different patterns equally. Although distributed representations are defined by this property of units participating in multiple representations, this network represents an extreme case. To make attractors in this network, we can introduce higher-order representations within the distributed patterns of connectivity.

A higher-order representation in the environment we have been exploring would be something like a television unit that is interconnected with the monitor and speakers features. It is higher-order because it joins together these two lower-level features and indicates that they go together. Thus, when monitor and speakers are active, they will preferentially activate television, which will in turn preferentially activate these two feature units. This will form a mutually reinforcing attractor that should be capable of active maintenance.

To test out this idea, set net_type to HIGHER_ORDER instead of DISTRIBUTED, and Apply. A new network window will appear.

You can see that this network has an additional hidden layer with the three higher-order units corresponding to the different pairings of features (figure 9.20).

First hit Defaults to restore the original weight parameters, and then do a Run with this network.

You should observe that indeed it is capable of maintaining the information without spread. Thus, to the extent that the network can develop distributed representations that have these kinds of higher-order constraints in them, one might be able to achieve active maintenance without spread. Indeed, given the multilayered nature of the cortex (see chapter 3), it is likely that distributed representations will have these kinds of higher-order constraints.

To this point, we have neglected a very important property of the brain: noise. All of the ongoing activity in the brain, together with the somewhat random timing of individual spikes of activation, produces a background of noise that we have not included in this simulation. Although we generally assume this noise to be present and have specifically introduced it when necessary, we have not included it in most simulations because it slows everything down and typically does not significantly change the basic behavior of the models. However, it is essential to take noise into account in the context of active maintenance because noise tends to accumulate over time and degrade the quality of maintenance.
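A minimal sketch of the higher-order idea follows. It assumes three feature units and three higher-order units, one per pairing: television (monitor + speakers) as described in the text, plus terminal (monitor + keyboard) and synth (speakers + keyboard), pairings inferred from the unit labels in figure 9.20. A higher-order unit turns on only when both of its features are active, and one active higher-order unit is enough to keep its features on, which is what makes the pattern self-sustaining. The thresholds, gain, clamping duration, and the hypothetical noise_sd parameter (Gaussian noise added to every net input, a stand-in for the background noise discussed in this section) are illustrative and do not reproduce the simulator's activation or inhibition functions.

```python
import math
import random

# Toy version of the HIGHER_ORDER network. Unit indices: 0 = monitor,
# 1 = speakers, 2 = keyboard. The TV pairing comes from the text; the
# Terminal and Synth pairings are inferred from the figure labels.
HIGHER = {"TV": (0, 1), "Terminal": (0, 2), "Synth": (1, 2)}
GAIN = 6.0
HIGHER_THRESHOLD = 1.5   # a higher-order unit needs BOTH of its features
FEATURE_THRESHOLD = 0.5  # one active higher-order unit can hold a feature on
CLAMP_STEPS = 5          # number of steps the input stays present


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def maintain(present, steps=100, noise_sd=0.0, seed=0):
    """Present the features in `present`, remove them, and return the feature
    activations after `steps` updates. Gaussian noise with standard deviation
    `noise_sd` is added to every unit's net input on every step."""
    rng = random.Random(seed)
    feats = [1.0 if i in present else 0.0 for i in range(3)]
    for step in range(steps):
        # Higher-order units respond to conjunctions of feature activity.
        higher = {
            name: sigmoid(GAIN * (feats[i] + feats[j] - HIGHER_THRESHOLD
                                  + rng.gauss(0.0, noise_sd)))
            for name, (i, j) in HIGHER.items()
        }
        if step < CLAMP_STEPS:
            continue  # input still present: features stay clamped
        # Each feature is driven by the higher-order units that contain it.
        feats = [
            sigmoid(GAIN * (sum(higher[n] for n, pair in HIGHER.items() if f in pair)
                            - FEATURE_THRESHOLD + rng.gauss(0.0, noise_sd)))
            for f in range(3)
        ]
    return feats


print("TV, no noise:      ", [round(a, 2) for a in maintain((0, 1))])
print("Terminal, no noise:", [round(a, 2) for a in maintain((0, 2))])
print("TV, noise_sd=0.3:  ", [round(a, 2) for a in maintain((0, 1), noise_sd=0.3, seed=1)])
```

Without noise, either presented pair is held after the input is removed and the third feature stays low. Raising noise_sd and the number of steps gives a way to probe how accumulating noise erodes such an attractor; the sketch makes no claim about the noise level at which that happens.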
