
The reaction time to detect objects is measured by stopping settling whenever the target output (object 2) rises above an activity of .6 (if this doesn't happen, settling stops after 200 cycles).
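The stopping rule can be sketched in Python as below. This is a minimal illustration, not the simulator's code. Only the .6 threshold and the 200-cycle limit come from the text. The activation update (a leaky approach toward a fixed net input with rate dt), the optional Gaussian noise, and the names settle, THRESHOLD and MAX_CYCLES are assumptions made for the example.

```python
import random

# Values taken from the text; everything else below is a simplifying assumption.
THRESHOLD = 0.6   # settling stops once the target output exceeds this activity
MAX_CYCLES = 200  # ...or after this many cycles, whichever comes first


def settle(net_input, dt=0.05, noise_sd=0.0, rng=None):
    """Return the number of cycles until a single hypothetical target output
    unit exceeds THRESHOLD, or MAX_CYCLES if it never does.

    Assumed dynamics: activity moves a fraction `dt` of the way toward
    `net_input` on each cycle (smaller dt = slower settling), plus optional
    Gaussian noise, and is clipped to the range [0, 1].
    """
    if rng is None:
        rng = random.Random(0)  # fixed seed so noisy runs are reproducible
    act = 0.0
    for cycle in range(1, MAX_CYCLES + 1):
        act += dt * (net_input - act)
        if noise_sd > 0:
            act += rng.gauss(0.0, noise_sd)
        act = min(max(act, 0.0), 1.0)
        if act > THRESHOLD:
            return cycle
    return MAX_CYCLES


if __name__ == "__main__":
    print(settle(1.0))  # 18 cycles with the default dt and no noise
    print(settle(2.0))  # a stronger input crosses threshold sooner: 7 cycles
    print(settle(0.5))  # never exceeds .6, so the 200-cycle limit applies
```

Under these assumptions, a stronger net input to the target output crosses .6 in fewer cycles, which is the sense in which cycles-to-threshold can stand in for reaction time.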

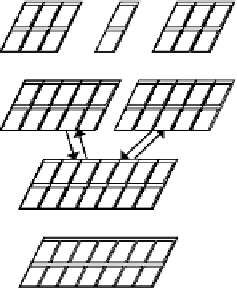

The spatial processing pathway has a sequence of two layers of spatial representations, differing in the level of spatial resolution. As in the object pathway, each unit in the spatial pathway represents 3 adjacent spatial locations, but unlike the object pathway, these units are not sensitive to particular features. Two units per location provide distributed representations in both layers of the spatial pathway. This redundancy will be useful for demonstrating the effects of partial damage to this pathway.
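One way to see why two units per location provide useful redundancy is to count how many spatial units still respond to each location after some are removed. The Python sketch below is illustrative only: it assumes 7 locations (matching the V1 array), gives each unit a receptive field of its own location plus its two neighbors (clipped at the edges), and uses hypothetical names (receptive_fields, coverage). The actual layer sizes and connectivity in the simulator may differ.

```python
N_LOCATIONS = 7         # assumed to match the 7-location V1 array
UNITS_PER_LOCATION = 2  # two units per location, as in both spatial layers


def receptive_fields(n_locations=N_LOCATIONS, per_loc=UNITS_PER_LOCATION):
    """Map each hypothetical spatial unit, keyed by (location, copy), to the
    3 adjacent locations it responds to (fewer at the edges of the array)."""
    fields = {}
    for loc in range(n_locations):
        covered = [pos for pos in (loc - 1, loc, loc + 1) if 0 <= pos < n_locations]
        for copy in range(per_loc):
            fields[(loc, copy)] = covered
    return fields


def coverage(fields, lesioned=(), n_locations=N_LOCATIONS):
    """Count the surviving (non-lesioned) units that respond to each location."""
    counts = [0] * n_locations
    for unit, locs in fields.items():
        if unit in lesioned:
            continue
        for pos in locs:
            counts[pos] += 1
    return counts


fields = receptive_fields()
print(coverage(fields))                             # intact: [4, 6, 6, 6, 6, 6, 4]
print(coverage(fields, lesioned={(3, 0)}))          # [4, 6, 5, 5, 5, 6, 4]
print(coverage(fields, lesioned={(3, 0), (3, 1)}))  # [4, 6, 4, 4, 4, 6, 4]
```

In this toy arrangement, removing one or even both units centered on a location weakens, but does not abolish, the representation of that location, because overlapping neighbors still cover it.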

Figure 8.25: The simple spatial attention model (labeled layers: Input/V1 with Object 1 (Cue) and Object 2 (Target) rows, Obj1, Obj2, Output, Spat1, Spat2).

Locate the attn_ctrl overall control panel.

This control panel contains a number of important parameters, most of which are wt_scale values that determine the relative strength of various pathways within the network (all other pathways have a default strength of 1). As discussed in chapter 2, the connection strengths can be uniformly scaled by a normalized multiplicative factor, called wt_scale in the simulator. We set these weight scale parameters to determine the relative influence of the two pathways on each other, and the balance of bottom-up (stimulus-driven) versus top-down (attentional) processing.

As emphasized in figure 8.23, the spatial pathway influences the object pathway relatively strongly (as determined by the spat_obj parameter, with a value of 2), whereas the object pathway influences the spatial pathway with a strength of only .5. Also, the spatial system will be responsive to bottom-up inputs from V1 because the v1_spat parameter, with a value of 2, makes the V1 to Spat1 connections relatively strong. This strength allows for effective shifting of attention (as also emphasized in figure 8.23). Finally, the other two parameters in the control panel show that we are using relatively slow settling and adding some noise to the processing to simulate the performance of human subjects.
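The effect of these relative strengths can be illustrated with a simplified Python sketch. It assumes that the relative scales of all pathways into a layer are normalized to sum to 1, and that the layer's net input is the correspondingly weighted sum of each pathway's average input; the simulator's actual net-input equation (see chapter 2) may include further normalization terms. The sets of pathways assigned to Obj1 and Spat1 below are likewise hypothetical. Only the strengths come from the text: spat_obj = 2, v1_spat = 2, .5 from the object to the spatial pathway, and 1 for everything else.

```python
def relative_shares(scales):
    """Normalize relative pathway strengths so that they sum to 1."""
    total = sum(scales.values())
    return {name: s / total for name, s in scales.items()}


def net_input(scales, pathway_inputs):
    """Combine each pathway's average weighted input using its normalized share."""
    shares = relative_shares(scales)
    return sum(shares[name] * pathway_inputs[name] for name in shares)


# Hypothetical projections into two layers, using the strengths from the text.
OBJ1_SCALES = {"V1": 1.0, "Spat1": 2.0, "Obj2": 1.0}   # spat_obj = 2
SPAT1_SCALES = {"V1": 2.0, "Obj1": 0.5, "Spat2": 1.0}  # v1_spat = 2, object-to-spatial = .5

print(relative_shares(OBJ1_SCALES))   # {'V1': 0.25, 'Spat1': 0.5, 'Obj2': 0.25}
print(relative_shares(SPAT1_SCALES))  # V1 ~0.571, Obj1 ~0.143, Spat2 ~0.286

# With only the spatial pathway active, Obj1 still gets half its full net input.
print(net_input(OBJ1_SCALES, {"V1": 0.0, "Spat1": 1.0, "Obj2": 0.0}))  # 0.5
```

Because the shares are relative, raising one pathway's scale automatically shrinks the others' shares; under these assumptions V1 supplies about 57% of Spat1's input, which is what keeps the spatial layer strongly stimulus-driven.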

8.5.2 Exploring the Simple Attentional Model

Open the project attn_simple.proj.gz in chapter_8 to begin.

Let's step through the network structure and connectivity, which was completely pre-specified: the network was not trained, and no learning takes place, because it was easier to hand-construct this simple architecture. As you can see, the network resembles figure 8.23, with mutually interconnected Spatial and Object pathways feeding off a V1-like layer that contains a spatially mapped feature array (figure 8.25). In this simple case, we assume that each “object” is represented by a single distinct feature in this array, and also that space is organized along a single dimension. Thus, the first row of units represents the first object's feature (which serves as the cue stimulus) in each of 7 locations, and the second row represents the second object's feature (which serves as the target) in the same 7 locations.
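The input layout just described can be sketched as a small 2 x 7 array. In this Python illustration, the function name v1_pattern, the use of 1.0 for an active feature and 0.0 otherwise, and the option of presenting the cue and target together or separately are assumptions, not details taken from the simulator's input files.

```python
N_LOCATIONS = 7             # 7 locations along a single spatial dimension
CUE_ROW, TARGET_ROW = 0, 1  # row 0: object 1 (cue) feature; row 1: object 2 (target) feature


def v1_pattern(cue_loc=None, target_loc=None, n_locations=N_LOCATIONS):
    """Return a 2 x n_locations list of activities with the cue feature
    and/or the target feature switched on at the given locations."""
    pattern = [[0.0] * n_locations for _ in range(2)]
    if cue_loc is not None:
        pattern[CUE_ROW][cue_loc] = 1.0
    if target_loc is not None:
        pattern[TARGET_ROW][target_loc] = 1.0
    return pattern


for row in v1_pattern(cue_loc=2):  # cue feature alone at location 2
    print(row)
```

For example, v1_pattern(target_loc=2) would then present the target feature at the same location as that cue, and v1_pattern(target_loc=5) at a different one.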


Now select r.wt in the network window and click on the object and spatial units to see how their connectivity patterns determine their function.

The object processing pathway has a sequence of 3 increasingly spatially invariant layers of representations, with each unit collapsing over 3 adjacent spatial locations of the object-defining feature in the layer below. Note that the highest, fully spatially invariant level of the object pathway plays the role of the output layer and is used for measuring the reaction time to detect objects.
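A quick calculation shows how three layers of 3-location pooling can reach full spatial invariance over 7 locations. The Python sketch assumes that each unit pools 3 adjacent units of the layer below with a step of one location and no padding; the function name pooled_spans and the resulting layer sizes are hypothetical and need not match the units in the actual project.

```python
def pooled_spans(n_locations, n_layers, window=3):
    """For each successive layer, return the (first, last) input locations
    covered by each unit, when every unit pools `window` adjacent units of
    the layer below (stride 1, no padding)."""
    below = [(loc, loc) for loc in range(n_locations)]  # V1: one location per column
    layers = []
    for _ in range(n_layers):
        layer = [
            (below[start][0], below[start + window - 1][1])
            for start in range(len(below) - window + 1)
        ]
        layers.append(layer)
        below = layer
    return layers


for depth, spans in enumerate(pooled_spans(7, 3), start=1):
    print(f"layer {depth}: {len(spans)} unit(s), spans {spans}")
```

Under these assumptions, the spans grow from 3 to 5 to all 7 locations, so a single top-level unit responds to the target feature wherever it appears, which is the role the text assigns to the fully invariant output layer.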


Perceiving Multiple Objects

Although much of the detailed behavioral data we will explore with the model concerns the Posner spatial cueing task, we think the more basic functional motivation for visual attention is to facilitate object recog-
