
of adapting weights going from the context to the hidden layer that can adapt to a fixed (or slowly updating) transformation of the hidden layer representations by the context layer. This point will be important for the possible biological implementation of context.

Although it is not essential that the context layer be a literal copy of the hidden layer, it is essential that the context layer be updated in a very controlled manner. For example, an alternative idea about how to implement a context layer (one that might seem easier to imagine the biology implementing using the kinds of network dynamics described in chapter 3) would be to just have an additional layer of “free” context units, presumably recurrently connected amongst themselves to enable sustained activation over time, that somehow maintain information about prior states without any special copying operation. These context units would instead just communicate with the hidden layer via standard bidirectional connections. However, there are a couple of problems with this scenario.

First, there is a basic tradeoff for these context units. They must preserve information about the prior hidden state as the hidden units settle into a new state with a new input, but then they must update their representations to encode the new hidden state. Thus, these units need to be alternately both stable and updatable, which is not something that generic activation functions do very well, necessitating a special context layer that is updated in a controlled manner rather than “free” context units. Second, even if free context units could strike a balance between stability and updating through their continuous activation trajectory over settling, the error-driven learning procedure (GeneRec) would be limited because it does not take into account this activation trajectory; instead, learning is based on the final activation states. Controlled updating of the context units allows both preserved and updated representations to govern learning. For these reasons, the free context representation does not work, as simulations easily demonstrate.

For most of our simulations, we use a simple copying operation to update the context representations. The equation for the update of a context unit $c_j$ is:

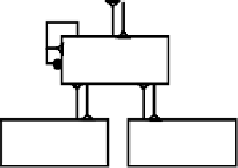

Figure 6.12: Simple recurrent network (SRN), where the context layer is a copy of the hidden layer activations from the previous time step. (Layer labels in the figure: Input, Context, Hidden, Output; the arrow into the context layer is marked “copy.”)

networks. The category that includes both models is known as a simple recurrent network or SRN, which is the terminology that we will use. The context representation in an SRN is contained in a special layer that acts just like another input layer, except that its activity state is set to be a copy of the prior hidden or output unit activity states for the Elman and Jordan nets, respectively (figure 6.12). We prefer the hidden state-based context layer because it gives the network considerable flexibility in choosing the contents of the context representations, by learning representations in the hidden layer. This flexibility can partially overcome the essentially Markovian copying procedure.
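
The copying scheme described above can be sketched in a few lines of plain Python. This is only an illustrative sketch under stated assumptions, not the simulator used in the text: the function names (`run_srn`, `layer`, `random_weights`), the sigmoid activation, the random untrained weights, and the `context_from` switch are all hypothetical choices made here. It shows the one structural point of the paragraph: the context layer is treated as extra input, and after each time step it is overwritten with a copy of either the hidden activations (Elman-style) or the output activations (Jordan-style).

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs, weights):
    """Activations of one layer: sigmoid of the weighted sum, one weight row per unit."""
    return [sigmoid(sum(w * a for w, a in zip(row, inputs))) for row in weights]


def random_weights(n_out, n_in, rng):
    """Hypothetical untrained weights, uniform in [-1, 1]."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]


def run_srn(sequence, n_hidden, n_output, context_from="hidden", seed=0):
    """Run an untrained SRN over a sequence of input vectors (lists of floats).

    context_from="hidden" copies the prior hidden state (Elman-style);
    context_from="output" copies the prior output state (Jordan-style).
    Returns one (hidden, output, context_used) tuple per time step.
    """
    rng = random.Random(seed)
    n_input = len(sequence[0])
    n_context = n_hidden if context_from == "hidden" else n_output
    w_hid = random_weights(n_hidden, n_input + n_context, rng)
    w_out = random_weights(n_output, n_hidden, rng)

    context = [0.0] * n_context  # assumption: context starts at zero
    trace = []
    for x in sequence:
        # The context layer acts just like another input layer.
        hidden = layer(x + context, w_hid)
        output = layer(hidden, w_out)
        trace.append((hidden, output, context))
        # Controlled update: overwrite the context with a literal copy.
        context = list(hidden if context_from == "hidden" else output)
    return trace


if __name__ == "__main__":
    seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0]]
    for step, (hidden, output, context) in enumerate(run_srn(seq, 3, 2)):
        print(step, [round(c, 3) for c in context], [round(h, 3) for h in hidden])
```

In this sketch the first and third inputs are identical, yet the hidden states differ because the context differs, which is how the network can distinguish the same input at different points in a sequence.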

We adopt the basic SRN idea as our main way of dealing with sequential tasks. Before exploring the basic SRN, a couple of issues must be addressed. First, the nature of the context layer representations and their updating must be further clarified from a computational perspective. Then we need to examine the biological plausibility of such representations. We then explore a simulation of the basic SRN.

6.6.1 Computational Considerations for Context Representations

In the standard conception of the SRN, the context layer is a literal copy of the hidden layer. Although this is computationally convenient, it is not necessary for the basic function performed by this layer. Instead, the context layer can be any information-preserving transformation of the hidden layer, because there is a set

$$c_j(t) = fm_{hid}\, h_j(t-1) + fm_{prv}\, c_j(t-1) \tag{6.2}$$

where the parameter $fm_{hid}$ (meaning “from hidden,” a
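
As a rough illustration of the update in equation 6.2, the following sketch applies it to a whole context layer at once. Several things here are assumptions rather than content from the text: the function name `update_context` is hypothetical, $h_j$ is taken to be the hidden unit paired with context unit $c_j$, `fm_prv` is read as the weight on the context unit's own previous value, and the parameter values in the usage lines are arbitrary examples rather than recommended settings.

```python
def update_context(context_prev, hidden_prev, fm_hid, fm_prv):
    """Apply c_j(t) = fm_hid * h_j(t-1) + fm_prv * c_j(t-1) to every unit j.

    context_prev: context activations c_j(t-1)
    hidden_prev:  hidden activations h_j(t-1), one per context unit
    fm_hid:       weight on the previous hidden activation ("from hidden")
    fm_prv:       weight on the previous context activation (assumed meaning)
    """
    if len(context_prev) != len(hidden_prev):
        raise ValueError("context and hidden layers must have the same size")
    return [fm_hid * h + fm_prv * c for h, c in zip(hidden_prev, context_prev)]


if __name__ == "__main__":
    context = [0.0, 1.0]
    hidden = [1.0, 0.0]
    # fm_hid = 1, fm_prv = 0 reduces to the literal copy of the hidden layer.
    print(update_context(context, hidden, fm_hid=1.0, fm_prv=0.0))  # [1.0, 0.0]
    # Illustrative equal weights blend the hidden state with the old context.
    print(update_context(context, hidden, fm_hid=0.5, fm_prv=0.5))  # [0.5, 0.5]
```

With `fm_prv` above zero, each context unit retains a decaying trace of earlier hidden states instead of only the immediately preceding one.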
