
[Figure 6.11 panels: a) Family Trees Initial; b) Family Trees Trained. Each panel is a cluster plot of hidden unit patterns, with leaves labeled by family member name and X and Y axes.]

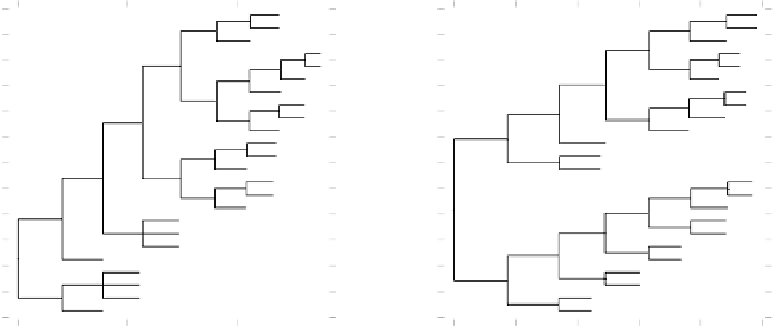

Figure 6.11: Cluster plot of hidden unit representations: a) Prior to learning. b) After learning to criterion using combined Hebbian and error-driven learning. The trained network has two branches corresponding to the two different families, and the clusters within each branch are organized generally according to generation.
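The caption does not say how the cluster plot is computed. A common way to build such a plot is agglomerative hierarchical clustering of the hidden activation vectors: repeatedly merge the two closest clusters, so that tightly grouped patterns join early and the largest branches (here, the two families) join last. The sketch below assumes Euclidean distance and average linkage, which may not match what PDP++ actually uses; the function name `average_linkage` and the toy vectors and labels are hypothetical, invented purely for illustration.

```python
import math
from itertools import combinations


def euclidean(a, b):
    """Euclidean distance between two equal-length activation vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def average_linkage(patterns, labels):
    """Naive agglomerative clustering with average linkage (an assumed choice).

    Returns the merges in order as (cluster_a, cluster_b, distance), where each
    cluster is a frozenset of labels. The last merge joins the two top-level
    branches of the resulting tree.
    """
    # Start with every pattern in its own cluster.
    clusters = {frozenset([lab]): [vec] for lab, vec in zip(labels, patterns)}
    merges = []
    while len(clusters) > 1:
        best = None
        for a, b in combinations(clusters, 2):
            # Average distance over all cross-cluster pairs of members.
            dists = [euclidean(u, v) for u in clusters[a] for v in clusters[b]]
            d = sum(dists) / len(dists)
            if best is None or d < best[2]:
                best = (a, b, d)
        a, b, d = best
        # Replace the two closest clusters with their union.
        clusters[a | b] = clusters.pop(a) + clusters.pop(b)
        merges.append((a, b, d))
    return merges


# Hypothetical 2-D "hidden patterns" for two members of each of two families.
patterns = [(0.0, 0.0), (0.5, 0.2), (10.0, 10.0), (10.3, 9.8)]
labels = ["fam1_a", "fam1_b", "fam2_a", "fam2_b"]

for a, b, d in average_linkage(patterns, labels):
    print(sorted(a), "+", sorted(b), f"at distance {d:.2f}")
```

Within-family pairs merge first at small distances, and the final, much larger merge joins the two family branches, which is the kind of two-branch structure the trained panel shows.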

Go to the PDP++Root window. To continue on to the next simulation, close this project first by selecting .projects/Remove/Project_0. Or, if you wish to stop now, quit by selecting Object/Quit.

6.5 Sequence and Temporally Delayed Learning

[Note: The remaining sections in the chapter are required for only a subset of the models presented in the second part of the book, so it is possible to skip them for the time being, returning later as necessary to understand the specific models that make use of these additional learning mechanisms.]

So far, we have only considered relatively static, discrete kinds of tasks, where a given output pattern (response, expectation, etc.) depends only on the given input pattern. However, many real-world tasks have dependencies that extend over time. An obvious example is language, where the meaning of this sentence, for example, depends on the sequence of words within the sentence. In spoken language, the words themselves are constructed from a temporally extended sequence of distinct sound patterns or phonemes. There are many other examples, including most daily tasks (e.g., making breakfast, driving to work). Indeed, it would seem that sequential tasks of one sort or another are the norm, not the exception.

We consider three categories of temporal dependency: sequential, temporally delayed, and continuous trajectories. In the sequential case, there is a sequence of discrete events, with some structure (grammar) to the sequence that can be learned. This case is concerned with just the order of events, not their detailed timing.

In the temporally delayed case, there is a delay between antecedent events and their outcomes, and the challenge is to learn causal relationships despite these delays. For example, one often sees lightning several seconds before the corresponding thunder is heard (or smoke before the fire, etc.). The rewards of one's labors, for another example, are often slow in coming (e.g., the benefits of a college degree, or the payoff from a financial investment). One important type of learning that has been applied to temporally delayed problems is called reinforcement learning, because it is based on the idea that temporally delayed reinforcement can be propagated backward in time to update the association between earlier antecedent states and their likelihood of causing subsequent reinforcement.
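The passage describes the idea of propagating delayed reinforcement backward without committing to a particular algorithm. One standard realization is tabular temporal-difference learning, TD(0), sketched here under stated assumptions: a deterministic chain of hypothetical states (think of a lightning flash followed, several steps later, by thunder) with reward delivered only on the final transition. Each update moves V(s) toward r + γ·V(s'), so the value of the eventual outcome spreads backward, initially one state per episode, to earlier antecedent states. The function name `td0_chain` and the values of `alpha`, `gamma`, and `episodes` are illustrative choices, not taken from the text.

```python
def td0_chain(n_states=5, reward=1.0, alpha=0.1, gamma=0.9, episodes=500):
    """Tabular TD(0) on a hypothetical chain 0 -> 1 -> ... -> n_states-1 -> end.

    Reward arrives only on the last transition, so earlier states must learn
    their value from the predictions of later states (bootstrapping).
    """
    values = [0.0] * n_states
    for _ in range(episodes):
        for s in range(n_states):
            last = s == n_states - 1
            r = reward if last else 0.0
            # The terminal "end" state has value 0 by definition.
            next_value = 0.0 if last else values[s + 1]
            # TD error: how much better or worse things turned out than predicted.
            td_error = r + gamma * next_value - values[s]
            values[s] += alpha * td_error
    return values


values = td0_chain()
print([round(v, 3) for v in values])  # → [0.656, 0.729, 0.81, 0.9, 1.0]
```

With a discount of 0.9, the learned values fall off geometrically with distance from the reward (approaching γ raised to the number of remaining steps), so even the earliest antecedent state comes to predict the delayed reinforcement, only more weakly.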
