
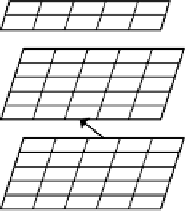


act in a positive way to produce better overall learning. In the next section, we will see that although bidirectional connectivity can sometimes cause problems, for example with generalization, these problems can be remedied by using the other principles (especially Hebbian learning and inhibitory competition). The subsequent section explores the interactions of error-driven and Hebbian learning in deep networks. The explorations of cognitive phenomena in the second part of the book build upon these basic foundations, and provide a richer understanding of the various implications of these core principles.

Figure 6.3: Network for the model-and-task exploration (layers: Input, Hidden, Output; output units labeled H0-H4 and V0-V4). The output units are trained to represent the existence of a line of a particular orientation in a given location, as indicated by the labels (e.g., H0 means horizontal in position 0 (bottom)).
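The labeling scheme in the caption can be made concrete with a small sketch. It assumes a 5x5 input grid with row 0 at the bottom and column 0 at the left; the grid size, the column convention, and the helper name `make_pattern` are illustrative assumptions, not details given in the text.

```python
# Sketch of the input/target encoding suggested by Figure 6.3.
# Assumptions (not from the text): a 5x5 grid, row 0 = bottom, column 0 = left.
SIZE = 5
LABELS = [f"H{i}" for i in range(SIZE)] + [f"V{i}" for i in range(SIZE)]

def make_pattern(lines):
    """Return (grid, target) for a set of line labels such as {"H0", "V3"}."""
    grid = [[0] * SIZE for _ in range(SIZE)]  # grid[row][col], row 0 = bottom
    for label in lines:
        orientation, pos = label[0], int(label[1:])
        for i in range(SIZE):
            if orientation == "H":
                grid[pos][i] = 1   # horizontal line fills one row
            else:
                grid[i][pos] = 1   # vertical line fills one column
    # One output unit per label: 1 if that line is present, else 0.
    target = [1 if label in lines else 0 for label in LABELS]
    return grid, target

grid, target = make_pattern({"H0", "V3"})
for row in reversed(grid):  # print the top row first so H0 appears at the bottom
    print("".join("#" if v else "." for v in row))
print(target)
```

Each output unit answers one question (is this particular line present?), so a pattern containing two lines turns on exactly two output units.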

6.3 Generalization in Bidirectional Networks

One benefit of combining task and model learning in bidirectionally connected networks comes in generalization. Generalization is the ability to treat novel items systematically based on prior learning involving similar items. For example, people can pronounce the nonword “nust,” which they've presumably never heard before, by analogy with familiar words like “must,” “nun,” and so on. This ability is central to an important function of the cortex: encoding the structure of the world so that an organism can act appropriately in novel situations. Generalization has played an important role in the debate about the extent to which neural networks can capture the regularities of the linguistic environment, as we will discuss in chapter 10. Generalization has also been a major focus in the study of machine learning (e.g., Weigend, Rumelhart, & Huberman, 1991; Wolpert, 1996b, 1996a; Vapnik & Chervonenkis, 1971).

In chapter 3, we briefly discussed how networks typically generalize in the context of distributed representations. The central idea is that if a network forms distributed internal representations that encode the compositional features of the environment in a combinatorial fashion, then novel stimuli can be processed successfully by activating the appropriate novel combination of representational (hidden) units. Although the combination is novel, the constituent features are familiar and have been trained to produce or influence appropriate outputs, such that the novel combination of features should also produce a reasonable result. In the domain of reading, a network can pronounce nonwords correctly because it represents the pronunciation consequences of each letter using different units that can easily be recombined in novel ways for nonwords (as we will see in chapter 10).
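The contrast between combinatorial and non-combinatorial codes can be illustrated with a toy sketch. It uses hand-wired detectors rather than learned weights, and it assumes a 5x5 grid of horizontal and vertical lines presented two at a time. The grid size, the held-out split, and the names `componential` and `holistic` are all illustrative assumptions. The componential code has one unit per line; the holistic code has one unit per memorized training pattern.

```python
from itertools import combinations

SIZE = 5  # assumed grid size
LABELS = [f"H{i}" for i in range(SIZE)] + [f"V{i}" for i in range(SIZE)]

def pixels(label):
    """Set of (row, col) cells covered by one line, e.g. 'H0' or 'V3'."""
    pos = int(label[1:])
    if label[0] == "H":
        return {(pos, c) for c in range(SIZE)}
    return {(r, pos) for r in range(SIZE)}

def render(lines):
    """Input pattern: union of the cells covered by each line."""
    return frozenset().union(*(pixels(l) for l in lines))

def componential(pattern):
    """One hidden unit per line, active only if the whole line is present."""
    return {l for l in LABELS if pixels(l) <= pattern}

pairs = [frozenset(p) for p in combinations(LABELS, 2)]
train, test = pairs[:-5], pairs[-5:]  # hold out five combinations as novel items

# Holistic code: one unit per memorized training pattern (a lookup table).
holistic = {render(p): set(p) for p in train}

comp_correct = sum(componential(render(p)) == set(p) for p in test)
holi_correct = sum(holistic.get(render(p)) == set(p) for p in test)
print(comp_correct, holi_correct)  # prints: 5 0
```

The componential code handles all five held-out combinations because each constituent line is detected independently, while the lookup-style code has nothing to say about a pattern it has never stored. A trained network would fall somewhere between these two extremes.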

We will see in the next exploration that the basic GeneRec network, as derived in chapter 5, does not generalize very well. Interactive networks like GeneRec are dynamic systems, with activation dynamics (e.g., attractors as discussed in chapter 3) that can interfere with the ability to form novel combinatorial representations. The units interact with each other too much to retain the kind of independence necessary for novel recombination (O'Reilly, in press, 1996b).

A solution to these problems of too much interactivity is more systematic attractor structures, for example articulated or componential attractors (Noelle & Cottrell, 1996; Plaut & McClelland, 1993). Purely error-driven GeneRec does not produce such clean, systematic representations, and therefore the attractor dynamics significantly interfere with generalization. As discussed previously, units in an error-driven network tend to be lazy, and just make the minimal contribution necessary to solve the problem. We will see that the addition of Hebbian learning and kWTA inhibitory competition improves generalization by placing important constraints on learning and the development of systematic representations.
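As a rough illustration of what k-winners-take-all (kWTA) competition does, the following sketch places a shared inhibition level between the k-th and (k+1)-th strongest net inputs, so that at most k units stay active. It is a simplified stand-in operating directly on net inputs, not the conductance-based formulation used elsewhere in the text; the function name `kwta` and the placement parameter `q` are assumptions made for this example.

```python
def kwta(net_inputs, k, q=0.25):
    """Simplified k-winners-take-all over a list of net inputs.

    A single inhibition level is placed a fraction q of the way from the
    (k+1)-th strongest input up to the k-th strongest, and each unit's
    activation is whatever remains above that level (assumed rectified-linear).
    """
    if not 0 < k < len(net_inputs):
        raise ValueError("k must satisfy 0 < k < number of units")
    ranked = sorted(net_inputs, reverse=True)
    kth, next_strongest = ranked[k - 1], ranked[k]
    inhibition = next_strongest + q * (kth - next_strongest)
    return [max(0.0, x - inhibition) for x in net_inputs]

acts = kwta([0.9, 0.2, 0.7, 0.4, 0.1], k=2)
print([round(a, 3) for a in acts])  # prints: [0.425, 0.0, 0.225, 0.0, 0.0]
```

Only the two most strongly driven units survive, which forces a sparse code in which each active unit must carry a substantial share of the representation. If the k-th and (k+1)-th inputs are tied, this simplified version leaves fewer than k units active.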
