
that weight (local), or the changes depend on more remote signals (nonlocal). Although both Hebbian and GeneRec error-driven learning can be computed locally as a function of activation signals, error-driven learning ultimately depends on nonlocal error signals to drive weight changes, while Hebbian learning has no such remote dependence.
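The following minimal Python/NumPy sketch makes the locality point concrete. It assumes the CPCA form of Hebbian learning (dw_ij = lr * y_j * (x_i - w_ij)) and the symmetric, CHL-style form of GeneRec (dw_ij = lr * (x_i+ y_j+ - x_i- y_j-)). The function names and the learning rate `lr` are hypothetical. Both functions take only quantities available at the synapse. The difference is that the GeneRec plus-phase activations carry the influence of an output target clamped elsewhere in the network.

```python
import numpy as np

def cpca_hebbian(x, y, w, lr=0.1):
    """CPCA-style Hebbian update: dw_ij = lr * y_j * (x_i - w_ij).

    x: sender activations (n_in,), y: receiver activations (n_out,),
    w: weights (n_in, n_out). Nothing beyond these local values is needed.
    """
    return lr * y[None, :] * (x[:, None] - w)

def generec_chl(x_minus, y_minus, x_plus, y_plus, lr=0.1):
    """Symmetric (CHL-style) GeneRec update: dw_ij = lr * (x+_i y+_j - x-_i y-_j).

    Still computed from local activations, but the plus-phase values differ
    from the minus-phase values only because a target was clamped on some
    (possibly distant) output layer: that is the nonlocal dependence.
    """
    return lr * (np.outer(x_plus, y_plus) - np.outer(x_minus, y_minus))

x = np.array([1.0, 0.0, 1.0])
y = np.array([0.8, 0.2])
w = np.full((3, 2), 0.5)
print(cpca_hebbian(x, y, w))            # nonzero: driven by input correlations alone
print(np.allclose(generec_chl(x, y, x, y), 0.0))  # True: identical phases, no change
```

When the network already produces the target, the two phases coincide and the error-driven update vanishes, while the Hebbian update generally does not.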

We can understand the superior task-learning performance of error-driven learning in terms of this locality difference. It is precisely because error-driven learning has this remote dependence on error signals elsewhere in the network that it is successful at learning tasks: weights throughout the network (even in very early layers, as pictured in figure 6.1) can be adjusted to solve a task that is manifest only in terms of error signals over a possibly quite distant output layer. Thus, all of the weights in the network can work together toward the common goal of solving the task. In contrast, Hebbian learning is so local as to be myopic and incapable of adjusting weights to serve the greater good of correct task performance; all Hebbian learning cares about is the local correlational structure over the inputs to a unit.
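The sketch below illustrates how an output error reaches early weights. It uses standard backpropagation in a small linear network as a stand-in for GeneRec, which is designed to approximate the same error gradients. The layer sizes, the names, and the plain Hebbian product used for comparison are illustrative assumptions and are not taken from the book's simulator.

```python
import numpy as np

rng = np.random.default_rng(0)
W1 = rng.normal(scale=0.5, size=(4, 3))  # input -> hidden (the "early" layer)
W2 = rng.normal(scale=0.5, size=(2, 4))  # hidden -> output

def forward(x):
    h = W1 @ x  # hidden activations (linear, for simplicity)
    o = W2 @ h  # output activations
    return h, o

def hebbian_dW1(x, lr=0.1):
    """Plain Hebbian product for the early weights: post * pre, no target."""
    h, _ = forward(x)
    return lr * np.outer(h, x)

def error_driven_dW1(x, target, lr=0.1):
    """Gradient descent on 0.5 * ||o - target||^2, propagated back to W1.

    The output error has to travel back through W2 to reach the early weights.
    """
    _, o = forward(x)
    delta_out = o - target
    delta_hid = W2.T @ delta_out
    return -lr * np.outer(delta_hid, x)

x = np.array([1.0, 0.0, 1.0])
t_a, t_b = np.array([1.0, 0.0]), np.array([0.0, 1.0])
# Same input, different tasks: the early-layer error-driven update changes.
print(np.allclose(error_driven_dW1(x, t_a), error_driven_dW1(x, t_b)))  # False
```

Changing only the target changes the error-driven update to the first-layer weights, whereas `hebbian_dW1` never receives the target at all. When the output already equals the target, the error-driven update is exactly zero.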

The locality of Hebbian learning mechanisms has its own advantages, however. In many situations, Hebbian learning can directly and immediately begin to develop useful representations by representing the principal correlational structure, without being dependent on possibly remote error signals that have to filter their way back through many layers of representations. With error-driven learning, the problem with all the weights trying to work together is that they often have a hard time sorting out who is going to do what, so that there is too much interdependency. This interdependency can result in very slow learning as it works itself out, especially in networks with many hidden layers (e.g., figure 6.1). Also, error-driven units tend to be somewhat “lazy”: they do only whatever little bit it takes to solve a problem, and nothing more.
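The following sketch illustrates Hebbian learning picking up the principal correlational structure without any error signal. It uses Oja's rule, a normalized Hebbian rule swapped in here for simplicity in place of the CPCA rule, on synthetic correlated two-dimensional inputs. The covariance, learning rate, and sample count are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
# Correlated 2-D inputs: most of the variance lies along the direction (1, 1).
cov = np.array([[1.0, 0.9],
                [0.9, 1.0]])
X = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=5000)

w = rng.normal(scale=0.1, size=2)  # small random initial weights
lr = 0.01
for x in X:
    y = w @ x                      # linear receiving unit
    w += lr * y * (x - y * w)      # Oja's rule: Hebbian term plus weight decay

# Principal eigenvector of the input covariance, for comparison.
eigvals, eigvecs = np.linalg.eigh(cov)
pc1 = eigvecs[:, np.argmax(eigvals)]
alignment = abs(w @ pc1) / np.linalg.norm(w)
print(f"|cos(w, pc1)| = {alignment:.3f}")
```

The weight vector should end up closely aligned with the leading eigenvector of the input covariance, with roughly unit length, using only locally available activity.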

A potentially useful metaphor for the contrast between error-driven and Hebbian learning comes from traditional left-wing versus right-wing approaches to governance. Error-driven learning is like left-wing politics (e.g., socialism) in that it seeks cooperative, organized solutions to overall problems, but it can get bogged down with bureaucracy in trying to ensure that

...

...

...

...

Hebbian

is local

error−driven

is based on

remote errors

...

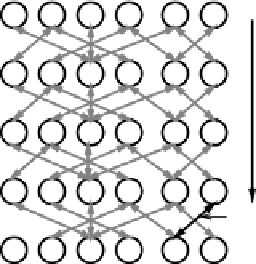

Figure 6.1: Illustration of the different fates of weights within a deep (many-layered) network under Hebbian versus error-driven learning. Hebbian learning is completely local: it is only a function of local activation correlations. In contrast, error-driven learning is ultimately a function of possibly remote error signals on other layers. This difference explains many of their relative advantages and disadvantages, as discussed in the text and table 6.1.

                        Pro                         Con
Hebbian (local)         autonomous, reliable        myopic, greedy
Error-driven (remote)   task-driven, cooperative    co-dependent, lazy

Table 6.1: Summary of the pros and cons of Hebbian and error-driven learning, which can be attributed to the fact that Hebbian learning operates locally, whereas error-driven learning depends on remote error signals.

(2) How might these two forms of learning be used in the cortex? Then, we explore the combined use of Hebbian and error-driven learning in two simulations that highlight the advantages of their combination.
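One simple way to combine the two forms of learning is a weighted mixture of a CPCA-style Hebbian term and a CHL-style GeneRec error term. The sketch below assumes such a mixture, controlled by a hypothetical mixing fraction `k_hebb`. Evaluating the Hebbian term on plus-phase activations is also an assumption of this sketch.

```python
import numpy as np

def combined_dw(x_minus, y_minus, x_plus, y_plus, w, lr=0.01, k_hebb=0.1):
    """Weighted mix of a Hebbian term and an error-driven term.

    k_hebb is a hypothetical mixing fraction: 0 gives pure error-driven
    learning, 1 gives pure Hebbian learning. Shapes: x (n_in,), y (n_out,),
    w (n_in, n_out).
    """
    # Hebbian (CPCA-style) term on plus-phase activity: purely local.
    hebb = y_plus[None, :] * (x_plus[:, None] - w)
    # Error-driven (CHL/GeneRec-style) term: plus-phase minus minus-phase coproducts.
    err = np.outer(x_plus, y_plus) - np.outer(x_minus, y_minus)
    return lr * (k_hebb * hebb + (1.0 - k_hebb) * err)

x = np.array([1.0, 0.0])
y = np.array([0.5])
w = np.array([[0.2], [0.2]])
# Output already correct (phases equal): only the Hebbian share still changes w.
print(combined_dw(x, y, x, y, w, lr=1.0, k_hebb=0.1))
```

With a small `k_hebb`, the error term supplies the task-driven direction that pure Hebbian learning lacks. The Hebbian term keeps shaping the weights even when the error term is zero, which speaks to the “lazy” entry in table 6.1.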

6.2.1 Pros and Cons of Hebbian and Error-Driven Learning

One can understand a number of the relative advantages and disadvantages of Hebbian and error-driven learning in terms of a single underlying property (figure 6.1 and table 6.1). This property is the locality of the learning algorithms: whether the changes to a given weight depend only on the immediate activations surrounding
