
Second, recirculation showed that this difference in the activation of a hidden unit during plus and minus phases ($h_j^+ - h_j^-$) is a good approximation for the difference in the net input to a hidden unit during plus and minus phases, multiplied by the derivative of the activation function. By using the difference-of-activation-states approximation, we implicitly compute the activation function derivative, which avoids the problem in backpropagation where the derivative of the activation function must be explicitly computed. The mathematical derivation of GeneRec begins with the net inputs and explicit derivative formulation, because this can be directly related to backpropagation, and then applies the difference-of-activation-states approximation to achieve the final form of the algorithm (equation 5.32).
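The claim that an activation difference can stand in for a net-input difference times the slope of the activation function can be checked numerically. The sketch below assumes a logistic (sigmoid) activation function and evaluates $\sigma'$ at the midpoint of the two net inputs, since the text does not fix the evaluation point; `sigmoid`, `sigmoid_deriv` and `compare` are illustrative names, not part of GeneRec itself.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation function sigma(eta)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_deriv(x: float) -> float:
    """Slope of the logistic function: sigma'(eta) = sigma(eta) * (1 - sigma(eta))."""
    s = sigmoid(x)
    return s * (1.0 - s)


def compare(eta_minus: float, eta_plus: float) -> tuple[float, float]:
    """Return (h+ - h-, (eta+ - eta-) * sigma'(midpoint)) for one unit.

    Evaluating the slope at the midpoint of the two net inputs is an
    assumption made for this sketch.
    """
    act_diff = sigmoid(eta_plus) - sigmoid(eta_minus)
    mid = 0.5 * (eta_plus + eta_minus)
    approx = (eta_plus - eta_minus) * sigmoid_deriv(mid)
    return act_diff, approx


if __name__ == "__main__":
    # Sweep the size of the plus/minus net-input difference.
    for delta in (0.1, 0.5, 2.0):
        act_diff, approx = compare(0.0, delta)
        print(f"delta={delta}: h+ - h- = {act_diff:.5f}, approx = {approx:.5f}")
```

With a small plus/minus difference the two quantities agree closely; as the difference grows, the curvature of the sigmoid makes the approximation looser.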

Let's first reconsider the equation for the $\delta_j$ variable on the hidden unit in backpropagation (equation 5.30):

$$\delta_j = \left(\sum_k (t_k - o_k)\, w_{jk}\right) h_j (1 - h_j)$$
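This hidden-unit error term is what backpropagation computes explicitly. A minimal Python sketch, assuming sigmoid hidden units and the error form shown above (the helper names `sigmoid` and `hidden_delta` are hypothetical), makes the two steps at issue visible: weighting the output errors backward by $w_{jk}$ and multiplying by the explicit derivative $h_j(1 - h_j)$.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation function sigma(eta)."""
    return 1.0 / (1.0 + math.exp(-x))


def hidden_delta(h_j: float, targets: list[float],
                 outputs: list[float], w_j: list[float]) -> float:
    """Backprop error term delta_j for one hidden unit j.

    w_j[k] is the feedforward weight w_jk from hidden unit j to output unit k.
    """
    # Output errors (t_k - o_k) sent backward, each weighted by w_jk.
    back_err = sum((t - o) * w for t, o, w in zip(targets, outputs, w_j))
    # Multiply by the explicitly computed activation derivative h_j (1 - h_j).
    return back_err * h_j * (1.0 - h_j)


if __name__ == "__main__":
    h_j = sigmoid(0.3)  # hidden activation from an assumed net input of 0.3
    print(hidden_delta(h_j, targets=[1.0, 0.0], outputs=[0.6, 0.2], w_j=[0.5, -0.4]))
```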

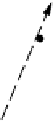

Figure 5.11: The difference in activation states approximates the difference in net input states times the derivative of the activation function: $(h_j^+ - h_j^-) \approx (\eta_j^+ - \eta_j^-)\,\sigma'(\eta_j)$.
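The approximation in the caption is one of two steps GeneRec uses to replace the backpropagated $\delta_j$; the other, under the symmetric-weight assumption $w_{jk} = w_{kj}$, turns the weighted output error into a difference of net inputs from the output units. The sketch below checks both steps for a single hidden unit. It assumes logistic units, an identical bottom-up input in both phases, top-down input from the targets (plus phase) or the produced outputs (minus phase), and a midpoint slope; `generec_check` is a hypothetical helper, not the authors' code.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation function sigma(eta)."""
    return 1.0 / (1.0 + math.exp(-x))


def generec_check(bottom_up: float, targets: list[float],
                  outputs: list[float], w_jk: list[float]) -> dict[str, float]:
    """Compare backprop's error signal with its GeneRec replacements for
    one hidden unit j (hypothetical helper for illustration)."""
    # Symmetric weights: the top-down weight w_kj equals the feedforward w_jk.
    w_kj = list(w_jk)
    # Backprop: output errors sent backward and weighted by w_jk.
    back_err = sum((t - o) * w for t, o, w in zip(targets, outputs, w_jk))
    # Assumed phase net inputs: same bottom-up input, plus top-down input
    # from the targets (plus phase) or the produced outputs (minus phase).
    eta_plus = bottom_up + sum(t * w for t, w in zip(targets, w_kj))
    eta_minus = bottom_up + sum(o * w for o, w in zip(outputs, w_kj))
    # Slope taken at the midpoint of the two net inputs (an assumption).
    mid = 0.5 * (eta_plus + eta_minus)
    slope = sigmoid(mid) * (1.0 - sigmoid(mid))
    return {
        "back_err": back_err,                           # sum_k (t_k - o_k) w_jk
        "eta_diff": eta_plus - eta_minus,               # eta_j^+ - eta_j^-
        "deriv_product": (eta_plus - eta_minus) * slope,
        "act_diff": sigmoid(eta_plus) - sigmoid(eta_minus),  # h_j^+ - h_j^-
    }


if __name__ == "__main__":
    r = generec_check(0.2, targets=[1.0, 0.0], outputs=[0.7, 0.2], w_jk=[0.3, -0.5])
    for name, value in r.items():
        print(f"{name}: {value:.5f}")
```

The bottom-up input cancels in `eta_diff`, which is why only the net input arriving from the output units matters for the error signal.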

Recall the primary aspects of biological implausibility in this equation for $\delta_j$: the passing of the error information on the outputs backward, and the multiplying of this information by the feedforward weights and by the derivative of the activation function.

We avoid the implausible error propagation procedure by converting the computation of error information multiplied by the weights into a computation of the net input to the hidden units. For mathematical purposes, we assume for the moment that our bidirectional connections are symmetric, or $w_{jk} = w_{kj}$. We will see later that the argument below holds even if we do not assume exact symmetry. With $w_{jk} = w_{kj}$:

$$\delta_j = \left(\sum_k (t_k - o_k)\, w_{jk}\right) h_j (1 - h_j) = \left(\sum_k t_k w_{kj} - \sum_k o_k w_{kj}\right) h_j (1 - h_j) = (\eta_j^+ - \eta_j^-)\, h_j (1 - h_j) \tag{5.34}$$

That is, $w_{kj}$ can be substituted for $w_{jk}$, and then this term can be multiplied through $(t_k - o_k)$ to get the difference between the net inputs to the hidden units (from the output units) in the two phases. Bidirectional connectivity thus allows error information to be communicated in terms of net input to the hidden units, rather than in terms of $\delta$s propagated backward and multiplied by the strength of the feedforward synapse.

Next, we can deal with the remaining $h_j(1 - h_j)$ in equation 5.34 by applying the difference-of-activation-states approximation. Recall that $h_j(1 - h_j)$ can be expressed as $\sigma'(\eta_j)$, the derivative of the activation function, so:

$$\delta_j = (\eta_j^+ - \eta_j^-)\, \sigma'(\eta_j) \tag{5.35}$$

This product can be approximated by just the difference of the two sigmoidal activation values computed on these net inputs:

$$\delta_j \approx h_j^+ - h_j^- \tag{5.36}$$

That is, the difference in a hidden unit's activation values is approximately equal to the difference in net inputs times the slope of the activation function. This is illustrated in figure 5.11, where it should be clear that differences along the Y axis are approximately equal to differences along the X axis times the slope of the function that maps X to Y.

As noted previously, this simplification of using differences in activation states has one major advantage (in addition to being somewhat simpler): it eliminates the
