
These activation states are local to the synapse where the weight changes must occur, and we will see below how such weight changes can happen in a biological synapse.

There are a number of important properties of the GeneRec learning rule. First, GeneRec allows an error signal occurring anywhere in the network to be used to drive learning everywhere, which enables many different sources of error signals to be used. In addition, this form of learning is compatible with, and moreover requires, the bidirectional connectivity known to exist throughout the cortex, which is responsible for a number of important computational properties such as constraint satisfaction, pattern completion, and attractor dynamics (chapter 3). Furthermore, the subtraction of activations in GeneRec ends up implicitly computing the derivative of the activation function, which appears explicitly in the original backpropagation equations (in equation 5.24 as $h_j(1 - h_j)$). In addition to a small gain in biological plausibility, this allows us to use any arbitrary activation function (e.g., the point neuron function with kWTA inhibition) without having to explicitly take its derivative. Thus, the same GeneRec learning rule works for virtually any activation function.
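To make the claim about the implicit derivative concrete, here is a small numerical sketch in Python. It assumes a logistic (sigmoid) unit, since that is the function whose derivative appears as $h_j(1 - h_j)$ in equation 5.24, and it uses hypothetical net-input values; it is not the point neuron function used in the simulations. For a small change in net input between the phases, the plain difference of activations closely matches the explicit derivative times that change, and the difference-based function never needs the derivative at all.

```python
import math


def sigmoid(net: float) -> float:
    """Logistic activation; its derivative is y * (1 - y), the h_j(1 - h_j) term."""
    return 1.0 / (1.0 + math.exp(-net))


def phase_difference(f, net_minus: float, net_plus: float) -> float:
    """GeneRec-style signal: plus-phase activation minus minus-phase activation.

    Only the activation function f itself is needed, never its derivative.
    """
    return f(net_plus) - f(net_minus)


def explicit_derivative_term(net_minus: float, d_net: float) -> float:
    """Backprop-style term: explicit sigmoid derivative times the change in net input."""
    y = sigmoid(net_minus)
    return y * (1.0 - y) * d_net


# Hypothetical values: minus-phase net input and a small plus-minus difference.
net_minus = 0.4
d_net = 1e-3

diff = phase_difference(sigmoid, net_minus, net_minus + d_net)
explicit = explicit_derivative_term(net_minus, d_net)
print(f"phase difference : {diff:.8f}")
print(f"y(1 - y) * d_net : {explicit:.8f}")

# The same difference-based code works unchanged for another activation function.
tanh_diff = phase_difference(math.tanh, net_minus, net_minus + d_net)
print(f"tanh phase difference: {tanh_diff:.8f}")
```

The two printed sigmoid values agree to several decimal places; the agreement degrades as the phase difference grows, because the activation difference then also picks up higher-order terms. The `tanh` line illustrates the practical point made above: no derivative had to be written for it.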

The next section presents the derivation of equation 5.32, and the subsequent section introduces a couple of simple improvements to this equation.

o

k

. . .

∆

w

jk

=

h

j

−

(t

k

−o

k

)

h

j

. . .

h

+

−

h

j

−

∆

w

ij

=

(

)

s

i

−

s

i

. . .

External Input

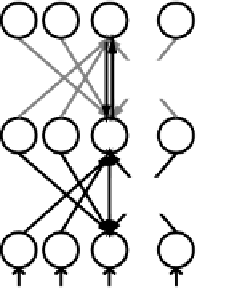

Figure 5.10:

Weight updates computed for the GeneRec al-

gorithm.

phase). In particular, the hidden units need to receive the top-down activation from both the minus- and plus-phase output states to determine their contribution to the output error, as we will see in a moment.

As usual, we will first discuss the final learning rule that the GeneRec derivation produces before deriving the mathematical details. Conveniently, the learning rule is the same for all units in the network, and is essentially just the delta rule:

$$\Delta w_{ij} = \epsilon\,(y_j^+ - y_j^-)\,x_i^- \tag{5.32}$$

for a receiving unit with activation $y_j$ and sending unit with activation $x_i$ in the phases as indicated (figure 5.10), where $\epsilon$ is the learning rate. As usual, the rule for adjusting the bias weights is just the same as for the regular weights, but with the sending unit activation set to 1:

$$\Delta \beta_j = \epsilon\,(y_j^+ - y_j^-) \tag{5.33}$$
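A minimal sketch of equations 5.32 and 5.33 in Python follows. The function name `generec_update`, the `weights[i][j]` layout (sending unit `i` to receiving unit `j`), and the learning-rate value are illustrative assumptions; the activations are taken as already computed by settling in each phase, which the sketch does not model.

```python
from typing import List


def generec_update(
    x_minus: List[float],
    y_minus: List[float],
    y_plus: List[float],
    weights: List[List[float]],
    biases: List[float],
    lrate: float = 0.1,
) -> None:
    """Apply the GeneRec weight (eq. 5.32) and bias (eq. 5.33) updates in place.

    weights[i][j] is the (hypothetical) weight from sending unit i to receiving unit j.
    """
    for j, (yp, ym) in enumerate(zip(y_plus, y_minus)):
        err = yp - ym  # phase difference (y_j^+ - y_j^-)
        for i, x in enumerate(x_minus):
            weights[i][j] += lrate * err * x  # eq. 5.32: sender's minus-phase activation
        biases[j] += lrate * err  # eq. 5.33: sending activation fixed at 1


# Hypothetical example: three senders, two receivers whose plus phase is clamped to a target.
x_minus = [1.0, 0.0, 0.5]
y_minus = [0.2, 0.7]
y_plus = [1.0, 0.0]
weights = [[0.0, 0.0] for _ in range(len(x_minus))]
biases = [0.0, 0.0]

generec_update(x_minus, y_minus, y_plus, weights, biases, lrate=0.1)
print(weights)
print(biases)
```

When the receiving units are output units clamped to the target in the plus phase, as in this example, $y_j^+ - y_j^-$ is just $t_j - o_j$ and the update reduces to the delta rule; for hidden units the same line of code applies, with the phase difference arising from top-down input.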

If you compare equation 5.32 with equation 5.21 from backpropagation ($\Delta w_{ij} = \delta_j x_i$), it should be clear that the phase-based difference in activation states of the receiving unit, $(y_j^+ - y_j^-)$, is equivalent to $\delta_j$ in backpropagation. Thus, the difference between the two phases of activation states is an indication of the unit's contribution to the overall error signal. Interestingly, bidirectional connectivity ends up naturally propagating both parts of this signal throughout the network, so that just computing the difference can drive learning.

5.7.1 Derivation of GeneRec

Two important ideas from the recirculation algorithm allow us to implement backpropagation learning in a more biologically plausible manner. First, as we discussed above, recirculation showed that bidirectional connectivity allows output error to be communicated to a hidden unit in terms of the difference in its activation states during the plus and minus activation phases, $(y_j^+ - y_j^-)$, rather than in terms of the $\delta$'s multiplied by the synaptic weights in the other direction. Bidirectional connectivity thus avoids the problems with backpropagation of computing error information in terms of $\delta$, sending this information backward from dendrites, across synapses, and into axons, and multiplying this information with the strength of that synapse.
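This first idea can be checked numerically with a hypothetical hidden unit $h_j$ connected to two output units. The sketch below is a one-step caricature of settling, not the full GeneRec dynamics: the hidden unit's bottom-up net input is assumed identical in both phases, its top-down input comes from the minus-phase outputs or from the clamped targets through the same (symmetric) weights $w_{jk}$, and it uses a sigmoid activation. All numbers are made up. Under these assumptions the phase difference $h_j^+ - h_j^-$ comes out close to the backpropagation-style term $h_j(1 - h_j) \sum_k w_{jk}(t_k - o_k)$, with the derivative evaluated at the minus-phase activation, even though only activations, not error values, travel through the weights.

```python
import math


def sigmoid(net: float) -> float:
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-net))


# Hypothetical values for one hidden unit j and two output units k.
bottom_up_net = 0.3      # net input to h_j from the input layer, identical in both phases
w_jk = [0.05, -0.04]     # hidden-to-output weights, reused symmetrically for top-down input
o_minus = [0.6, 0.3]     # minus phase: the network's own output activations
target = [1.0, 0.0]      # plus phase: outputs clamped to the target values


def hidden_activation(outputs):
    """Hidden activity from bottom-up input plus top-down input through symmetric weights."""
    top_down = sum(w * o for w, o in zip(w_jk, outputs))
    return sigmoid(bottom_up_net + top_down)


h_minus = hidden_activation(o_minus)
h_plus = hidden_activation(target)
generec_signal = h_plus - h_minus  # only activations, no explicit error propagation

# Backprop-style hidden delta: explicit derivative times output error sent back through w_jk.
output_error = [t - o for t, o in zip(target, o_minus)]
backprop_delta = h_minus * (1.0 - h_minus) * sum(w * e for w, e in zip(w_jk, output_error))

print(f"h+ - h-        : {generec_signal:.6f}")
print(f"backprop delta : {backprop_delta:.6f}")
```

The two numbers agree closely here because the top-down weights are small, so the plus- and minus-phase net inputs differ only slightly; with larger weights or larger errors the correspondence is only approximate.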
