
output layer in this case, but it could be another hidden layer):

(5.31)

5.6.3 The Biological Implausibility of Backpropagation


Unfortunately, despite the apparent simplicity and elegance of the backpropagation learning rule, it seems quite implausible that something like equations 5.21 and 5.24 are computed in the cortex. Perhaps the biggest problem is equation 5.24, which would require in biological terms that the δ value be propagated backward from the dendrite of the receiving neuron, across the synapse, into the axon terminal of the sending neuron, down the axon of this neuron, and then integrated and multiplied by both the strength of that synapse and some kind of derivative, and then propagated back out its dendrites, and so on. As if this were not problematic enough, nobody has ever recorded anything that resembles δ in terms of the electrical or chemical properties of the neuron.
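To make concrete what would have to travel backward, the following is a minimal Python sketch of the standard backpropagation error terms for a small three-layer network of sigmoid units. It is an illustration under assumptions, not a reproduction of equations 5.21 and 5.24: the function name `backprop_deltas`, the weight layout, the example numbers, and the sign convention (δ defined so that a positive value calls for a larger activation) are all chosen here for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def backprop_deltas(s, w_ih, w_ho, t):
    """Forward pass, then error terms sent backward through the weights.

    s    : input activations s_i
    w_ih : w_ih[i][j], input-to-hidden weights (hypothetical layout)
    w_ho : w_ho[j][k], hidden-to-output weights (hypothetical layout)
    t    : target values t_k
    """
    n_in, n_hid, n_out = len(s), len(w_ho), len(t)
    h = [sigmoid(sum(s[i] * w_ih[i][j] for i in range(n_in)))
         for j in range(n_hid)]
    o = [sigmoid(sum(h[j] * w_ho[j][k] for j in range(n_hid)))
         for k in range(n_out)]
    # Output error term: target minus actual output (sign conventions vary).
    delta_o = [t[k] - o[k] for k in range(n_out)]
    # Hidden error term: each output delta travels backward across the
    # weight w_ho[j][k] that carried h[j] forward, is summed, and is then
    # multiplied by the derivative of the hidden activation, h * (1 - h).
    delta_h = [h[j] * (1.0 - h[j])
               * sum(delta_o[k] * w_ho[j][k] for k in range(n_out))
               for j in range(n_hid)]
    return h, o, delta_o, delta_h

# Illustrative values only.
s = [1.0, 0.0]
w_ih = [[0.5, -0.3], [0.2, 0.4]]
w_ho = [[0.6], [-0.4]]
t = [1.0]
h, o, delta_o, delta_h = backprop_deltas(s, w_ih, w_ho, t)
print(delta_o, delta_h)
```

The computation of `delta_h` is the step at issue: it reuses the forward weights in the reverse direction and carries a quantity that is an error term, not an activation.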

As we noted in the introduction, many modelers have chosen to ignore the biological implausibility of backpropagation, simply because it is so essential for achieving task learning, as suggested by our explorations of the difference between Hebbian and delta rule learning. What we will see in the next section is that we can rewrite the backpropagation equations so that error propagation between neurons takes place using standard activation signals. This approach takes advantage of the notion of activation phases that we introduced previously, so that it also ties into the psychological interpretation of the teaching signal as an actual state of experience that reflects something like an outcome or corrected response. The result is a very powerful task-based learning mechanism that need not ignore issues of biological plausibility.


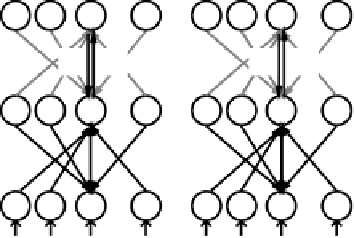

Figure 5.9: Illustration of the GeneRec algorithm, with bidirectional symmetric connectivity as shown. a) In the minus phase, external input is provided to the input units, and the network settles, with some record of the resulting minus phase activation states kept. b) In the plus phase, external input (target) is also applied to the output units in addition to the input units, and the network again settles.
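The two phases described in the caption of figure 5.9 can be sketched in Python as follows. This is a simplified illustration, not the text's implementation: it assumes sigmoid units, synchronous updates, a fixed number of update steps standing in for settling, and a single hidden-to-output weight matrix reused in the top-down direction to stand in for symmetric connectivity. The function name `settle`, its parameters, and the example numbers are hypothetical.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def settle(s, w_ih, w_ho, target=None, n_steps=50):
    """Settle a tiny input -> hidden <-> output network.

    s      : external input activations s_i (clamped in both phases)
    w_ih   : w_ih[i][j], input-to-hidden weights
    w_ho   : w_ho[j][k], hidden-to-output weights, also used top-down
             (assumed symmetric: w_kj equals w_jk)
    target : None for the minus phase; target values t_k for the plus phase
    """
    n_in, n_hid, n_out = len(s), len(w_ho), len(w_ho[0])
    h = [0.5] * n_hid
    # Plus phase: output units are set to the external target values.
    o = list(target) if target is not None else [0.5] * n_out
    for _ in range(n_steps):
        # Hidden units integrate bottom-up and top-down input in both phases.
        h = [sigmoid(sum(s[i] * w_ih[i][j] for i in range(n_in))
                     + sum(o[k] * w_ho[j][k] for k in range(n_out)))
             for j in range(n_hid)]
        if target is None:
            # Minus phase: output units are updated from the network.
            o = [sigmoid(sum(h[j] * w_ho[j][k] for j in range(n_hid)))
                 for k in range(n_out)]
    return h, o

# Illustrative values only.
s = [1.0, 0.0]
w_ih = [[0.5, -0.3], [0.2, 0.4]]
w_ho = [[0.6], [-0.4]]
t = [1.0]

h_minus, o_minus = settle(s, w_ih, w_ho)           # a) minus phase
h_plus, o_plus = settle(s, w_ih, w_ho, target=t)   # b) plus phase

# The recorded states differ only because of the clamped target.
phase_diff = [hp - hm for hp, hm in zip(h_plus, h_minus)]
print(h_minus, h_plus, phase_diff)
```

In this sketch the hidden units receive only ordinary activation signals in both phases, and the difference between their recorded plus-phase and minus-phase states reflects the influence of the target.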

5.7 The Generalized Recirculation Algorithm

An algorithm called recirculation (Hinton & McClelland, 1988) provided two important ideas that enabled backpropagation to be implemented in a more biologically plausible manner. The recirculation algorithm was subsequently generalized from the somewhat restricted case it could handle, resulting in the generalized recirculation algorithm or GeneRec (O'Reilly, 1996a), which serves as our task-based learning algorithm in the rest of the text.

GeneRec adopts the activation phases introduced previously in our implementation of the delta rule. In the minus phase, the outputs of the network represent the expectation or response of the network, as a function of the standard activation settling process in response to a given input pattern. Then, in the plus phase, the environment is responsible for providing the outcome or target output activations (figure 5.9). As before, we use the + superscript to indicate plus-phase variables, and the − superscript to indicate minus-phase variables in the equations below.

It is important to emphasize that the full bidirectional propagation of information (bottom-up and top-down) occurs during the settling in each of these phases, with the only difference being whether the output units are updated from the network (in the minus phase) or are set to the external outcome/target values (in the plus
