Output

Event_2

Event_3

Event_0

Event_1

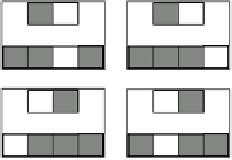

Figure 5.2:

The easy pattern associator task mapping, which

can be thought of as categorizing the first two inputs as “left”

with the left hidden unit, and the next two inputs as “right”

with the right hidden unit.

Input

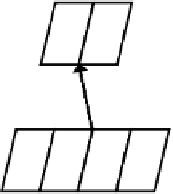

Figure 5.1:

Pattern associator network, where the task is to

learn a mapping between the input and output units.

Open the project pat_assoc.proj.gz in chapter_5 to begin.

You should see in the network window that there are 2 output units receiving inputs from 4 input units through a set of feedforward weights (figure 5.1).

just the weights. This kind of learning has also been labeled as supervised learning, but we will see that there are a multitude of ecologically valid sources of such “supervision” that do not require the constant presence of an omniscient teacher. The multilayer generalization of the delta rule is called backpropagation, which allows errors occurring in a distant layer to be propagated backwards to earlier layers, enabling the development of multiple layers of transformations that make the overall task easier to solve.

Although the original, mathematically direct mechanism for implementing the backpropagation algorithm is biologically implausible, a formulation that uses bidirectional activation propagation to communicate error signals, called GeneRec, is consistent with known properties of LTP/D reviewed in the previous chapter, and is generally quite compatible with the biology of the cortex. It allows error signals occurring anywhere to affect learning everywhere.

Locate the pat_assoc_ctrl control panel, press the View button, and select EVENTS.

As you can see in the environment window that pops up (figure 5.2), the input-output relationships to be learned in this “task” are simply that the leftmost two input units should make the left output unit active, while the rightmost two input units should make the right output unit active. This is a relatively easy task to learn because the left output unit just has to develop strong weights to these leftmost units and ignore the ones to the right, while the right output unit does the opposite. Note that we use kWTA inhibition within the output layer, with a k parameter of 1.
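To make the role of kWTA inhibition in the output layer concrete, here is a minimal Python sketch. It is a deliberately crude stand-in: it simply switches on the most excited output units and silences the rest, rather than reproducing the simulator's actual inhibition function. The function name `kwta_hard`, the example weights, and the one-active-unit input pattern are all assumptions made for illustration.

```python
import numpy as np

def kwta_hard(net_input, k=1):
    """Crude stand-in for kWTA: the k most excited units get activation 1, the rest 0."""
    net_input = np.asarray(net_input, dtype=float)
    act = np.zeros_like(net_input)
    winners = np.argsort(net_input)[-k:]   # indices of the k largest net inputs
    act[winners] = 1.0
    return act

# Hypothetical weights after learning (rows: 4 input units, columns: 2 output units).
# The left output unit (column 0) has strong weights from the two leftmost inputs.
weights = np.array([[0.9, 0.1],
                    [0.9, 0.1],
                    [0.1, 0.9],
                    [0.1, 0.9]])

x = np.array([0.0, 1.0, 0.0, 0.0])   # assumed event: one of the leftmost inputs is active
net = x @ weights                     # net input to each output unit
print(kwta_hard(net, k=1))            # only the left output unit stays active
```

With only one winner allowed, just one of the two output units can be active for any given event, which is what the left/right categorization requires.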

The network is trained on this task by simply clamping both the input and output units to their corresponding values from the events in the environment, and performing CPCA Hebbian learning on the resulting activations.
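The training procedure just described can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the simulator's implementation: it uses the basic CPCA rule, Δw_ij = ε y_j (x_i − w_ij), without any of the further corrections the simulator may apply, and it assumes that each of the 4 events activates a single input unit. The learning rate, the number of epochs, and the initial weight range are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed event patterns: one active input unit per event (hypothetical encoding).
inputs = np.eye(4)
# The first two events map to the left output unit, the last two to the right one.
targets = np.array([[1, 0],
                    [1, 0],
                    [0, 1],
                    [0, 1]], dtype=float)

def cpca_update(w, x, y, lrate=0.01):
    """Basic CPCA Hebbian step: dw_ij = lrate * y_j * (x_i - w_ij)."""
    return w + lrate * y[np.newaxis, :] * (x[:, np.newaxis] - w)

# Weights from 4 input units (rows) to 2 output units (columns), random start.
weights = rng.uniform(0.25, 0.75, size=(4, 2))

for epoch in range(500):
    for i in rng.permutation(4):          # events presented in random order
        # Both layers are clamped: x is the input pattern, y is the target pattern.
        weights = cpca_update(weights, inputs[i], targets[i])

print(np.round(weights, 2))
```

Under these assumptions, the weights into each output unit approach the conditional probability that an input unit is active given that the output unit is active: roughly 0.5 from the two inputs on its own side and close to 0 from the other two.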

5.2 Exploration of Hebbian Task Learning

This exploration is based on a very simple form of task learning, where a set of 4 input units project to 2 output units. The “task” is specified in terms of the relationships between patterns of activation over the input units, and the corresponding desired or target values of the output units. This type of network is often called a pattern associator, because the objective is to associate patterns of activity on the input with those on the output.
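As a concrete picture of what a pattern associator task looks like as data, the following Python sketch pairs each input pattern with its target pattern. The specific input patterns are an assumption (one active input unit per event); the actual patterns are defined in the project's environment.

```python
import numpy as np

# Hypothetical encoding of the 4 events: one active input unit per event.
inputs = np.eye(4)                          # rows: events, columns: 4 input units
targets = np.array([[1, 0],                 # left output unit should be active
                    [1, 0],                 # left output unit should be active
                    [0, 1],                 # right output unit should be active
                    [0, 1]], dtype=float)   # right output unit should be active

# Feedforward weights: one weight per (input unit, output unit) pair.
weights = np.full((4, 2), 0.5)

for i, (x, t) in enumerate(zip(inputs, targets)):
    print(f"event {i}: input={x.astype(int)} target={t.astype(int)}")
```

The task is fully specified by these input-target pairs; learning consists of adjusting the 4 × 2 weight matrix so that each input pattern drives the correct output unit.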

Iconify the network window, and do View, TEST_GRID_LOG. Then, press Step in the control panel 4 times.

You should see all 4 events from the environment presented in a random order. At the end of this epoch of 4 events, you will see the activations updated in a quick blur; this is the result of the testing phase, which is run after every epoch of training. During this testing phase, all 4 events are presented to the network, except
