Environmental Engineering Reference


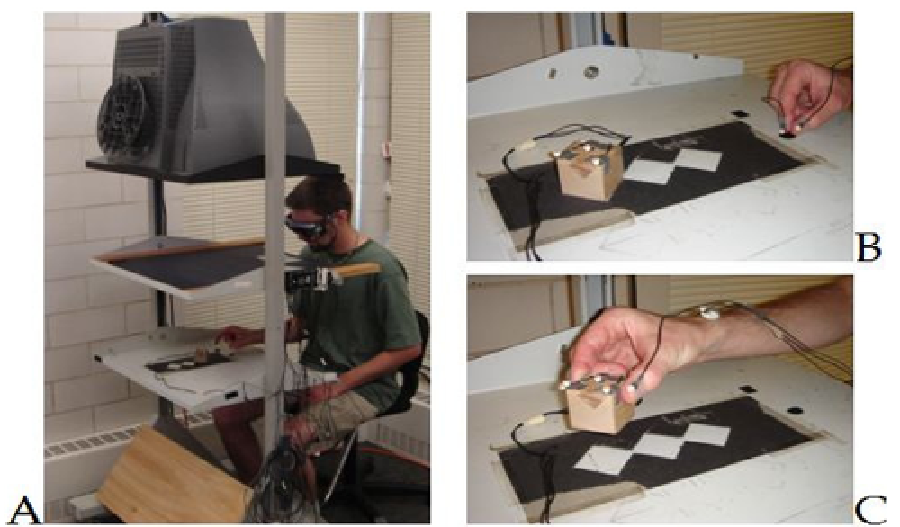

Fig. 1. Wisconsin virtual environment (WiscVE). Panel A shows the apparatus, with a downward-facing monitor projecting onto the mirror. The images are reflected up to the user, who wears stereoscopic LCD shutter goggles, so they appear at the level of the actual work surface below. Panels B and C demonstrate a reach-to-grasp task commonly used in this environment. The hand and the physical cube are instrumented with light-emitting diodes (LEDs) that are tracked by the VisualEyez (PTI Phoenix, Inc.) system (not shown).
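
The caption's claim that the reflected images appear at the level of the work surface follows from plane-mirror optics. A plane mirror forms the virtual image of a point as far behind the mirror as the point is in front of it. So if the mirror lies parallel to the screen, halfway between the screen and the work surface, the virtual image lands on that surface. The sketch below illustrates this under stated assumptions. It assumes a horizontal mirror parallel to the monitor screen, uses made-up heights in metres rather than WiscVE measurements, and the function names are hypothetical.

```python
"""Hypothetical sketch of the mirror geometry behind a WiscVE-style display.

Assumptions (not taken from the source): the mirror is a horizontal plane
parallel to the downward-facing monitor, and all heights are illustrative,
measured in metres above the work surface.
"""

Vec3 = tuple[float, float, float]


def reflect_point(p: Vec3, plane_point: Vec3, unit_normal: Vec3) -> Vec3:
    """Reflect point p across the plane through plane_point with unit normal n.

    Standard plane-mirror reflection: p' = p - 2 ((p - q) . n) n.
    """
    # Signed distance from the plane to p along the unit normal.
    d = sum((pi - qi) * ni for pi, qi, ni in zip(p, plane_point, unit_normal))
    return tuple(pi - 2.0 * d * ni for pi, ni in zip(p, unit_normal))


def mirror_height_for_image(monitor_height: float, target_height: float = 0.0) -> float:
    """Height of a horizontal mirror that puts the screen's virtual image at target_height."""
    # The image sits as far below the mirror as the screen sits above it,
    # so the mirror must lie midway between the screen and the target plane.
    return (monitor_height + target_height) / 2.0


if __name__ == "__main__":
    monitor_z = 0.60  # hypothetical screen height above the work surface
    mirror_z = mirror_height_for_image(monitor_z)
    pixel = (0.10, 0.20, monitor_z)  # a hypothetical point on the screen
    image = reflect_point(pixel, (0.0, 0.0, mirror_z), (0.0, 0.0, 1.0))
    print(f"mirror height: {mirror_z:.2f} m, virtual image of pixel: {image}")
```

With the mirror midway between screen and table, the reflected pixel keeps its horizontal coordinates and its height drops to the work surface. This is why a cube drawn on the screen can be made to coincide with a physical cube on the table.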

This type of interface gives investigators complete control over the three-dimensional visual scene (important for generalizability to natural environments) and makes maximal use of the naturalness, dexterity and adaptability of the human hand for the control of computer-mediated tasks (Sturman & Zeltzer, 1993). Using such a tangible user interface removes many of the implicit difficulties that natural aging processes create when operating standard computer input devices (Smith et al., 1999). Exploiting these abilities in computer-generated environments is believed to lead to better overall performance and richer interaction across a variety of applications (Hendrix & Barfield, 1996; Ishii & Ullmer, 1997; Slater, Usoh, & Steed, 1995). Furthermore, this type of direct-manipulation environment capitalizes on the user's pre-existing abilities and expectations, as the human hand provides the most familiar means of interacting with one's environment (Schmidt & Lee, 1999; Shneiderman, 1983). Such an environment is suitable for applications in simulation, gaming/entertainment, training, visualization of complex data structures, rehabilitation and learning (measurement and presentation of data regarding movement disorders). This breadth of applications eases the translation of our data into marketable products.

The VE provides a head-coupled, stereoscopic experience to a single user, allowing the user to grasp and manipulate augmented objects. The system is configured as follows (Figure 1): 3-D motion information (e.g., movement of the subject's hand and head and of physical objects within the environment) is monitored by a VisualEyez (PTI Phoenix, Inc.) motion