Information Technology Reference

In-Depth Information

Fig. 14.5. Some example images from

the Kinect camera. Nearby points are

dark gray, and farther points are light

gray. In the green areas, no infrared data

was captured.

estimation by removing a few big problems. You no longer have to worry

about what is in the background since it is just further away. The color and

texture of clothing, skin and hair are all normalized away. The size of the

person is known, as the depth camera is calibrated in meters.

7

The Xbox team had built an impressive human body-tracking system using

the three-dimensional information but had been unable to make the system

powerful enough for realistic game-playing situations. Shotton described the

problem as follows:

The Xbox group also came to us with a prototype human tracking algorithm

they had developed. It worked by assuming it knew where you were and

how fast you were moving at time t, estimating where you were going to be

at time t + 1, and then refining this prediction by repeatedly comparing a

computer graphics model of the human body at the prediction, to the actual

observed depth image on the camera and making small adjustments. The

results of this system were incredibly impressive: it could smoothly track your

movements in real-time, but it had three limitations. First, you had to stand in

a particular “T”-pose so it could lock on to you initially. Second, if you moved

too unpredictably, it would lose track, and as soon as that happened all bets

were off until you returned to the T-pose. In practice this would typically

happen every five or ten seconds. Third, it only worked well if you had a

similar body size and shape as the programmer who had originally designed it.

Unfortunately, these limitations were all show-stoppers for a possible product.

8

Shotton, with his colleagues Andrew Fitzgibbon and Andrew Blake, brain-

stormed about how they might solve these problems. The researchers knew

that they needed to avoid making the assumption that, given the body posi-

tion or “pose” in the previous video frame just 1/30 of a second ago, one could

find the current body position by trying “nearby” poses. With rapid motions,

this assumption just does not work. What was needed was a detection algo-

rithm for a single three-dimensional image that could take the raw depth mea-

surements and convert them into numbers that represented the body pose.

However, to include all possible combinations of poses, shapes, and sizes the

researchers estimated it would require approximately 10

13

different images.

This number was far too large for any matching process to run in real time on

the Xbox hardware. Shotton had the idea that instead of recognizing entire

natural objects, his team would create an algorithm that recognized the dif-

ferent body parts, such as “left hand” or “right ankle.” The team designed a

pattern of thirty-one different body parts and then used a

decision forest -

a

collection of

decision trees

- as a classification technique to predict the proba-

bility that a given pixel belonged to a specific part of the body (

Fig. 14.6

). By

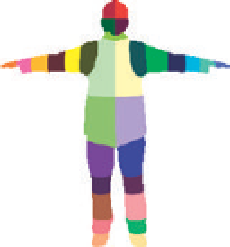

Fig. 14.6. Color-coded pattern of

thirty-one different body parts used by

the body pose algorithm developed by

Microsoft researchers for the Kinect

Xbox application.

Search WWH ::

Custom Search