
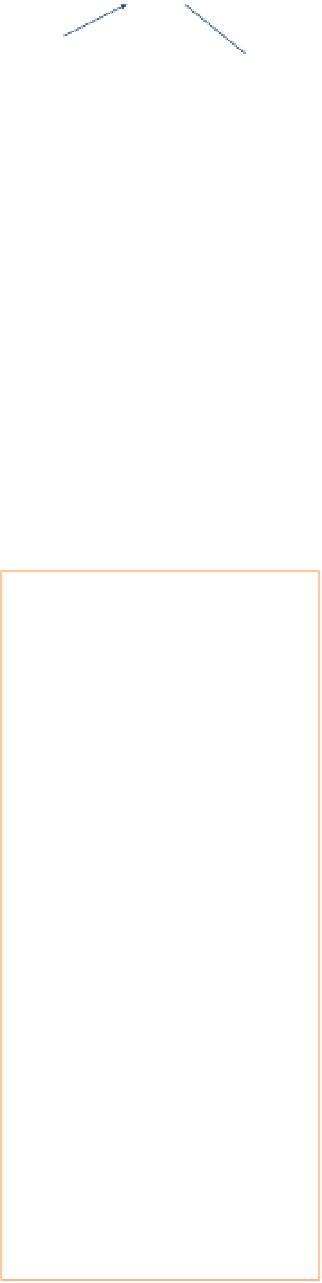

Imagine a simple three-layer neural network: a layer of input neurons, connected to a second hidden layer of neurons, which in turn is linked to a layer of output neurons (Fig. 13.18). Each neuron converts its inputs into a single output, which it transmits to neurons in the next layer. The conversion process has two stages. First, each incoming signal is multiplied by the weight of the connection, and then all these weighted inputs are added together to give a total weighted input. In the second stage, this combined input is passed through an activation function, such as the function of Fig. 13.17, to generate the output signal for the neuron. To train the network to perform a particular task, we must set the weights on the connections appropriately. The amount of weight on a connection determines the strength of the influence between the two neurons. The network is trained by using patterns of activity for the input neurons together with the desired pattern of activities for the output neurons.
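The two-stage conversion performed by each neuron can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the logistic sigmoid stands in for the activation function of Fig. 13.17, which is not reproduced here, and the function names are hypothetical.

```python
import math


def sigmoid(total):
    """Assumed activation function: the logistic sigmoid, with output in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-total))


def neuron_output(inputs, weights):
    """Convert a neuron's incoming signals into its single output signal."""
    # Stage 1: multiply each incoming signal by its connection weight and add.
    total_weighted_input = sum(w * x for w, x in zip(weights, inputs))
    # Stage 2: pass the combined input through the activation function.
    return sigmoid(total_weighted_input)


def layer_output(inputs, weight_rows):
    """Outputs of a whole layer; each row holds one neuron's incoming weights."""
    return [neuron_output(inputs, row) for row in weight_rows]
```

For example, `layer_output([1.0, 0.0, 1.0], [[0.2, -0.4, 0.1], [0.7, 0.3, -0.5]])` would give the two output signals of a layer of two neurons fed by three inputs, and those signals would in turn serve as the inputs to the next layer.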

After assigning the initial weights randomly, say between -1.0 and +1.0, we calculate the weighted input signals and outputs of the neurons in each layer of the network to determine the strength of the signals at the output neurons. For each input pattern, we know what pattern we want to see at the output layer, so we can see how closely our model output matches the desired output. We now have to adjust each of the weights so that the network produces a closer approximation to our desired output. We do this by first calculating the error, defined as the square of the difference between the actual and desired outputs. We want to change the weight of each connection to reduce this error by an amount that is proportional to the rate at which the error changes as the weight is altered. We first make such changes for all the neurons in the output layer. We then repeat the calculation to find the sensitivity of the error to the weights connecting each layer, working backward layer by layer from the output to the input. The idea is that each hidden node contributes some fraction of the error at each of the output nodes to which it is connected. This type of network is known as a feed-forward network because the signals between the neurons travel in only one direction, from the input nodes, through the hidden nodes, to the output nodes. The learning algorithm to train the neural network is called back propagation because the error at the output layer is propagated (that is, passed along) backward through the hidden layer of the network (see Fig. 13.18).
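The training procedure described above can be sketched as follows. This is a minimal illustration, not the book's own code: it assumes a logistic sigmoid activation, omits bias terms (the text does not mention them), and uses a hypothetical learning rate, hidden-layer size, epoch count, and set of training patterns. The error for one pattern is the sum of the squared differences between actual and desired outputs, and each weight is moved against the rate of change of that error.

```python
import math
import random


def sigmoid(total):
    """Assumed activation function (logistic sigmoid)."""
    return 1.0 / (1.0 + math.exp(-total))


def forward(x, w_hidden, w_output):
    """Feed-forward pass: input -> hidden -> output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_output]
    return hidden, output


def squared_error(output, target):
    """Error: sum of squared differences between actual and desired outputs."""
    return sum((y - t) ** 2 for y, t in zip(output, target))


def train_step(x, target, w_hidden, w_output, rate):
    """One back-propagation update for a single pattern (weights changed in place)."""
    hidden, output = forward(x, w_hidden, w_output)
    # Output layer: rate of change of the error with each neuron's total input.
    delta_out = [2.0 * (y - t) * y * (1.0 - y) for y, t in zip(output, target)]
    # Hidden layer: each node takes a share of the error of every output node
    # it feeds, weighted by the connection (computed before any weight changes).
    delta_hid = [
        h * (1.0 - h) * sum(d * w_output[k][j] for k, d in enumerate(delta_out))
        for j, h in enumerate(hidden)
    ]
    # Move each weight in proportion to the rate at which the error changes with it.
    for k, d in enumerate(delta_out):
        for j, h in enumerate(hidden):
            w_output[k][j] -= rate * d * h
    for j, d in enumerate(delta_hid):
        for i, xi in enumerate(x):
            w_hidden[j][i] -= rate * d * xi


def total_error(patterns, w_hidden, w_output):
    return sum(squared_error(forward(x, w_hidden, w_output)[1], t) for x, t in patterns)


def train(patterns, n_hidden=3, rate=0.5, epochs=2000, seed=0):
    """Train from random initial weights in [-1.0, +1.0]; return errors and weights."""
    rng = random.Random(seed)
    n_in, n_out = len(patterns[0][0]), len(patterns[0][1])
    w_hidden = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    w_output = [[rng.uniform(-1.0, 1.0) for _ in range(n_hidden)] for _ in range(n_out)]
    before = total_error(patterns, w_hidden, w_output)
    for _ in range(epochs):
        for x, target in patterns:
            train_step(x, target, w_hidden, w_output, rate)
    after = total_error(patterns, w_hidden, w_output)
    return before, after, w_hidden, w_output


# Hypothetical training set: three input signals, two desired output signals.
PATTERNS = [
    ([1.0, 0.0, 0.0], [1.0, 0.0]),
    ([0.0, 1.0, 0.0], [0.0, 1.0]),
    ([0.0, 0.0, 1.0], [1.0, 1.0]),
]
```

Calling `before, after, w_hidden, w_output = train(PATTERNS)` returns the total error before and after training, so the two values can be compared to see whether the weight adjustments brought the outputs closer to the desired patterns.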

In the 1990s, researchers found it hard to train neural networks with more than one hidden layer and two layers of weights. The problem was that the weights on any extra layers could not be adapted to produce significant improvements in learning. However, within the last few years, Geoffrey Hinton and colleagues from the University of Toronto, and researchers Li Deng and Dong Yu from Microsoft Research, have shown that much deeper layered networks can not only be trained efficiently but can also deliver significantly improved learning outcomes. This deep learning approach is currently causing great excitement in the machine-learning community and is already leading to new commercial applications.

[Figure: network diagram. Labels: Input layer (X1, X2, X3), Hidden layer, Output layer (Y1, Y2), Weights.]

Fig. 13.18. An example of a three-layer ANN, with all connections between layers. The output of the neural network is specified by the connectivity of the neurons, the weights on the connections, the input signals, and the threshold function.

B.13.9. Geoffrey Hinton is a computer scientist based in Toronto who was one of the first computer scientists to show how to make computers “learn” more like a human brain. He has recently participated in exciting advances using so-called deep neural networks. His start-up company on such approaches to computer learning and recognition problems was bought by Google in 2013. Hinton is the great-great-grandson of logician George Boole.

Photo by Emma Hinton
