Fig. 13.16. Representation of an artificial

neuron with inputs, connection weights,

and the output subject to a threshold

function.

Inputs

Weights

W

1

I

1

W

2

I

2

Output

Sum

W

3

∑

I

3

Threshold

W

4

I

N

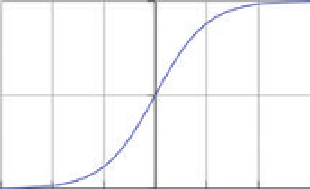

of McCulloch and Pitts, the input could only be either 0 or 1. In addition, each input “dendrite” had an associated “weight” of either +1 or -1, representing inputs that tended either to excite the neuron to fire or to inhibit it from firing, respectively. The model calculated the weighted sum of the inputs (the sum of each input multiplied by its weight) and compared this sum with the threshold value. If the weighted sum was greater than the threshold, the model neuron fired and emitted a 1 on its axon. Otherwise, the output remained 0. Rosenblatt's perceptron model allowed both the inputs to the neurons and the weights to take on any value. In addition, the simple activation threshold was replaced by a smoother activation function, a mathematical function used to transform the activation level of the neuron into an output signal, such as the function shown in Figure 13.17. ANNs are just interconnected layers of perceptrons, as shown in Figure 13.18.
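The two neuron models described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not an implementation taken from the book. The function names are hypothetical. The logistic sigmoid stands in for the “smoother activation function” because its output runs from 0 to 1, but the text does not say which function is actually plotted in Figure 13.17.

```python
import math


def mcculloch_pitts(inputs, weights, threshold):
    """Binary threshold neuron: inputs are 0 or 1, weights are +1 or -1."""
    if any(x not in (0, 1) for x in inputs):
        raise ValueError("inputs must be 0 or 1")
    if any(w not in (1, -1) for w in weights):
        raise ValueError("weights must be +1 (excite) or -1 (inhibit)")
    # Weighted sum: each input multiplied by its weight, then added up.
    weighted_sum = sum(x * w for x, w in zip(inputs, weights))
    # The neuron fires (emits 1) only if the sum exceeds the threshold.
    return 1 if weighted_sum > threshold else 0


def sigmoid(z):
    """Logistic function (an assumed choice of smooth activation)."""
    return 1.0 / (1.0 + math.exp(-z))


def smooth_unit(inputs, weights, threshold=0.0):
    """Unit with real-valued inputs and weights and a smooth output."""
    activation = sum(x * w for x, w in zip(inputs, weights)) - threshold
    return sigmoid(activation)


# Two excitatory inputs are on, the inhibitory input is off: sum 2 > 1, fires.
fired = mcculloch_pitts([1, 1, 0], [1, 1, -1], threshold=1)
# The inhibitory input is also on: sum 1 is not greater than 1, stays silent.
silent = mcculloch_pitts([1, 1, 1], [1, 1, -1], threshold=1)
# Real-valued inputs and weights whose weighted sum is exactly 0.
halfway = smooth_unit([0.5, 2.0], [1.0, -0.25])
```

In the threshold model the output jumps from 0 to 1 at the threshold, whereas the smooth unit changes gradually with the weighted sum, so small changes in a weight produce small changes in the output.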

Computers are very much faster than humans at performing arithmetic. For tasks involving pattern recognition (the automatic identification of figures, shapes, forms, or patterns to recognize faces, speech, handwriting, objects, and so on), even young children are still very much better than the most powerful computers. The hope for ANN research is that by mimicking how our brains learn, these artificial networks can be trained to recognize patterns. The study of ANNs is sometimes called connectionism.

The publication of the famous book Perceptrons in 1969 by Marvin Minsky and Seymour Papert from MIT dashed early hopes for progress with neural networks. Minsky and Papert showed that a simple two-layer perceptron network was incapable of learning some very simple patterns. While they did not rule out the usefulness of multilayer perceptron networks with what they called “hidden” layers, they pointed out “the lack of effective learning algorithms” [22] for such networks. This situation changed in the 1980s with the discovery of just such an effective learning algorithm. A very influential paper in the journal Nature gave the algorithm its name: “Learning Representations by Back-Propagating Errors” by David Rumelhart, Geoffrey Hinton (B.13.9), and Ronald Williams. Let us see how this back-propagation algorithm enables neural networks to learn.
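The limitation of simple perceptrons can be made concrete with a small experiment. The text does not name the “very simple patterns”; the exclusive-or (XOR) function is the example most often cited, and using it here is an assumption of this sketch. The code searches a grid of weights and thresholds for a single threshold unit that reproduces a given truth table. All names are hypothetical, and the finite grid only illustrates the point; the comment in the code gives the short argument that holds for all real values.

```python
from itertools import product


def fires(inputs, weights, threshold):
    """Single threshold unit: 1 if the weighted sum exceeds the threshold."""
    return 1 if sum(x * w for x, w in zip(inputs, weights)) > threshold else 0


def find_unit(truth_table, grid):
    """Return the first (w1, w2, threshold) on the grid that matches the table."""
    for w1, w2, t in product(grid, repeat=3):
        if all(fires(x, (w1, w2), t) == y for x, y in truth_table.items()):
            return (w1, w2, t)
    return None


AND = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1}
XOR = {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}

# Candidate values from -4.0 to 4.0 in steps of 0.5.
GRID = [i / 2 for i in range(-8, 9)]

and_unit = find_unit(AND, GRID)  # some matching unit exists
xor_unit = find_unit(XOR, GRID)  # None: no single unit matches

# Why XOR fails for any real values, not just this grid:
# (0,0) -> 0 needs threshold >= 0; (0,1) and (1,0) -> 1 need w2 > threshold
# and w1 > threshold, so w1 + w2 > 2 * threshold >= threshold. But
# (1,1) -> 0 needs w1 + w2 <= threshold, which is a contradiction.
```

A network with a hidden layer can represent XOR, which is why an effective learning algorithm for such networks mattered so much.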

Fig. 13.17. A simple threshold function for an artificial neuron. The strength of the output signal depends on the magnitude of the sum of the input signals. Plot axes: horizontal from -6 to 6, vertical from 0 to 1.
