Information Technology Reference

In-Depth Information

hypertext links from page to page. When he or she arrives at a web page

with multiple links, the surfer randomly chooses one of the links. We can

now attempt to calculate the importance of each page by counting how many

times the random surfer visits each site. To avoid the problem of

buckets

-

cycles where the surfer can go around forever - Page and Brin introduced a

“teleportation” probability. At each page the surfer visits, there is a chance

that he or she does not follow one of the links from the page but instead

jumps to an entirely new web page chosen at random. The surfer then uses

this page as a new starting point for continued surfing. In addition, to avoid

the problem of the surfer getting stuck in a so-called

dangling node

, a page

with no outgoing web links, Page and Brin also allowed the surfer to jump to

a random new page from such nodes. We can simulate the action of the ran-

dom surfer on a network of web links and find out which pages the surfer vis-

its most often. The importance score calculated in this way takes into account

both the number of hyperlinks pointing to a web page and the authority of

the linking web page. The method works in the presence of link cycles and

dangling nodes and is able to deliver meaningful and stable values for each

page's importance.

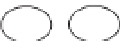

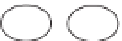

Figure 11.22a

shows a network of eleven web pages and their associ-

ated links. A computer simulation of the random surfer algorithm gives the

PageRank score shown in

Figure 11.22b

. In practice, the computation of the

PageRank score is not done by performing a computer simulation of the surf-

er's travels. Instead, the problem can be formulated as solving a mathematical

problem involving

sparse matrices

. A

matrix

is an array of numbers or symbols

arranged in rows and columns, and

sparse

just means that the matrix has many

elements that are zero. The matrices concerned are huge, with many billions

of rows, but fortunately fast computational methods exist that can be used to

find the PageRank scores.

Armed with the PageRank algorithm, by early 1997 Page had developed

a prototype search engine that he called “BackRub” because it analyzed the

incoming or “back” links to web pages to calculate a page's importance. While

other searches relied on the content and structure indexes, BackRub added the

dimension of importance to rank pages in order of likely relevance. By the end

of the year, Page and his officemate at Stanford, Sean Anderson, were brain-

storming for a new name for the search engine. They decided on the name

Google, thinking the word

google

meant a very large number. Page registered

Google.com the same evening only to find out next day that the word they

were thinking of was spelled

googol

, meaning the number 1 followed by one

hundred zeros. With their search engine now being used by Stanford students

and faculty, Page and Brin needed to beg and borrow as many computers as

they could to keep up with both the growth of users and that of the web. Page's

dorm room became the first Google data center, crammed with inexpensive

PCs (

Fig. 11.23

). Today Google has data centers around the world that run hun-

dreds of thousands of servers (

Fig. 11.24

).

Having demonstrated the superiority of a search engine using the PageRank

algorithm to calculate a web page's importance, Page and Brin tried to inter-

est AltaVista in buying the rights to their system. Paul Flaherty, one of the

originators of the AltaVista search engine, was impressed with their link-based

approach to page ranking. Nevertheless, Flaherty came back to them saying

L

M

H

I

G

E

D

F

B

A

C

(a)

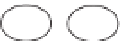

H(1.62)

I(1.62)

G(1.62)

L(1.62)

M(1.62)

E(8.08)

D(3.91)

F(3.91)

A(3.28)

B(38.42)

C(34.31)

(b)

Fig. 11.22. (a) An example of a set of web

pages -

nodes

- showing the hypertext

links connecting the different pages with

the direction of each link marked by an

arrow. Web page A is known as a

dan-

gling node

because this has no outgoing

links. Pages B and C are said to form a

bucket

, a reachable strongly connected

pair of pages with no outgoing links to

the rest of the pages of the graph. (b)

The importance of each page can be

calculated using the PageRank algorithm.

For this graph we have calculated the

importance of each page using the

ran-

dom surfer

method although in practice

other methods are used. The scores have

been normalized to add up to 100. Page

C receives just one link but this is from

the most important page. Page C ends

up being more important than page

E - which receives more links but all but

one from pages G, H, M, L, and I, which

all have no incoming links and therefore

have the minimum importance.

Search WWH ::

Custom Search