
Such simulations have now become an essential element of car design, with detailed crash simulations using models of the driver and passengers.

By the 1980s, microprocessors based on a new transistor technology (CMOS) had started to appear as alternative building blocks for supercomputers.

Cray was rather skeptical. When asked whether he had considered building the next generation of Cray computers from the new components, he famously replied: “If you have a heavy load to move, what would you rather use: a pair of oxen or a hundred chickens?”

19

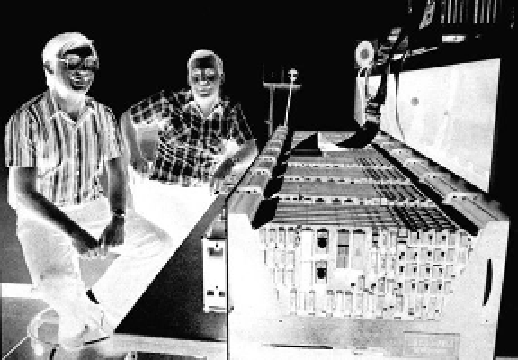

However, in the early 1980s, Geoffrey Fox and Chuck Seitz at Caltech put together a parallel computer called the Cosmic Cube. In essence, this was a collection of IBM PC boards, each with an Intel microprocessor and memory, connected together by a so-called hypercube network.
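The text does not spell out what makes a hypercube network attractive, so here is a minimal Python sketch of the idea. It assumes the 64-node machine described in the caption of Fig. 7.24 is modelled as a 6-dimensional binary hypercube (2^6 = 64 nodes): every node has a 6-bit address and is wired directly to the six nodes whose addresses differ from its own in exactly one bit. The function names and the lowest-bit-first routing rule are illustrative assumptions, not a description of the Cosmic Cube's actual hardware or software.

```python
"""Sketch of hypercube addressing and routing (illustrative only).

Assumption: a 64-node machine treated as a 6-dimensional binary hypercube.
"""

DIMENSION = 6                # assumed: 64 nodes -> 6 address bits
NUM_NODES = 1 << DIMENSION   # 64


def neighbors(node: int, dim: int = DIMENSION) -> list[int]:
    """Nodes directly linked to `node`: flip exactly one address bit."""
    return [node ^ (1 << bit) for bit in range(dim)]


def hops(src: int, dst: int) -> int:
    """Fewest links between two nodes = number of differing address bits."""
    return bin(src ^ dst).count("1")


def route(src: int, dst: int, dim: int = DIMENSION) -> list[int]:
    """Hypothetical dimension-order routing: correct differing bits lowest first."""
    path = [src]
    current = src
    diff = src ^ dst
    for bit in range(dim):
        if diff & (1 << bit):
            current ^= 1 << bit   # take the link along this dimension
            path.append(current)
    return path


if __name__ == "__main__":
    print(neighbors(0))                # [1, 2, 4, 8, 16, 32]
    print(hops(0, NUM_NODES - 1))      # 6
    print(route(0b000101, 0b110100))   # [5, 4, 20, 52]
```

Under these assumptions, no message between any two of the 64 nodes needs more than six hops, while each node needs only six links. That logarithmic growth of distance with machine size is what the caption's phrase "minimizing communication delays" refers to.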

The importance of the Cosmic Cube experience was that Fox and Seitz demonstrated, for the first time, that it was both feasible and practical to use such “distributed memory” parallel computers to solve challenging scientific problems. On these machines, programmers need to exploit domain parallelism as shown in Figure 7.20b. Instead of the latency caused by filling and emptying a vector pipeline, the overhead in distributed memory programs comes from the need to exchange information at the boundaries of the domains, since the data is subdivided among the different nodes of the machine. This style of parallel programming is called message passing.
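To make the boundary-exchange overhead concrete, the following is a minimal Python sketch, not taken from the original. It assumes a one-dimensional grid and a simple averaging (Jacobi-style) stencil as a stand-in for a real scientific simulation, and it simulates the nodes in a single process: each "node" owns one contiguous block of the grid and, before every update, receives one boundary ("ghost") value from each neighbouring node. All function names are hypothetical; on a real distributed memory machine those list lookups would be network messages (for example, MPI sends and receives).

```python
"""Domain decomposition with boundary exchange, simulated in one process.

Assumptions: 1-D grid, fixed values at both global ends, averaging stencil.
"""


def jacobi_step(u: list[float]) -> list[float]:
    """Serial reference: each interior point becomes the mean of its neighbours."""
    return [u[0]] + [(u[i - 1] + u[i + 1]) / 2 for i in range(1, len(u) - 1)] + [u[-1]]


def split(u: list[float], nodes: int) -> list[list[float]]:
    """Give each simulated node one contiguous block of the global grid."""
    if len(u) % nodes:
        raise ValueError("grid length must be divisible by the number of nodes")
    size = len(u) // nodes
    return [u[k * size:(k + 1) * size] for k in range(nodes)]


def distributed_step(blocks: list[list[float]]) -> list[list[float]]:
    """One update on every node, using only local data plus received ghost values."""
    last = len(blocks) - 1
    new_blocks = []
    for k, block in enumerate(blocks):
        # "Receive" the neighbours' edge values: the only data crossing node boundaries.
        ghost_left = blocks[k - 1][-1] if k > 0 else None
        ghost_right = blocks[k + 1][0] if k < last else None
        new = []
        for i, value in enumerate(block):
            if (k == 0 and i == 0) or (k == last and i == len(block) - 1):
                new.append(value)  # fixed boundary of the whole domain
                continue
            left = block[i - 1] if i > 0 else ghost_left
            right = block[i + 1] if i < len(block) - 1 else ghost_right
            new.append((left + right) / 2)
        new_blocks.append(new)
    return new_blocks


def run(grid: list[float], nodes: int, steps: int) -> tuple[list[float], list[float]]:
    """Advance the serial and distributed versions side by side."""
    serial, blocks = grid, split(grid, nodes)
    for _ in range(steps):
        serial = jacobi_step(serial)
        blocks = distributed_step(blocks)
    merged = [x for block in blocks for x in block]
    return serial, merged


if __name__ == "__main__":
    serial, merged = run([0.0] * 15 + [100.0], nodes=4, steps=50)
    print(serial == merged)
```

In this sketch, each update step moves only two values across every internal boundary between blocks, no matter how large the blocks are. That is why distributed memory programs try to give each node a large domain: the computation grows with the volume of the block, while the communication grows only with its boundary.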

Today, all the highest-performance computers use such a distributed memory, message-passing architecture, albeit with a variety of different types of networks connecting the processing nodes. Instead of the Cray-1's eighty Mflop/s peak performance, we now have distributed memory supercomputers with top speeds of teraflop/s and petaflop/s, while gigaflop/s performance is now routinely available on a laptop! The supercomputing frontier is to break the exaflop/s barrier, and U.S., Japanese, European, and Chinese companies are now taking on this challenge. In answer to Cray's sarcastic comment about hundreds of chickens, Eugene Brooks III, a member of the original Cosmic Cube team at Caltech, characterized the success of commodity-chip-based, distributed memory machines as “the attack of the killer micros.”

20

Fig. 7.24. Geoffrey Fox and Chuck Seitz pictured next to the Caltech Cosmic Cube machine. This parallel computer became operational in October 1983 and contained sixty-four nodes, each with 128 kilobytes of memory. Each computing node was made up of an Intel 8086 processor with an 8087 coprocessor for fast floating-point operations. The nodes were linked together in a so-called hypercube topology (several cubes connected together) to minimize communication delays between nodes. The computer occupied only six cubic feet and drew less than a kilowatt of power. The Cosmic Cube and its successors represented a serious challenge to the Cray vector supercomputers. Today all the highest-performing machines use a distributed memory, message-passing architecture similar to the Caltech design.
