Environmental Engineering Reference

In-Depth Information

formula of the SGD algorithm to improve training efficiency.

Momentum is a variable used to reduce the training time

and avoid oscillations around the minimum. While the SGD

algorithm adjusts the weights along the steepest gradient descent

of the performance function, the GDM algorithm concerns

both the current gradient descent and the recent changes of

the weights. Therefore, the GDM algorithm can use a larger

learning rate to speed up the training process with lower risk of

oscillations.

The SGD and GDM algorithms consider both the magnitude

and the sign of the gradient of the performance function. These

two algorithms usually take a long time to converge. The resilient

propagation (RP) algorithm proposed by Riedmiller and Braun

(1993) attempts to speed up the training process by eliminating

the negative impacts of the magnitudes of the partial derivatives.

It uses the sign of the derivatives of the classification error to

determine the direction of the weight update. The magnitude

of the weight update is defined by an individual variable which

increases when the derivatives for two successive iterations have

the same sign, otherwise decreases. Generally, the RP algorithm

is much faster than other back-propagation training algorithms.

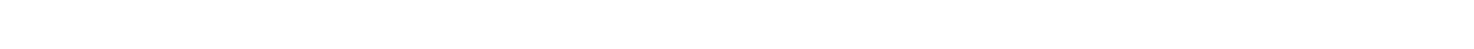

y

y

T<c

T<c

1

1

T>c

T > c

0

x

0

x

−

1

A

B

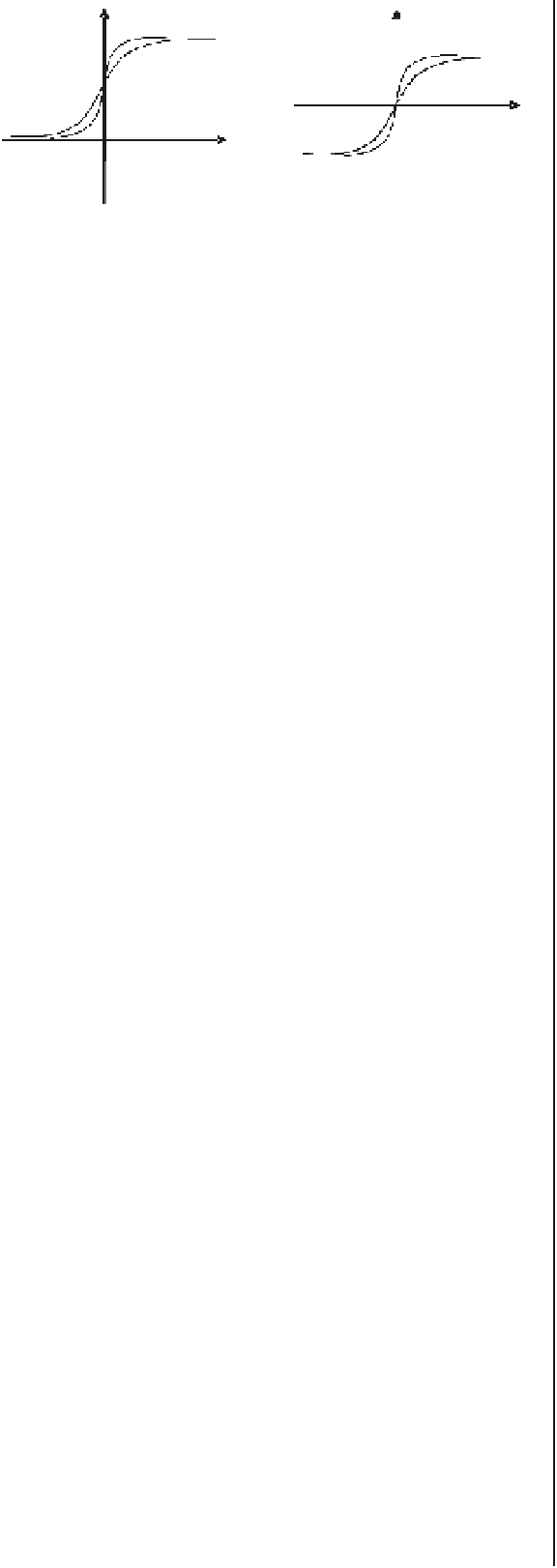

FIGURE 7.2

Curves of activation functions as related to

different threshold (T) values, given that c is any con-

stant between 0 and 1. (A) log-sig function and (B) tan-sig

function.

most commonly used (Dawson and Wilby, 2001). There is a

rich pool of training algorithms varying by their origins, local

or global perspective, or learning mode. They are rooted in

various techniques, such as optimum filtering, neurobiology, or

statistical mechanics. Local learning algorithms adjust weights by

using localized input signals and localized derivative of the error

function, while global algorithms consider all input signals. Based

on the learning mode, training algorithms can be grouped into

either a supervised, unsupervised, or hybrid paradigm. Reviews

on these algorithms are given elsewhere (e.g., Bishop, 1995; Jain,

Mao and Mohiuddin, 1996; Haykin, 1999; Principe, Euliano and

Lefebvre, 2000). Here, we focus on three groups of supervised

training methods that have been commonly used to train the

MLP neural networks: back-propagation, conjugate gradient,

and quasi-Newton methods; they differ in the ways by which the

direction and magnitude of weight adjustments are calculated

(Table 7.2).

7.2.3.2

Conjugate gradient method

The basic back-propagation method adjusts the weights along

the steepest descent direction. However, this can be quite time-

consuming due to the fixed learning rate. Rather than using the

magnitude of the gradient descent and a user-defined learning

rate, the conjugate gradient method employs a series of line

searches along conjugate directions to determine the optimal

step size of the weight update, thus allowing fast convergence.

Specifically, the conjugate gradient training comprises two steps.

The first step is to search the local minimum in error along a

certain search direction; the weights will be adjusted to the local

minimum point. The second step is to compute the conjugate

of the previous search direction as the new search direction; the

global minimum error is approached through iterations. This

group of training method includes several commonly used algo-

rithms, such as Fletcher-Reeves (CGF), Polak-Ribiere (CGP),

Powell-Beale (CGB), and scaled conjugate gradient (SCG) algo-

rithms (see Table 7.2).

The CGF algorithm calculates the mutually conjugate direc-

tions of searchwith respect to theHessianmatrix directly fromthe

function evaluation and the gradient evaluation, but without the

direct evaluation of the Hessian of the function. It computes

the coefficient (beta) as a ratio of the norm squared of the current

gradient to the norm squared of the previous gradient. The CGP

algorithm is similar to the CGF algorithm, differing only in the

coefficient (beta) computation. It computes beta as the inner

product of the previous change in the gradient with the current

gradient divided by the norm squared of the previous gradient.

Using the same learning model used by the CGF algorithm to

compute the conjugate direction, the CGB algorithm, however,

resets the search direction to the negative of the gradient if the

error function is non-quadratic. Finally, the SCG algorithm is a

variation of the conjugate gradient method with a scaled step size.

It combines the model-trust region approach with the conjugate

gradient approach to scale the step size.

7.2.3.1

Back-propagation method

Due to its transparency and effectiveness, the back-propagation

method has been widely used in neural network training (Duda,

Hart and Stork, 2001). It adjusts the weights of networks through

iterations based upon training samples and their desired output.

On each iteration, the derivatives of the classification error as

functions of the weights are computed to determine the direction

andmagnitude of theweight update. Through these iterations, the

weights of networks are gradually optimized. Several commonly

used back-propagation training algorithms include the steepest

gradient descent (SGD), the gradient descent with momentum

(GDM), and the resilient propagation (RP) algorithms.

The SGD algorithm is the simplest back-propagation algo-

rithm, which determines the direction and magnitude of the

weights by the derivatives of the classification error with respect

to any weight. It incorporates a user-defined learning rate. It is

difficult to choose an appropriate learning rate. If the learning

rate is set too large, the algorithm may end up with oscillations

around the minimum error; otherwise, the training may be inef-

ficient. In addition, a fixed learning rate causes small changes in

the weights even though the weights are far from their optimal

values. Consequently, this algorithmusually takesmore iterations

to converge.

The GDM algorithm is an advanced gradient descent

approach adding a momentum item in the weight adjustment

Search WWH ::

Custom Search