Environmental Engineering Reference


TABLE 7.1

List of some commonly used neural network types in machine learning.

| No. | Network type | Brief description^a |
|---|---|---|
| 1 | Multilayer feed-forward perceptron networks | They comprise an input-hidden-output layered structure, and the input signal propagates through the network in a forward direction. They are the most widely used networks. |
| 2 | Radial basis function networks | They replace the sigmoidal hidden-layer transfer function of multilayer perceptrons with radial basis functions, thus recasting the design of a neural network as a curve-fitting problem in a high-dimensional space. |
| 3 | Self-organizing networks | They use a supervised or an unsupervised learning method to transform an input signal pattern of arbitrary dimension into a lower-dimensional (usually one- or two-dimensional) space, preserving topological information as much as possible. |
| 4 | Adaptive resonance theory (ART) networks | They provide the functionality for creating and using a supervised or unsupervised neural network based on Adaptive Resonance Theory. |
| 5 | Recurrent networks (e.g., Hopfield network) | Unlike feed-forward networks, recurrent neural networks use bi-directional data flow, propagating data from later processing stages back to earlier stages. |
| 6 | Modular neural networks (e.g., committee of machines) | They use several small networks that cooperate or compete to solve problems. |
| 7 | Stochastic neural networks (e.g., Boltzmann machine) | These networks introduce random variations into the network, often viewed as a form of statistical sampling. |
| 8 | Dynamic neural networks | They not only handle non-linear multivariate behavior but also learn time-dependent behavior. |
| 9 | Neuro-fuzzy networks | They embed a fuzzy inference system in the body of a neural network, introducing processes such as fuzzification, inference, aggregation and defuzzification into the network. |

^a Detailed discussions of these neural network types are given elsewhere (e.g., Bishop, 1995; Haykin, 1999; Duda, Hart and Stork, 2001).
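For the radial basis function entry in Table 7.1, the curve-fitting view can be made concrete with a small sketch: Gaussian hidden units with fixed, evenly spaced centers feed a linear output neuron, and the output weights come from a linear least-squares solve. The Gaussian basis, the fixed centers and width, the tiny ridge term, and the sine target are illustrative assumptions, not taken from the source, and the helper names are hypothetical.

```python
import math


def gaussian(x, center, width):
    """Radial basis function: the response depends only on the distance |x - center|."""
    return math.exp(-((x - center) ** 2) / (2.0 * width ** 2))


def solve(a, b):
    """Solve the square system a @ w = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]


def fit_output_weights(xs, ys, centers, width, ridge=1e-8):
    """Curve fitting: linear least squares for the output weights via the normal equations."""
    phi = [[gaussian(x, c, width) for c in centers] for x in xs]
    k = len(centers)
    gram = [[sum(row[i] * row[j] for row in phi) + (ridge if i == j else 0.0)
             for j in range(k)] for i in range(k)]
    rhs = [sum(row[i] * y for row, y in zip(phi, ys)) for i in range(k)]
    return solve(gram, rhs)


def predict(x, centers, width, weights):
    """Network output: weighted sum of the hidden RBF responses."""
    return sum(w * gaussian(x, c, width) for w, c in zip(weights, centers))


xs = [i * math.pi / 40 for i in range(41)]     # hypothetical training inputs on [0, pi]
ys = [math.sin(x) for x in xs]                 # hypothetical target curve
centers = [i * math.pi / 7 for i in range(8)]  # fixed, evenly spaced centers
width = math.pi / 7
weights = fit_output_weights(xs, ys, centers, width)
max_error = max(abs(predict(x, centers, width, weights) - y) for x, y in zip(xs, ys))
print(f"max training error: {max_error:.2e}")
```

Because the hidden layer is fixed in this sketch, training reduces to a single linear least-squares solve.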
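For the self-organizing networks entry, the classic unsupervised variant (Kohonen's self-organizing map) can be sketched as follows. It maps two-dimensional points onto a one-dimensional chain of units; the Gaussian neighborhood that shrinks over time is what preserves topology. The linear decay schedules, parameter values, and toy data are assumptions made for illustration, and the function names are hypothetical.

```python
import math
import random


def best_matching_unit(weights, x):
    """Index of the unit whose weight vector is closest (squared Euclidean) to x."""
    return min(range(len(weights)),
               key=lambda j: sum((w - xi) ** 2 for w, xi in zip(weights[j], x)))


def train_som(data, n_units, epochs=50, lr0=0.5, seed=0):
    """Train a 1-D Kohonen map on the input vectors in data (unsupervised)."""
    rng = random.Random(seed)
    dim = len(data[0])
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_units)]
    radius0 = n_units / 2.0
    total_steps = epochs * len(data)
    step = 0
    for _ in range(epochs):
        order = list(range(len(data)))
        rng.shuffle(order)
        for idx in order:
            x = data[idx]
            frac = step / total_steps
            lr = lr0 * (1.0 - frac)                    # learning rate decays linearly
            radius = max(radius0 * (1.0 - frac), 0.5)  # neighborhood shrinks over time
            bmu = best_matching_unit(weights, x)
            for j in range(n_units):
                # Units near the winner on the 1-D lattice are also pulled toward x,
                # which is what keeps neighboring units mapped to neighboring inputs.
                h = math.exp(-((j - bmu) ** 2) / (2.0 * radius ** 2))
                weights[j] = [w + lr * h * (xi - w) for w, xi in zip(weights[j], x)]
            step += 1
    return weights


data = [[t / 20, t / 20] for t in range(21)]  # hypothetical points along a line in 2-D
som = train_som(data, n_units=10)
print([best_matching_unit(som, x) for x in data])
```

Printing the winning unit for each input shows how the 2-D points are indexed along the 1-D chain.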
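For the ART entry, a simplified ART1 network (binary inputs, fast learning, unsupervised) illustrates the core idea. An input joins the best-matching category only if it passes a vigilance test; otherwise a new category is created. This is a minimal sketch under those assumptions, and the class name, the choice parameter `beta`, and the example patterns are hypothetical.

```python
class ART1:
    """Simplified ART1 with fast learning (a hypothetical minimal sketch)."""

    def __init__(self, vigilance, beta=1.0):
        self.vigilance = vigilance  # rho: how closely an input must match a prototype
        self.beta = beta            # small choice parameter used to rank categories
        self.prototypes = []        # one binary prototype (top-down weight) per category

    def present(self, pattern):
        """Return the category index assigned to a non-zero binary pattern."""
        norm_i = sum(pattern)
        if norm_i == 0:
            raise ValueError("this sketch assumes a non-zero binary input")

        def overlap(w):
            return sum(a & b for a, b in zip(pattern, w))

        # Choice function: rank existing categories by |I AND w| / (beta + |w|).
        order = sorted(range(len(self.prototypes)),
                       key=lambda j: -overlap(self.prototypes[j])
                       / (self.beta + sum(self.prototypes[j])))
        for j in order:
            w = self.prototypes[j]
            if overlap(w) / norm_i >= self.vigilance:
                # Resonance: fast learning shrinks the prototype to I AND w.
                self.prototypes[j] = [a & b for a, b in zip(pattern, w)]
                return j
            # Otherwise this category is reset and the next-best one is tried.
        self.prototypes.append(list(pattern))  # no resonance: commit a new category
        return len(self.prototypes) - 1


patterns = [[1, 1, 0, 0, 0, 0], [1, 1, 1, 0, 0, 0],
            [0, 0, 0, 1, 1, 0], [0, 0, 0, 1, 1, 1]]
coarse = ART1(vigilance=0.6)
labels_coarse = [coarse.present(p) for p in patterns]
fine = ART1(vigilance=0.9)
labels_fine = [fine.present(p) for p in patterns]
print(labels_coarse, labels_fine)  # [0, 0, 1, 1] [0, 1, 2, 3]
```

With the same four patterns, a low vigilance (0.6) groups them into two categories, while a high vigilance (0.9) gives each pattern its own category.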
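For the recurrent-network entry, a Hopfield network can be sketched with bipolar (+1/-1) neurons, Hebbian weights, and asynchronous updates in which each neuron's output feeds back into all the others. The stored patterns and the helper names are hypothetical; this is an illustration, not a production associative memory.

```python
def train_hopfield(patterns):
    """Hebbian storage: w_ij = (1/n) * sum over patterns of p_i * p_j, no self-connections."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, state, max_sweeps=10):
    """Asynchronous updates: each neuron's new output is fed back to all the others."""
    s = list(state)
    n = len(s)
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):
            h = sum(w[i][j] * s[j] for j in range(n))
            new_value = 1 if h >= 0 else -1
            if new_value != s[i]:
                s[i] = new_value
                changed = True
        if not changed:  # reached a stable state (an attractor)
            break
    return s


p1 = [1, 1, 1, 1, -1, -1, -1, -1]
p2 = [1, -1, 1, -1, 1, -1, 1, -1]
w = train_hopfield([p1, p2])
noisy = [-1] + p1[1:]  # p1 with its first bit flipped
restored = recall(w, noisy)
print(restored)  # [1, 1, 1, 1, -1, -1, -1, -1]
```

Starting from `p1` with one bit flipped, the feedback dynamics settle back to `p1`, which is the associative-recall behavior Hopfield networks are known for.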
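For the modular-network entry, a committee of machines can be illustrated by its simplest cooperative scheme: averaging the members' outputs. As assumptions, each member here is a single linear neuron fitted by least squares on a bootstrap resample of hypothetical noisy data (bagging), and the function names are hypothetical. Because squared error is convex, the averaged output can never have a higher mean-squared error than the members' average MSE.

```python
import random


def fit_linear_neuron(xs, ys):
    """Fit one linear neuron y = a*x + b by closed-form least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = cov_xy / var_x if var_x > 0 else 0.0
    return a, mean_y - a * mean_x


def committee_predict(members, x):
    """Cooperative combination: the committee output is the mean of the members' outputs."""
    return sum(a * x + b for a, b in members) / len(members)


def mse(predict, xs, ys):
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(42)
xs = [i / 10 for i in range(30)]
ys = [2.0 * x + 1.0 + rng.gauss(0.0, 0.3) for x in xs]  # hypothetical noisy data

members = []
for _ in range(5):
    # Each member sees a different bootstrap resample of the training data.
    idx = [rng.randrange(len(xs)) for _ in range(len(xs))]
    members.append(fit_linear_neuron([xs[i] for i in idx], [ys[i] for i in idx]))

member_mse = [mse(lambda x, m=m: m[0] * x + m[1], xs, ys) for m in members]
committee_mse = mse(lambda x: committee_predict(members, x), xs, ys)
print(f"committee MSE {committee_mse:.4f} vs mean member MSE "
      f"{sum(member_mse) / len(member_mse):.4f}")
```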
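For the stochastic-network entry, the statistical-sampling view can be sketched with a tiny Boltzmann machine. Binary units are updated one at a time by Gibbs sampling, each switching on with probability equal to the logistic function of its net input divided by a temperature. The two-unit weights, biases, and temperature are hypothetical; with them, the fraction of samples in which the two units agree should approach the Boltzmann-distribution value 1/(1 + e^(-2)) ≈ 0.881.

```python
import math
import random


def gibbs_sweeps(weights, biases, temperature, n_sweeps, seed=0):
    """Sample binary states of a Boltzmann machine with sequential Gibbs updates."""
    rng = random.Random(seed)
    n = len(biases)
    state = [rng.randint(0, 1) for _ in range(n)]
    samples = []
    for _ in range(n_sweeps):
        for i in range(n):
            net = biases[i] + sum(weights[i][j] * state[j] for j in range(n) if j != i)
            # The random variation: unit i turns on with a logistic probability.
            p_on = 1.0 / (1.0 + math.exp(-net / temperature))
            state[i] = 1 if rng.random() < p_on else 0
        samples.append(tuple(state))
    return samples


weights = [[0.0, 4.0], [4.0, 0.0]]  # symmetric coupling (hypothetical values)
biases = [-2.0, -2.0]
samples = gibbs_sweeps(weights, biases, temperature=1.0, n_sweeps=20000)
agree = sum(1 for a, b in samples if a == b) / len(samples)
expected = 1.0 / (1.0 + math.exp(-2.0))
print(round(agree, 3), round(expected, 3))
```

Raising the temperature makes each unit's update closer to a coin flip, which is how such networks trade off exploration against settling into low-energy states.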
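For the dynamic-network entry, the simplest way to add time dependence is a tapped delay line: past outputs and the current external input are fed back in as network inputs. The sketch trains a single linear neuron with the least-mean-squares (delta) rule to identify a hypothetical second-order system. It deliberately omits the nonlinear hidden layers a real dynamic network would have, so it illustrates only the time-dependent part of the description; the system coefficients and names are assumptions.

```python
import random


def delayed_inputs(y, u, t):
    """Tapped delay line: the two previous outputs plus the current external input."""
    return [y[t - 1], y[t - 2], u[t]]


rng = random.Random(1)
n_steps = 3000
u = [rng.uniform(-1.0, 1.0) for _ in range(n_steps)]  # hypothetical excitation signal
y = [0.0, 0.0]
for t in range(2, n_steps):
    # Hypothetical system to be learned: y_t = 0.6*y_{t-1} - 0.2*y_{t-2} + u_t
    y.append(0.6 * y[t - 1] - 0.2 * y[t - 2] + u[t])

weights = [0.0, 0.0, 0.0]
learning_rate = 0.05
for t in range(2, n_steps):
    x = delayed_inputs(y, u, t)
    prediction = sum(w * xi for w, xi in zip(weights, x))
    error = y[t] - prediction
    # Least-mean-squares (delta-rule) update, one time step at a time.
    weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]

print([round(w, 3) for w in weights])  # approaches [0.6, -0.2, 1.0]
```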
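For the neuro-fuzzy entry, the four processes named in Table 7.1 can be traced through a small network in the style of ANFIS (a zero-order Takagi-Sugeno system). Gaussian membership functions fuzzify two inputs, rule firing strengths are formed with the product operator (inference), and the rule outputs are aggregated and defuzzified together as a firing-strength-weighted average of the rule consequents. The fuzzy sets, the target function, and the choice to train only the consequents by gradient descent are assumptions for illustration; ANFIS proper also tunes the membership parameters.

```python
import math

CENTERS = [0.0, 1.0]  # hypothetical "low" and "high" fuzzy sets for each input
SIGMA = 0.5


def fuzzify(x):
    """Fuzzification: membership degree of x in each fuzzy set (Gaussian membership functions)."""
    return [math.exp(-((x - c) ** 2) / (2.0 * SIGMA ** 2)) for c in CENTERS]


def normalized_firing(x1, x2):
    """Inference: one rule per (set on x1, set on x2) pair, fired with the product operator."""
    m1, m2 = fuzzify(x1), fuzzify(x2)
    strengths = [a * b for a in m1 for b in m2]
    total = sum(strengths)
    return [s / total for s in strengths]


def infer(x1, x2, consequents):
    """Aggregation and defuzzification: firing-strength-weighted average of rule outputs."""
    return sum(w * r for w, r in zip(normalized_firing(x1, x2), consequents))


grid = [(i / 10, j / 10) for i in range(11) for j in range(11)]
targets = [x1 + x2 for x1, x2 in grid]  # hypothetical function for the network to learn


def mse(consequents):
    errors = [infer(x1, x2, consequents) - t for (x1, x2), t in zip(grid, targets)]
    return sum(e * e for e in errors) / len(errors)


consequents = [0.0, 0.0, 0.0, 0.0]  # one constant output per rule (zero-order Sugeno)
initial_mse = mse(consequents)
firing = [normalized_firing(x1, x2) for x1, x2 in grid]  # fixed: only consequents are trained
learning_rate = 0.5
for _ in range(1000):
    grad = [0.0] * 4
    for w, t in zip(firing, targets):
        err = sum(wi * ri for wi, ri in zip(w, consequents)) - t
        for k in range(4):
            grad[k] += 2.0 * err * w[k] / len(grid)
    consequents = [r - learning_rate * g for r, g in zip(consequents, grad)]
final_mse = mse(consequents)
print(f"MSE {initial_mse:.3f} -> {final_mse:.5f}")
```

Because the normalized firing strengths sum to 1, the output is always a weighted average of the rule consequents, which is what makes the consequents easy to train like ordinary network weights.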

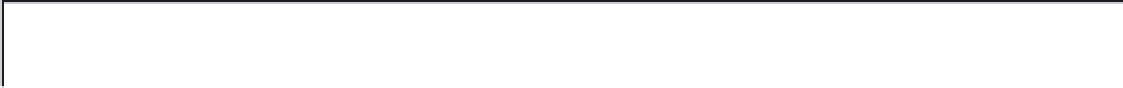

[Figure 7.1 labels: Input Layer, Hidden Layers, Output Layer; Neuron or Processing Element (PE); Weighted link.]

FIGURE 7.1

A fully connected multilayer perceptron (MLP) neural network with a 4 × 5 × 4 × 2 structure. This is a feed-forward architecture: data flow starts from the neurons in the input layer and moves along weighted links to neurons in the hidden layers for processing. Each hidden or output neuron contains a linear discriminant function (a weighted sum) that combines information from all neurons in the preceding layer, usually followed by a nonlinear activation function. The output layer is therefore a complex function of the inputs and of the internal network transformations.
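The forward pass described for Figure 7.1 can be sketched in plain Python for the 4 × 5 × 4 × 2 network. This is a minimal illustration, not the book's implementation: it assumes a logistic (sigmoid) activation after each neuron's weighted sum and small random weights, and the helper names (`init_layer`, `forward`) are hypothetical.

```python
import math
import random


def sigmoid(z):
    """Logistic activation (an assumption; the figure does not name one)."""
    return 1.0 / (1.0 + math.exp(-z))


def init_layer(n_in, n_out, rng):
    """Random weights (n_out rows of n_in values) and biases for one fully connected layer."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [rng.uniform(-0.5, 0.5) for _ in range(n_out)]
    return weights, biases


def forward(x, layers):
    """Propagate an input vector layer by layer, in the forward direction only."""
    activations = list(x)
    for weights, biases in layers:
        # Each neuron forms a weighted sum of every activation in the preceding layer,
        # adds its bias, and applies the nonlinear activation.
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)) + b)
            for row, b in zip(weights, biases)
        ]
    return activations


sizes = [4, 5, 4, 2]  # input, hidden, hidden, output layer sizes from Figure 7.1
rng = random.Random(0)
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes[:-1], sizes[1:])]
output = forward([0.2, 0.7, 0.1, 0.9], layers)  # hypothetical input vector
print(output)  # two values in (0, 1), one per output neuron
```

This network has 4·5 + 5·4 + 4·2 = 48 weights and 5 + 4 + 2 = 11 biases; training it (for example, by backpropagation) would adjust those values and is outside this sketch.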
