
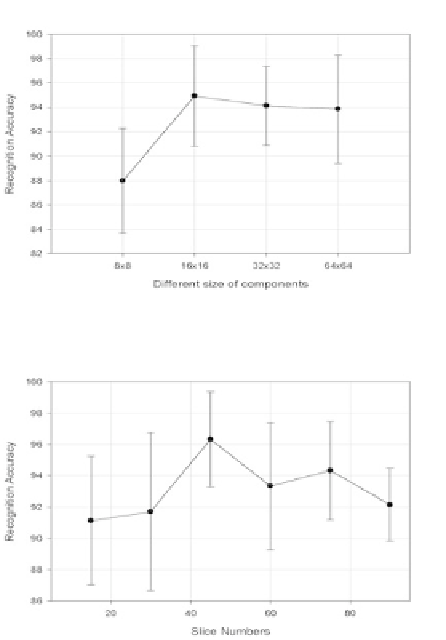

Fig. 4. Performance comparison (%) with features from components of different sizes

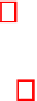

Fig. 5. Performance comparison (%) using AdaBoost on different numbers of slices with multi-classifier fusion (using the median rule)

classifiers, i.e. three support vector machines (SVM) with linear, Gaussian, and polynomial kernels, a boosting classifier (Boosting), and a Fisher linear discriminant classifier (FLD), were chosen as individual classifiers. In our experiments, these classifiers performed better than a Bayesian classifier and a k-nearest neighbor classifier.
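FLD is one of the individual classifiers listed above. As a reminder of how such a classifier reaches a decision, the following is a minimal sketch of the textbook two-class Fisher linear discriminant on 2-D features: project onto w = S_w^{-1}(m1 - m0) and threshold halfway between the projected class means. The text does not give the exact FLD formulation used for the multi-class expression data, so the function names `fld_fit`/`fld_predict`, the midpoint threshold, and the toy points are hypothetical.

```python
def dot(u, v):
    """Dot product of two 2-D vectors."""
    return u[0] * v[0] + u[1] * v[1]


def fld_fit(class0, class1):
    """Hypothetical two-class Fisher discriminant: return (w, threshold)."""
    def mean_vec(points):
        n = len(points)
        return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)

    m0, m1 = mean_vec(class0), mean_vec(class1)

    # Pooled within-class scatter S_w = [[sxx, sxy], [sxy, syy]].
    sxx = sxy = syy = 0.0
    for points, m in ((class0, m0), (class1, m1)):
        for x, y in points:
            dx, dy = x - m[0], y - m[1]
            sxx += dx * dx
            sxy += dx * dy
            syy += dy * dy

    # w = S_w^{-1} (m1 - m0), using the closed-form inverse of a 2x2 matrix.
    det = sxx * syy - sxy * sxy
    d0, d1 = m1[0] - m0[0], m1[1] - m0[1]
    w = ((syy * d0 - sxy * d1) / det, (sxx * d1 - sxy * d0) / det)

    # Decision threshold halfway between the projected class means.
    threshold = (dot(w, m0) + dot(w, m1)) / 2
    return w, threshold


def fld_predict(w, threshold, x):
    """Predict class 1 if the projection of x lies beyond the threshold."""
    return 1 if dot(w, x) > threshold else 0


# Toy 2-D data for two well-separated classes.
class0 = [(1, 2), (2, 1), (2, 3), (3, 2)]
class1 = [(6, 7), (7, 6), (7, 8), (8, 7)]
w, t = fld_fit(class0, class1)
print(w, t)  # (1.25, 1.25) 11.25
print(fld_predict(w, t, (1, 1)), fld_predict(w, t, (8, 8)))  # 0 1
```

Multi-class settings usually either project onto several discriminant directions or combine several two-class discriminants; which variant was used here is not stated.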

To boost the performance of the individual classifiers, three decision rules, i.e. the median, mean, and product rules, are investigated for multi-classifier fusion.
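The three fusion rules can be illustrated with a short sketch. It assumes each individual classifier outputs a per-class score that behaves like a posterior probability (the text does not say how the SVM, Boosting, and FLD outputs were calibrated), combines the scores class by class with the chosen rule, and picks the class with the highest fused score. The helper name `fuse` and the toy scores are hypothetical.

```python
from math import prod
from statistics import mean, median

# Hypothetical fusion helper: scores[k][c] is classifier k's
# posterior-like score for class c.
RULES = {"mean": mean, "median": median, "product": prod}


def fuse(scores, rule="median"):
    """Return the index of the class with the highest fused score."""
    combine = RULES[rule]
    n_classes = len(scores[0])
    # Combine the scores for each class across all classifiers.
    fused = [combine(s[c] for s in scores) for c in range(n_classes)]
    return max(range(n_classes), key=fused.__getitem__)


# Toy scores from five classifiers (e.g. three SVMs, Boosting, FLD)
# over three classes.
scores = [
    [0.6, 0.3, 0.1],
    [0.5, 0.4, 0.1],
    [0.1, 0.8, 0.1],
    [0.7, 0.2, 0.1],
    [0.0, 0.6, 0.4],
]

for rule in ("mean", "median", "product"):
    print(rule, fuse(scores, rule))
# mean 1
# median 0
# product 1
```

In this toy case the median rule follows the three classifiers that favor class 0, whereas a single zero score vetoes class 0 under the product rule, and two confident votes for class 1 pull the mean toward class 1.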

The average accuracies are 94.39%, 95.19%, and 94.39% for the mean, median, and product rules, respectively. Compared with Fig. 4, multi-classifier fusion increases performance by 0.27% when using the median rule, while the other two rules cannot boost the performance of the individual classifiers on this dataset.

Fig. 5 lists the results for feature selection by AdaBoost on different numbers of slices with multi-classifier fusion (using the median rule). It can be observed that the average performance reaches its best rate (96.32%) with 45 slices.

Table 1 compares our methods, CSF, CSF with multi-classifier fusion (CSFMC), and Boosted CSF with multi-classifier fusion (BCSFMC), with some other methods, providing the overall results obtained on the Cohn-Kanade Database in terms of the number of people (PN), the number of sequences (SN), expression classes (CN), with different
